Steemit Crypto Academy Contest / S17W6 [SUMMARY]: User Engagement and Retention on Steem

Introduction

Hello Steemians,

Welcome to our weekly report dedicated to evaluating entries in the Steemit Engagement Challenge (SEC) Season 17 Week 6 competition. This report highlights the efforts, innovation and engagement of participants who explored the crucial topic of user engagement and retention on the Steem platform.

This week we saw a variety of unique strategies and innovative ideas implemented by members of our community. Our objective through this report is to provide a detailed analysis of the proposals, to measure their effectiveness and to assess the different approaches aimed at strengthening interaction within our network.

We'll also look at how these entries reflect our users' expectations and how they align with Steem's long-term goals for user engagement and retention. This report aims not only to recognize significant contributions but also to offer constructive feedback that can guide our participants in their future initiatives on the platform.

Join us to discover this week's highlights, where innovation meets engagement, and where every contribution brings us one step closer to our common goal: creating a more engaged and sustainable Steem community.

Participation statistics

During the sixth week of the seventeenth season of the SEC, focused on user engagement and retention on the Steem platform, we welcomed 17 participants. This strong turnout demonstrates the community's keen interest in enhancing interactions and commitment within our network.

All 17 entries submitted were original and complied with the rules, showcasing the creativity and effort of our participants. This adherence to guidelines ensures that the competition maintains high ethical standards and promotes genuine engagement and understanding of the subject matter.

The absence of AI-generated submissions highlights the participants' commitment to authentic and personal contributions, which is crucial for fostering a rich and interactive community dialogue. This success encourages us to continue promoting clear communication about the rules and expectations of our competitions, ensuring fairness and integrity in all entries.

| Total number of entries | Invalid entries | Plagiarized content |
|---|---|---|
| 17 | 0 | 0 |

The two charts below summarize how the entries were rated.

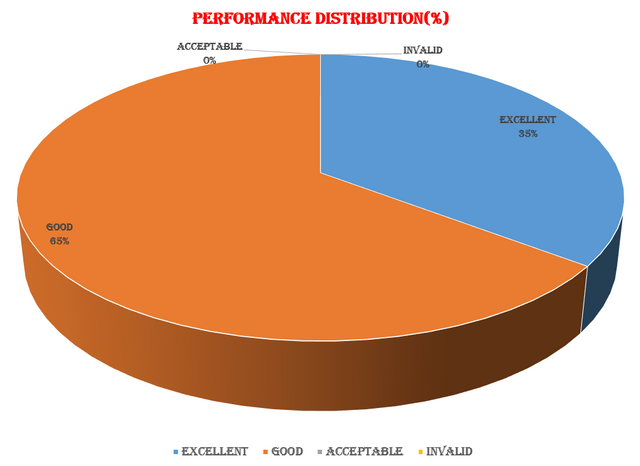

General Performance:

- The majority of participants (65%) were rated as having "Good" performance, suggesting that most met the event expectations with competence and effectiveness.

- A significant proportion (35%) demonstrated exceptional understanding and application of the tasks, rated as "Excellent".

- Interestingly, there are no participants rated as "Acceptable" or "Invalid", indicating a high overall quality of submissions where each entry either met a good standard or excelled.

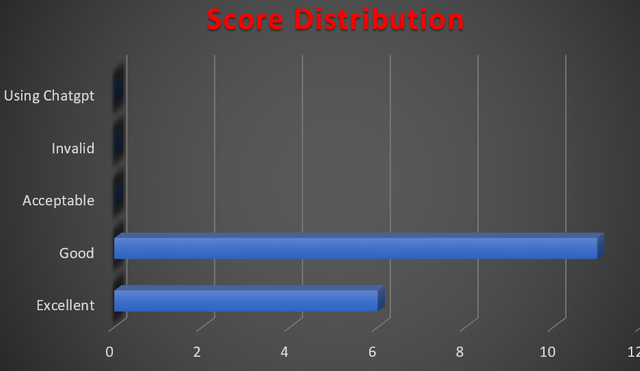

Numerical Distribution of Scores:

- The "Good" category, representing the largest share, includes 11 of the 17 participants, consistent with the 65% share seen in the performance distribution.

- Participants classified as "Excellent" number 6 (about 35%), which reflects the higher standard required for this rating.

- The absence of scores in the "Using ChatGPT," "Invalid," and "Acceptable" categories suggests strict adherence to competition guidelines and a focused submission pool.
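
As a quick consistency check between the two charts, the percentages can be recomputed from the head counts. The snippet below is only an illustrative sketch: the `counts` dictionary and the `share` helper are hypothetical names introduced here, and the figures are the ones quoted in this report (11 "Good" and 6 "Excellent" out of 17 entries).

```python
# Counts per rating category, as quoted in this report (assumed, not re-measured).
counts = {
    "Excellent": 6,
    "Good": 11,
    "Acceptable": 0,
    "Invalid": 0,
    "Using ChatGPT": 0,
}

# Total number of entries across all categories.
total = sum(counts.values())


def share(category: str) -> int:
    """Return the category's share of all entries, rounded to a whole percent."""
    return round(100 * counts[category] / total)


for name, n in counts.items():
    print(f"{name}: {n} entries ({share(name)}%)")
```

With these inputs, 11 of 17 rounds to 65% and 6 of 17 rounds to 35%, which matches the shares reported under General Performance.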

Points to Note:

- The results showcase strong engagement and robust performances from participants, with the distribution heavily skewed towards the "Good" and "Excellent" categories.

- The absence of any entries in the lower categories or flagged for using AI tools like ChatGPT indicates good compliance with the rules and high-quality contributions.

- The complete lack of "Invalid" or "Acceptable" scores reflects positively on the relevance and quality of the submissions to the competition requirements.

This interpretation highlights the competitive nature of the event and underscores the participants' commitment to quality and excellence in their submissions.

Top 3 users this week

In our evaluation process, we first assessed the quality of the participants' performances, with a focus on their interactions with fellow contestants.

To choose the top three participants, we considered their performance scores and how they engaged with others. We also made a special selection based on a standout article that provided exceptional content on the discussion topic. Here are the top three participants we have acknowledged this week.

| Ranking | Username | Article |
|---|---|---|
| 1 | @dove11 | Link |
| 2 | @bonaventure24 | Link |
| 3 | @ahsansharif | Link |

Conclusion

In summary, this week's review showcased the remarkable skills and dedication of our participants. Their effective interaction and insightful contributions have significantly enriched our discussions. We commend all competitors for their hard work and commitment, and we are eager to see how they will continue to excel and inspire in upcoming sessions. Congratulations to our top performers, and a big thank you to everyone who played a part in making this week a success.

I feel honoured 🎖 to be among the winners of this wonderful challenge 🙏. It was indeed a wonderful expedition for me, and coming out on top means a lot to me.

Congratulations 🎊 👏 to my fellow winners @dove11 and @ahsansharif. Let's continue to steem to the moon.

Thanks, dear. Congratulations to you too.

Alright 👍

Thank you so much for the selection, which will certainly make me a better Steemian, one who looks for ways to make this platform a better place for all and sundry. We need a group to look for changes that improve this platform. Thank you once again.

Thank you so much for choosing me as a winner. Thanks to the Crypto Academy team for trusting me. Congrats 🎉 to all my fellow winners. Keep up the good work.