SLC S23W5 - Real-Time Accessibility Feedback App – Implementing Image Text Extraction in Flutter

Hello everyone!

I warmly welcome you to the 5th week of the Steemit Learning Challenge, and I especially invite you to the Technology and Development Club, where you can share and learn about tech.

I am working on a real-time accessibility feedback application. Last week we developed the language translation feature, which detects the language of the input automatically and then translates the text into the selected language. Today I will develop an image text extraction feature for this application. A common problem is that we have an image with text on it and want to extract that text in one click, but there is no easy way to do it. This feature lets users extract the text simply by selecting an image.

The image text extraction feature will have the following attributes:

- Image picking from gallery and camera

- Automatic extraction of text

- Text copy option

- Read aloud option

- Save extracted text as a file and share

- Urdu translation of the extracted text

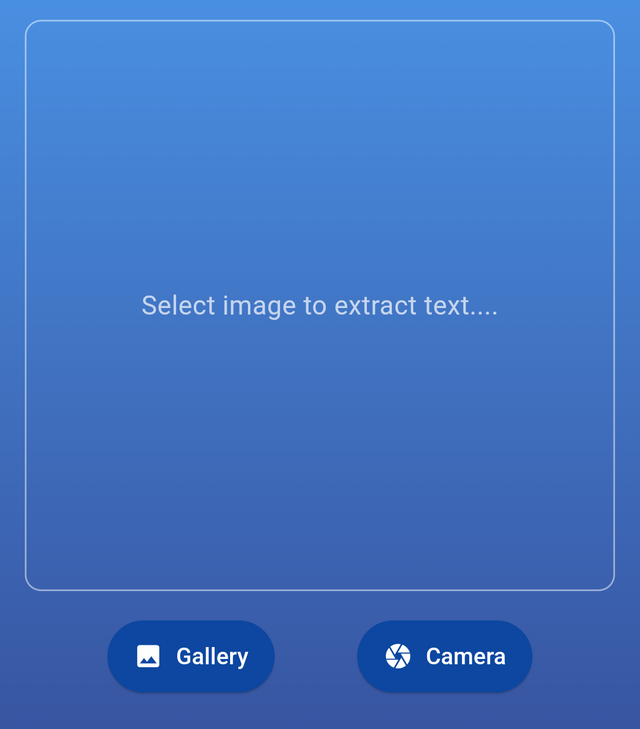

This screen will have the following design elements to make it look better:

- App Bar

- Image input section

- Gallery button to open gallery and select image

- Camera button to open camera and capture image

- Some other useful buttons

- An output section to display the Urdu translation

Let us build the user interface of the image extraction screen first so that we can implement the functionality accordingly.

This is the app bar of the screen. It has a gradient background that combines two colours, and the title is centred in white, which makes it look more attractive.

Then, in the body of the screen, I have used a container with the same gradient for a consistent UI. This is the first design element of the image extraction screen. Since the complete interface is vertical, I have used a Column widget. The layout is very simple and easy to use.
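The app bar and body described above can be sketched roughly as follows. This is only a minimal sketch: the class name `ImageTextScreen`, the title string, and the two gradient colours are assumptions for illustration, since the post does not state them.

```dart
import 'package:flutter/material.dart';

// Hypothetical colours; the post does not name the two gradient colours.
const LinearGradient screenGradient = LinearGradient(
  colors: [Color(0xFF4A00E0), Color(0xFF8E2DE2)],
  begin: Alignment.topLeft,
  end: Alignment.bottomRight,
);

class ImageTextScreen extends StatelessWidget {
  const ImageTextScreen({super.key});

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        centerTitle: true,
        title: const Text(
          'Image Text Extractor', // hypothetical title
          style: TextStyle(color: Colors.white),
        ),
        // flexibleSpace paints the gradient behind the centred title.
        flexibleSpace: Container(
          decoration: const BoxDecoration(gradient: screenGradient),
        ),
      ),
      // The body reuses the same gradient so the screen looks consistent.
      body: Container(
        width: double.infinity,
        decoration: const BoxDecoration(gradient: screenGradient),
        child: const Column(
          children: [
            // Image preview, Gallery/Camera buttons, action buttons and
            // the output section would be stacked here, top to bottom.
          ],
        ),
      ),
    );
  }
}
```

Using `flexibleSpace` is one common way to put a gradient behind an `AppBar`, because the `backgroundColor` property only accepts a single colour.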

This is the section of the interface that is used to pick the image. The picked image is displayed in the rounded section of the screen. To pick the image I have used the image_picker package, which allows the application to pick an image from the gallery or capture one directly with the camera.

There are two buttons to pick the image: Gallery and Camera. If the image is already in the gallery, the user can tap Gallery, which opens the gallery so the image can be picked easily. If the user wants to capture a new image and extract its text, the Camera button opens the camera and the captured image is used directly.

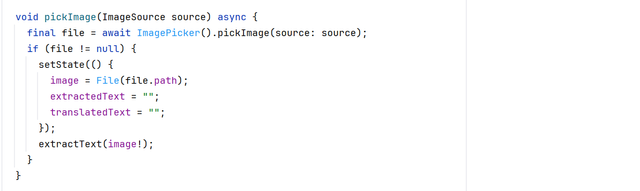

This is the image picker function, which lets the application pick an image. It uses the default function provided by the image_picker package, with the source of the image (gallery or camera) passed in. It checks whether the user actually picked an image, then updates the state of the screen and resets the text variables to empty strings. The picked image is then passed to the function that extracts the text from it.
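The picking flow described above might look something like this. It is a sketch, not the exact code from the screenshot: the widget name `PickerDemo`, the field names, and the placeholder `_extractText` are assumptions. `ImagePicker.pickImage` and `ImageSource` come from the image_picker package.

```dart
import 'dart:io';

import 'package:flutter/material.dart';
import 'package:image_picker/image_picker.dart';

class PickerDemo extends StatefulWidget {
  const PickerDemo({super.key});

  @override
  State<PickerDemo> createState() => _PickerDemoState();
}

class _PickerDemoState extends State<PickerDemo> {
  final ImagePicker _picker = ImagePicker();
  File? _image; // hypothetical field names
  String _extractedText = '';
  String _translatedText = '';

  Future<void> _pickImage(ImageSource source) async {
    final XFile? picked = await _picker.pickImage(source: source);
    // pickImage returns null when the user cancels.
    if (picked == null || !mounted) return;

    final file = File(picked.path);
    setState(() {
      _image = file;
      _extractedText = ''; // clear results from the previous image
      _translatedText = '';
    });
    await _extractText(file);
  }

  // Placeholder: the real function runs text recognition on the image.
  Future<void> _extractText(File image) async {}

  @override
  Widget build(BuildContext context) {
    return Column(
      children: [
        if (_image != null) Image.file(_image!, height: 200),
        Row(
          mainAxisAlignment: MainAxisAlignment.spaceEvenly,
          children: [
            ElevatedButton.icon(
              onPressed: () => _pickImage(ImageSource.gallery),
              icon: const Icon(Icons.photo_library),
              label: const Text('Gallery'),
            ),
            ElevatedButton.icon(
              onPressed: () => _pickImage(ImageSource.camera),
              icon: const Icon(Icons.camera_alt),
              label: const Text('Camera'),
            ),
          ],
        ),
        Text(_extractedText),
        Text(_translatedText),
      ],
    );
  }
}
```

Both buttons call the same function and differ only in the `ImageSource` they pass, which keeps the gallery and camera paths identical after the image is chosen.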

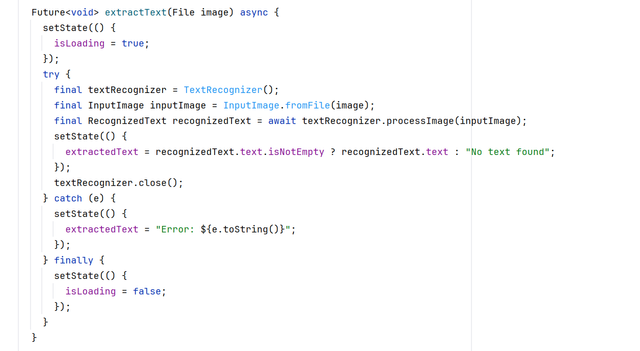

This is the function that extracts the text from the image. It takes the image picked by the user and wraps the extraction in a try/catch block. It uses the TextRecognizer class from the Flutter package google_mlkit_text_recognition. This package is part of Google's ML Kit and handles extracting text from images.
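A minimal sketch of such an extraction function is shown below. The function name `extractTextFromImage`, the Latin script choice, and the error message are assumptions; `TextRecognizer`, `InputImage.fromFile`, `processImage`, and `close` are part of google_mlkit_text_recognition.

```dart
import 'dart:io';

import 'package:google_mlkit_text_recognition/google_mlkit_text_recognition.dart';

/// Returns the text found in [image], or an error message on failure.
Future<String> extractTextFromImage(File image) async {
  // Assumption: Latin script. Other scripts need a different
  // TextRecognitionScript value.
  final recognizer = TextRecognizer(script: TextRecognitionScript.latin);
  try {
    final inputImage = InputImage.fromFile(image);
    final RecognizedText result = await recognizer.processImage(inputImage);
    // result.text holds the full text; result.blocks and their lines
    // give the same content split by position on the image.
    return result.text;
  } catch (e) {
    return 'Failed to extract text: $e';
  } finally {
    // Release the native recognizer even when an error occurs.
    await recognizer.close();
  }
}
```

Closing the recognizer in `finally` means the native resources are freed on both the success and the error path.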

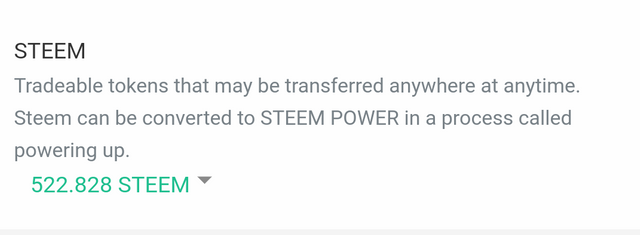

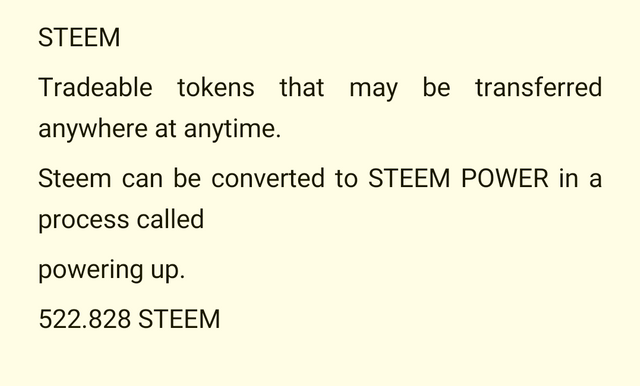

I have selected this image in the application to extract text from. It serves as the input image.

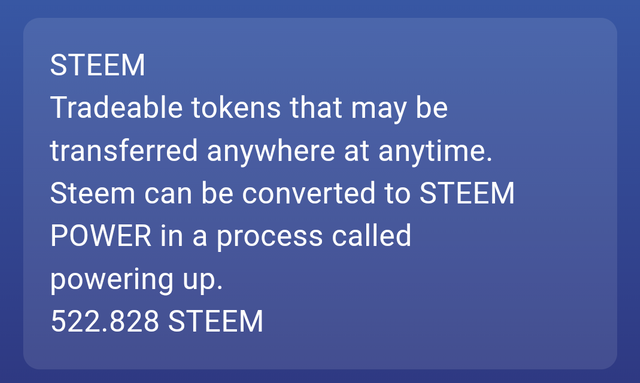

Here you can see the output, where the application has extracted the text from the image. The extracted text matches the text on the image, and it is extracted line by line, following the arrangement on the image.

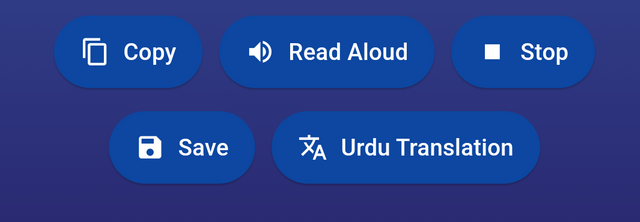

These are the 5 buttons that improve the user experience in this application: copy, read aloud, stop, save, and translate to Urdu. Each button has its own specific job.

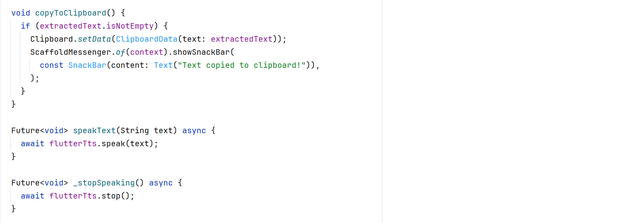

This is the code behind the copy button, which copies the extracted text to the clipboard. The next small function speaks the text aloud, which is achieved with the help of the flutter_tts package. The button after that stops the speech, which is also achieved with flutter_tts.
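The three small handlers described above could be written roughly like this. The function names are assumptions; `Clipboard.setData` is from Flutter's services library and `speak`/`stop` are from flutter_tts.

```dart
import 'package:flutter/services.dart';
import 'package:flutter_tts/flutter_tts.dart';

// One shared text-to-speech engine for the screen (hypothetical name).
final FlutterTts tts = FlutterTts();

/// Copies the extracted text to the system clipboard.
Future<void> copyText(String text) async {
  if (text.isEmpty) return; // nothing to copy yet
  await Clipboard.setData(ClipboardData(text: text));
}

/// Reads the extracted text aloud.
Future<void> speakText(String text) async {
  if (text.isEmpty) return;
  await tts.speak(text);
}

/// Stops any speech that is currently playing.
Future<void> stopSpeaking() async {
  await tts.stop();
}
```

Each handler can be passed straight to a button's `onPressed`, for example `onPressed: () => copyText(extractedText)`, where `extractedText` stands for whatever variable holds the recognised text.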

Here I have translated the text extracted from the image. It is also working fine and translates the extracted text into Urdu.

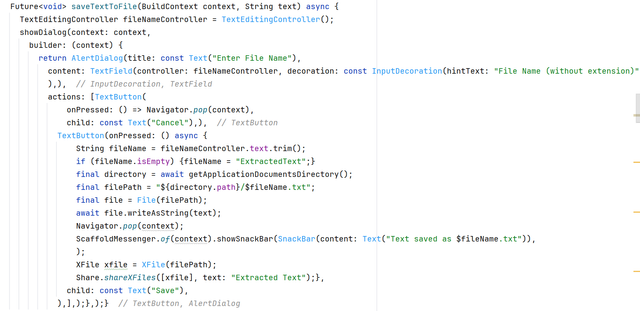

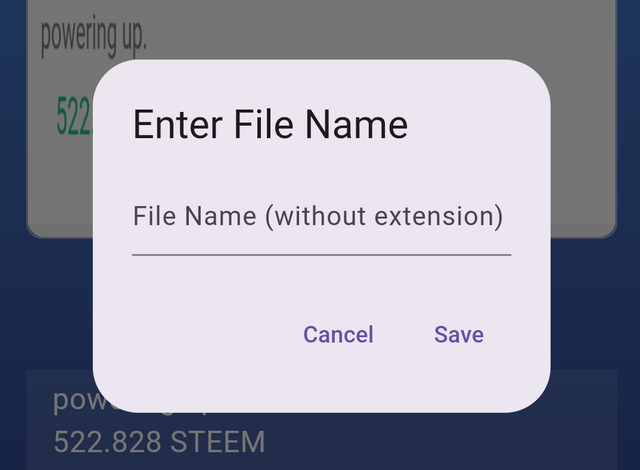

This is the code to save the file and share it. When the user clicks on the save button, an AlertDialog pops up and asks for the name of the file. The user has to enter the file name without the extension. path_provider is used to get the directory, and the file is saved to internal storage. The file contains all of the extracted text.
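The saving step can be sketched in plain Dart as below. The helper names `buildTextFileName` and `saveExtractedText`, the fallback name, and the `.txt` extension are assumptions. In the app, the directory would typically come from path_provider, for example `getApplicationDocumentsDirectory()`; here it is passed in as a parameter so the sketch has no plugin dependency.

```dart
import 'dart:io';

/// Builds a safe file name from what the user typed (without extension).
/// Characters that are invalid in file names are replaced with '_'.
String buildTextFileName(String rawName) {
  final cleaned = rawName.trim().replaceAll(RegExp(r'[\\/:*?"<>|]'), '_');
  // Fall back to a default name when the user leaves the field empty.
  return '${cleaned.isEmpty ? 'extracted_text' : cleaned}.txt';
}

/// Writes [text] into [directory] and returns the created file.
Future<File> saveExtractedText({
  required Directory directory,
  required String rawName,
  required String text,
}) {
  final path =
      '${directory.path}${Platform.pathSeparator}${buildTextFileName(rawName)}';
  return File(path).writeAsString(text);
}
```

Keeping the file-name logic in its own function makes it easy to check separately from the dialog and the storage plugin.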

This is how the alert dialog appears on the screen for the user.
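A dialog of this kind might be built as follows. This is a sketch with assumed names and labels (`askForFileName`, the title, the hint text), not the exact dialog from the screenshot.

```dart
import 'package:flutter/material.dart';

/// Shows a dialog asking for a file name.
/// Completes with the trimmed name, or null if the user cancels.
Future<String?> askForFileName(BuildContext context) {
  // Not disposed here to keep the sketch short; a production version
  // would own the controller in a StatefulWidget and dispose it there.
  final controller = TextEditingController();
  return showDialog<String>(
    context: context,
    builder: (dialogContext) => AlertDialog(
      title: const Text('Save extracted text'), // hypothetical label
      content: TextField(
        controller: controller,
        decoration: const InputDecoration(
          hintText: 'File name (without extension)',
        ),
      ),
      actions: [
        TextButton(
          onPressed: () => Navigator.pop(dialogContext),
          child: const Text('Cancel'),
        ),
        TextButton(
          // Pops the dialog and hands the typed name back to the caller.
          onPressed: () =>
              Navigator.pop(dialogContext, controller.text.trim()),
          child: const Text('Save'),
        ),
      ],
    ),
  );
}
```

The caller can `await` the result and skip saving when it is null or empty.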

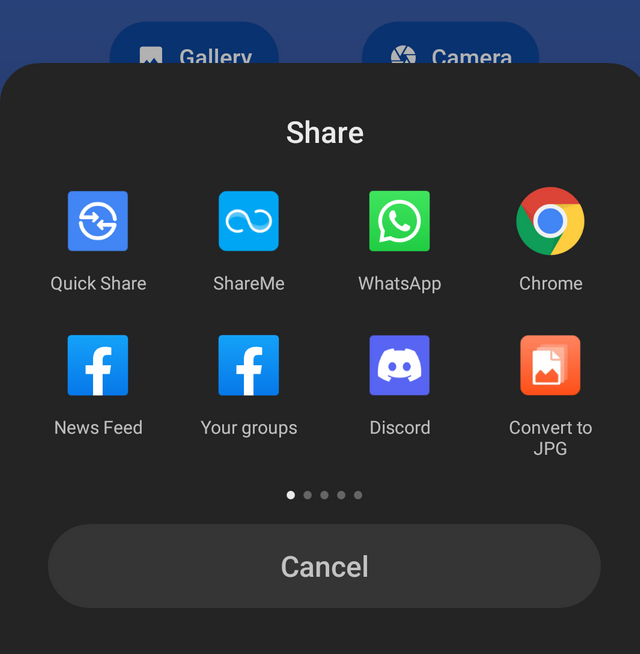

After saving, the user automatically sees a pop-up to share the file to social media or any other application. The user can share the file to WhatsApp directly after extracting the text from the image. This sharing functionality is achieved with the share_plus package.
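Opening the share sheet for the saved file could look like the sketch below. The function name and the message text are assumptions. `Share.shareXFiles` is available in many share_plus releases, but the package's entry point has changed between major versions, so the call should be checked against the installed version.

```dart
import 'dart:io';

import 'package:share_plus/share_plus.dart';

/// Opens the platform share sheet for the saved text file.
Future<void> shareTextFile(File file) async {
  await Share.shareXFiles(
    [XFile(file.path)], // XFile is re-exported by share_plus
    text: 'Text extracted from an image', // hypothetical message
  );
}
```

Calling this right after the file is written gives the "save, then share" flow described above.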

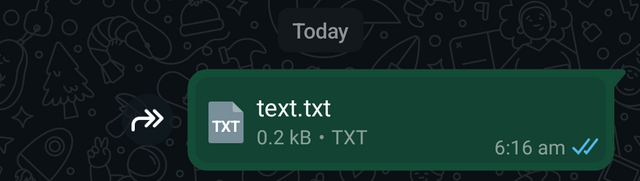

Here you can see that I have shared this text file to WhatsApp and it worked successfully.

I have opened the shared file in WPS and it is showing the exact text which I extracted from the image.

So this feature of the real-time accessibility feedback app helps the user to extract the text from an image in one click, and the user can then save this text as a file in internal storage or share it anywhere, including on social media.

I am still working on this project and it will have many more features. So far, in week 1 I shared the login and sign up screens with complete user authentication. In week 2 I shared the issue page and location picker screens with their user interface, along with Firebase Authentication, Cloudinary Storage and the Firestore Database. In week 3 I designed the reports tracking page, the admin dashboard, and the issue details page with a comment section, and made all the pages work. In week 4 I developed the complete language translation feature of this application, which detects the language automatically and translates between the selected languages. The user can listen to the translated text as well. And today I have developed an image text extraction feature to extract the text from an image and share it anywhere.

GitHub Repository

In this GitHub repository I have shared login_screen.dart, signup_screen.dart, splash_screen.dart, issue_page.dart, location_picker_page.dart and other screens for learning purposes only. As I progress, I will share the other files as well.

What I have learnt

I have learnt these things while working on the project:

Firebase authentication and Firestore integration

Handling user inputs and validation

State management in Flutter while managing the user session and controlling the user inputs through controllers.

Enhanced user experience and UI design with the implementation of the multi-step sign up process. The use of the gradient in the layout to make it look more attractive.

Error handling and debugging. I managed the error handling of the duplicate accounts, invalid credentials and empty fields.

Image uploading and accessing it via Cloudinary.

Storing the data in the Firestore database.

Implementation of OpenStreetMap and Nominatim API.

Tracking of the reports.

Handling comment section on the basis of the user role.

Language translation.

Image extraction.

What can you share in the club?

Our club is all about technology and development including:

- Web & Mobile Development

- AI & Machine Learning

- DevOps & Cloud Technologies

- Blockchain & Decentralized Applications

- Open-source Contributions

- Graphic Design & UI/UX

Any posts related to technology, reviews, information, tips, and practical experience must include original pictures, real-life reviews of the product, the changes it has brought to you, and a demonstration of practical experience.

The club is open to everyone. Even if you're not interested in development, you can still share ideas for projects, and maybe someone will take the initiative to work on them and collaborate with you. Don't worry if you don't have much IT knowledge; just share your great ideas with us and provide feedback on the development projects. For example, if I create an extension, you can give your feedback as a user and test the specific project, and that will make you an important part of our club. We encourage people to talk and share posts and ideas related to Steemit.

For more information about the #techclub you can go through A New Era of Learning on Steemit: Join the Technology and Development Club. It has all the details about posting in the #techclub, and if you have any doubts or need clarification you can ask.

Our Club Rules

To ensure a fair and valuable experience, we have a few rules:

- No AI generated content. We want real human creativity and effort.

- Respect each other. This is a learning and collaborative space.

- No simple open source projects. If you submit an open source project, ensure you add significant modifications or improvements and share the source of the open source.

- Project code must be hosted in a Git repository (GitHub/GitLab preferred). If privacy is a concern, provide limited access to our mentors.

- For any post published in our club with the main tag #techclub, please mention the mentors @kafio @mohammadfaisal @alejos7ven

- Use the tag #techclub, #techclub-s23w5, #country, other specific tags relevant to your post.

- In this week's #techclub you can participate from Monday, March 17, 2025, 00:00 UTC to Sunday, March 23, 2025, 23:59 UTC.

- Post the link to your entry in the comments section of this contest post. (Must)

- Invite at least 3 friends to participate.

- Try to leave valuable feedback on other people's entries.

- Share your post on Twitter and drop the link as a comment on your post.

Each post will receive a major review according to the working schedule, covering all the information about the post, such as AI, plagiarism, creativity, originality, and suggestions for improvement.

Other team members will also try to give their suggestions as ordinary users, but the major review will be the one that contains all the details.

Rewards System

Sc01 and Sc02 will be visiting the posts of the users and participating teaching teams and learning clubs and upvoting outstanding content. Upvote is not guaranteed for all articles. Kindly take note.

Each week we will select the top 4 posts that have outperformed the others while maintaining the quality of their entry, interaction with other users, unique ideas, and creativity. These top 4 posts will receive additional winning rewards from SC01/SC02.

Note: If we find a comment that is more valuable and outstanding than a post, we can select that comment instead of the post.

Technology and Development Club Team

https://x.com/stylishtiger3/status/1901142777850712073

Can Flutter be used to build mobile applications for the Steem blogging platform, something like Steem Mobile, maybe?

Yes, we can use the Flutter framework.
