MIT’s Deep Empathy: Emotional Manipulation for Social Good Sets a Bad Precedent

In the right hands, can AI be used as a tool to engender empathy? Can algorithms learn what makes us feel more empathic toward people who are different from us? And if so, can they help convince us to act or donate money to help?

These are the questions that researchers at the MIT Media Lab’s Scalable Cooperation Lab and UNICEF’s Innovation Lab claim to address in a project called Deep Empathy.

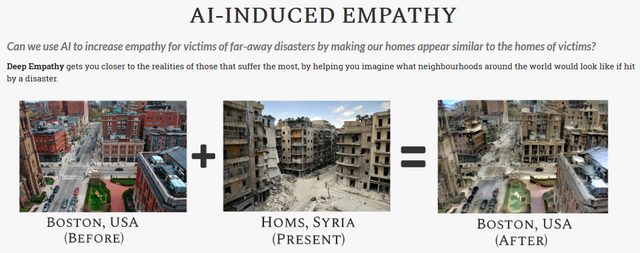

Image of Deep Empathy's website

First, the researchers trained an AI to transform images of North American and European cities into what they might look like if they were as war-torn as Syria is today. It does this using so-called "neural style transfer", which keeps the content of one image while applying the style of another. The technique was originally used to make your photographs look as if Picasso, Van Gogh, or Munch had painted them. Thus, their algorithm spits out images of Boston, San Francisco, London, and Paris as bombed-out shells, with dilapidated buildings under an ashen sky.

Image of Boston from MIT Media Lab
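To make "keeps the content of one image while applying the style of another" a little more concrete, here is a toy sketch of the two loss terms in the classic style transfer formulation (Gatys et al.):

- **Content loss** compares feature maps position by position, so it preserves layout.
- **Style loss** compares Gram matrices, which record which features occur together but not where, so it captures texture and color.

This is a sketch under stated assumptions, not the Deep Empathy code:

- In the real technique, the feature maps come from a pretrained convolutional network, and the output image is optimized by gradient descent. Here they are tiny hand-written lists.
- The function names and the `alpha`/`beta` weights are hypothetical.

```python
from typing import List

# A toy "feature map": one row per channel, one column per spatial position.
# (Assumption: a real CNN layer would be flattened from channels x height x width.)
FeatureMap = List[List[float]]


def gram_matrix(features: FeatureMap) -> FeatureMap:
    """Channel-by-channel correlations, averaged over positions.

    Summing over positions discards *where* a feature occurs and keeps only
    *which* features occur together, which is why it captures "style".
    """
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n_positions for row_j in features]
        for row_i in features
    ]


def mean_squared_error(a: FeatureMap, b: FeatureMap) -> float:
    """Average squared difference between two equally shaped matrices."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def content_loss(generated: FeatureMap, content: FeatureMap) -> float:
    # Position-by-position comparison: keeps the layout of the content image.
    return mean_squared_error(generated, content)


def style_loss(generated: FeatureMap, style: FeatureMap) -> float:
    # Gram-matrix comparison: matches texture/color statistics regardless of position.
    return mean_squared_error(gram_matrix(generated), gram_matrix(style))


def total_loss(generated: FeatureMap, content: FeatureMap, style: FeatureMap,
               alpha: float = 1.0, beta: float = 1000.0) -> float:
    # Hypothetical weights: the alpha/beta balance trades "still looks like
    # the city" against "looks like the style image".
    return alpha * content_loss(generated, content) + beta * style_loss(generated, style)


if __name__ == "__main__":
    city = [[1.0, 2.0], [3.0, 4.0]]      # toy features of a city photo
    shuffled = [[2.0, 1.0], [4.0, 3.0]]  # same features, positions swapped
    print(gram_matrix(city))             # [[2.5, 5.5], [5.5, 12.5]]
    print(content_loss(shuffled, city))  # 1.0: the layout differs
    print(style_loss(shuffled, city))    # 0.0: the "style" is identical
```

The shuffled example shows the key design choice. Moving features around changes the content loss but leaves the style loss at zero, so style transfer can repaint a city's surfaces while keeping its streets and skyline in place.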

Now the researchers also hope to train a second AI to predict whether one image will engender more empathy than another. The project’s website now hosts a survey that asks you to choose between two images. The answers become training data for an algorithm that could "help nonprofits determine which photos to use in their marketing so people are more likely to donate". So far, people from 90 countries have labeled 10,000 images for how much empathy they induce.
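The project does not say how the survey answers will be turned into training labels. One common way to convert pairwise "which one moves you more?" choices into a per-image score is a Bradley-Terry model. It assumes image i is preferred over image j with probability p_i / (p_i + p_j).

Below is a minimal sketch that fits those scores with the standard minorize-maximize (MM) update. It illustrates the general technique, not the Deep Empathy pipeline. The image IDs and the `fit_bradley_terry` helper are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record is (winner, loser): the image a participant judged more empathy-inducing.
Comparison = Tuple[str, str]


def fit_bradley_terry(comparisons: List[Comparison], iterations: int = 100) -> Dict[str, float]:
    """Hypothetical helper: fit Bradley-Terry strengths with the MM update
    p_i <- W_i / sum_j (n_ij / (p_i + p_j)), renormalized to sum to 1.

    W_i is the number of wins for image i and n_ij the number of times
    images i and j were compared. An image that never wins ends up at 0.
    """
    if not comparisons:
        return {}

    wins: Dict[str, int] = defaultdict(int)
    pair_counts: Dict[Tuple[str, ...], int] = defaultdict(int)  # unordered pair -> n_ij
    items = set()
    for winner, loser in comparisons:
        wins[winner] += 1
        pair_counts[tuple(sorted((winner, loser)))] += 1
        items.update((winner, loser))

    scores = {item: 1.0 for item in items}  # start with equal strengths
    for _ in range(iterations):
        denominators = {item: 0.0 for item in items}
        for (i, j), n_ij in pair_counts.items():
            shared = n_ij / (scores[i] + scores[j])
            denominators[i] += shared
            denominators[j] += shared
        updated = {item: wins[item] / denominators[item] for item in items}
        total = sum(updated.values())
        scores = {item: value / total for item, value in updated.items()}
    return scores


if __name__ == "__main__":
    # Image "A" was picked over image "B" in three of four hypothetical survey answers.
    answers = [("A", "B"), ("A", "B"), ("A", "B"), ("B", "A")]
    print(fit_bradley_terry(answers))  # A ≈ 0.75, B ≈ 0.25
```

With two images, the fitted strengths simply reproduce the observed preference rate (3 of 4 gives 0.75 versus 0.25). With many images and sparse comparisons, the model pools evidence across all pairs, which is why it is a common choice for crowd-sourced pairwise labels.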

The claim is that "in a research and nonprofit context, helping people connect more to disasters that can feel very far away–and helping nonprofits harness that energy to help people in need–feels like a worthy way of using machine learning." And that is *precisely* what we should be worried about. If Facebook did this for their marketing, everyone would rightfully go berserk. So why do researchers and non-profits get a free pass (especially in these days of political agendas and outright fraud)?

Source: https://www.fastcodesign.com/90154086/mit-trained-an-ai-to-tug-at-your-heartstrings-and-pursestrings

Even if you slightly reword a post, you still need to list a source.

Failing to indicate that content you copy and paste is not your original work could be seen as plagiarism.

Some tips to share content and add value:

Repeated plagiarized posts are considered spam. Spam is discouraged by the community and may result in action from the cheetah bot.

Creative Commons: If you are posting content under a Creative Commons license, please attribute and link according to the specific license. If you are posting content under CC0 or Public Domain please consider noting that at the end of your post.

If you are actually the original author, please do reply to let us know!

Thank You!

I suspect that someone in a hurry didn't read my post and didn't realize how much the referenced post pulled from the Deep Empathy website (which was my primary source and what I pointed at several times).

I used ordinary quotes rather than HTML tags or Markdown.

The post you are pointing at was very much in favor of the research. My conclusion was very different.

The claim is that "in a research and nonprofit context, helping people connect more to disasters that can feel very far away–and helping nonprofits harness that energy to help people in need–feels like a worthy way of using machine learning." And that is precisely what we should be worried about. If Facebook did this for their marketing, everyone would rightfully go berserk. So why do researchers and non-profits get a free pass (especially in these days of political agendas and outright fraud)?

===================================================================

I would appreciate it if this comment were removed.

Hi! I am a robot. I just upvoted you! I found similar content that readers might be interested in:

https://www.fastcodesign.com/90154086/mit-trained-an-ai-to-tug-at-your-heartstrings-and-pursestrings

Come and learn how AI processes images :)

This has potential for weaponization, and it takes a very centralized, top-down approach to dealing with human nature. I prefer bottom-up, where individuals can augment their good nature using AI, rather than having spinmasters use AI to manipulate people for personal gain, national interest, or whatever other bias they hold.

Perfect AI for psychological operations. Dangerous, as it can be used to create moral outrage and hysteria.

What is the best way to contact you?
