The value path of big data

When people talk about big data, the first thing that usually comes to mind is the 4 Vs proposed in the book "Big Data Era": massive data volume (volume), fast-flowing and dynamic data (velocity), diverse data types (variety), and huge data value (value).

The first three Vs describe characteristics of the data itself. Many companies in the big data industry have introduced storage and data processing solutions to deal with the technical challenges these characteristics create. The early gold prospectors made a fortune and left behind server rooms full of data. But what about the value?

The last V, value, has been far less satisfying.

Take Palantir, the best-known company in the industry, as an example. Its co-founder is Peter Thiel, the famous Silicon Valley investor and entrepreneur and a co-founder of PayPal. Its first and largest customer is the CIA, which it assists in counterterrorism. It is said that with Palantir's help the CIA tracked down Bin Laden, and the company became famous for this. Its latest financing round raised USD 450 million at a valuation of USD 20 billion, making it the most highly valued startup after Uber, Airbnb, and Xiaomi.

But recent news has exposed a series of problems at Palantir. Last year at least three major customers, Coca-Cola, American Express, and Nasdaq, terminated their contracts. On the one hand, these customers complained that the fees were too high, as much as $1 million per month, and far from worth it. On the other hand, customers found working with the company's young engineers a headache.

Palantir announced bookings of US$1.7 billion for the whole of last year, but its actual revenue was only US$450 million. Bookings represent what customers may eventually pay, including many trial periods and the contract value of free users. The large gap between the two figures suggests that relatively few customers actually convert into paying users.

Palantir's situation shows that realizing the "huge data value" of big data is not easy.

There is indeed a lot of value hidden in big data, but that value is realized not by data analysis alone, but by bringing data together with business scenarios.

Palantir's data practice illustrates several problems:

The value of data is closely tied to industry scenarios. Palantir is good at catching bad guys: it links large amounts of data to discover anomalies in a business, and then realizes the value of the data by controlling those anomalies. Such scenarios suit fields like security and finance; when the approach is extended to other scenarios, the results are often unsatisfactory. Serving an industry scenario in depth requires deep involvement in that industry, which is costly and takes a long time.

Data and analysts are themselves costs: the cost of acquiring big data, the high cost of data scientists, the opportunity cost when analytical work fails, and the uncertainty over how much value the data will actually deliver. All of these directly affect big data projects. Whether the cost-to-value ratio can be kept within an acceptable range, and whether costs decline steadily over the long run, are key factors in corporate decision-making.

People are another constraint: the skills and analytical thinking of engineers, the difficulty of training and retaining data scientists, and the learning curve and cost of training young engineers all need to be considered.

Several milestones on the road to data value

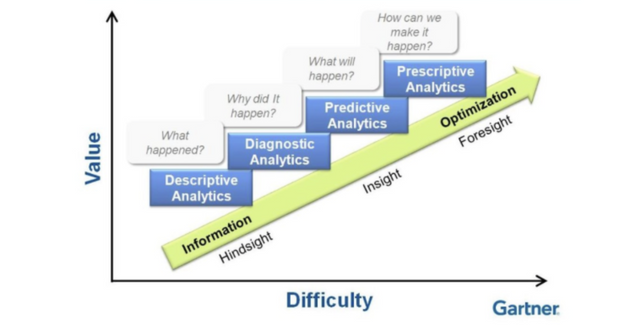

Gartner has a simple, clear model that ranks data analytics by difficulty, defining four levels that run from the difficulty of the analysis to the value it delivers. These four levels also work well as the four milestones on our path to exploring the value of data.

Descriptive analytics answers "what happened?" and is relatively simple. It usually distills big data into smaller, higher-value information, using aggregation to provide insights and reports on events that have already occurred.
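As a minimal sketch of the aggregation step, the Python below rolls hypothetical raw event records of the form (date, channel, revenue) up into one summary row per day. The records and the `summarize_by_day` helper are illustrative assumptions, not part of Gartner's model or any product.

```python
from collections import defaultdict

# Hypothetical raw event records: (date, channel, revenue).
events = [
    ("2016-05-01", "app", 12.0),
    ("2016-05-01", "web", 8.0),
    ("2016-05-01", "app", 5.0),
    ("2016-05-02", "web", 20.0),
]

def summarize_by_day(records):
    """Aggregate raw events into one row per day: event count and total revenue."""
    summary = defaultdict(lambda: {"events": 0, "revenue": 0.0})
    for day, _channel, revenue in records:
        summary[day]["events"] += 1
        summary[day]["revenue"] += revenue
    return dict(summary)

# Print a tiny daily report, sorted by date.
for day, row in sorted(summarize_by_day(events).items()):
    print(day, row["events"], row["revenue"])
```

Four raw events shrink to two report rows; at real scale the same idea turns billions of events into a daily dashboard.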

Diagnostic analytics answers "why did it happen?" Building on the description of events, it analyzes their causes in depth. It usually requires data with more dimensions and a longer time span, and it uncovers relationships between events and data through correlation analysis.
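Correlation analysis can be illustrated with the Pearson correlation coefficient. The sketch below assumes two hypothetical daily series (app crash counts and churned users); the data and the `pearson` helper are illustrative, and Pearson correlation is just one common choice of correlation measure.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical daily figures: app crash count vs. number of users who churned.
crashes = [2, 5, 1, 8, 3, 7]
churned = [10, 18, 7, 26, 12, 24]
print(round(pearson(crashes, churned), 3))
```

A coefficient close to 1 only flags a relationship worth investigating; establishing the actual cause still takes domain knowledge.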

Predictive analytics answers "what will happen?" It applies statistics, modeling, data mining, and machine learning techniques to recent and historical data to help analysts make predictions about the future.
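One of the simplest predictive techniques is fitting a least-squares linear trend to historical data and extrapolating it. The sketch below assumes a hypothetical monthly-active-users series; `fit_linear_trend` and `forecast` are illustrative helpers, and real predictive work would typically use richer models.

```python
def fit_linear_trend(values):
    """Ordinary least-squares fit of values against time steps 0..n-1.
    Returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def forecast(values, steps_ahead=1):
    """Extrapolate the fitted trend steps_ahead periods past the last observation."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + steps_ahead)

# Hypothetical monthly active users, in thousands.
mau = [100, 110, 121, 129, 140]
print(round(forecast(mau), 1))
```

Here the fitted slope is 9.9 thousand users per month, so the next month's forecast is about 149.7 thousand.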

Prescriptive analytics answers "what should we do?" It goes beyond analysis into execution, combining prediction, deployment, business rules, multiple predictive models, scoring, execution, and optimization rules into a closed-loop decision management capability.
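The closed loop of predict, decide, execute, and feed back can be sketched with a toy inventory example. Everything here is a hypothetical assumption for illustration: a moving-average forecast stands in for the predictive model, a single reorder rule stands in for business rules, and orders are assumed to arrive immediately.

```python
import math

def moving_average_forecast(history, window=3):
    """Predict next-period demand as the mean of the last `window` observations."""
    if not history:
        raise ValueError("need at least one past observation")
    recent = history[-window:]
    return sum(recent) / len(recent)

def decide_order(history, on_hand, safety_stock, window=3):
    """Business rule: order enough to cover predicted demand plus a safety buffer."""
    predicted = moving_average_forecast(history, window)
    return max(0, math.ceil(predicted + safety_stock - on_hand))

def simulate(initial_history, actual_demand, on_hand, safety_stock):
    """Closed loop: predict and decide, execute the order, observe real demand, feed it back."""
    history = list(initial_history)
    orders = []
    for demand in actual_demand:
        order = decide_order(history, on_hand, safety_stock)  # predict + apply rule
        on_hand += order                    # execute: order assumed to arrive at once
        on_hand = max(0, on_hand - demand)  # observe outcome; unmet demand is lost
        history.append(demand)              # feedback for the next prediction
        orders.append(order)
    return orders, on_hand

# Hypothetical weekly demand figures.
orders, remaining = simulate([10, 12, 14], [13, 15], on_hand=5, safety_stock=3)
print(orders, remaining)
```

With these numbers the loop orders 10 units and then 14, ending with 1 unit on hand. The point is the structure: each observed outcome flows back into the next prediction and decision.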

Past practice shows that more than 75% of data analysis scenarios are descriptive. Most of the data warehouses and BI systems that enterprises have built fall into this category, including daily operating reports, operational dashboards, and management cockpits. Diagnostic and predictive analytics are used mainly in specific scenarios such as recommendation and analysis of operational anomalies; their scope is narrower and their results are uneven. Prescriptive analytics, which connects analysis directly to execution, currently appears mainly in more specialized scenarios such as autonomous driving and robotics. In a business environment, realizing the value of data takes more than analysis: the real value comes from the business decisions and execution that follow the analysis.

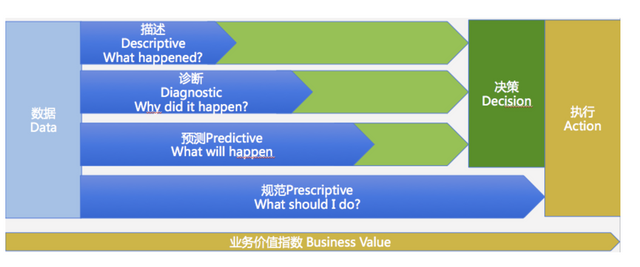

The figure above depicts the value path of data. The further to the right, the higher the business value the data delivers.

The light green and dark green parts of the figure are processes with heavy human involvement, in which people further interpret and work with the preceding analysis process and its results. In the past, IT-led era, these two parts were usually handled by the IT department in response to business requirements; the results were often poor and frequently criticized by business departments. In the era of big data, business departments are deeply involved and are gradually becoming the main users and innovators of data. Working from the analysis, business staff interpret, enrich, judge, and decide, and finally close the loop with execution, realizing the value of the data.

As a leading practitioner in realizing the value of big data, TalkingData has built its own capability map around this idea. Over several years of development, it has accumulated massive amounts of data. Its series of tool platforms for statistical analysis, operational analysis, advertising monitoring, and DMP (data management platform) provide the technical basis for descriptive, diagnostic, and predictive analytics. Its newly established professional data consulting team provides dedicated services to core customers, helping companies make business decisions with big data and supporting their big data innovation. Over the past year, TalkingData has also been building an open data ecosystem to deliver broader data value to customers.