Aicu the Curation Bot ~ Top Picks 18/05 - 02/06

Once again, here is the fortnightly summary of the picks made by my curation bot, aicu. Aicu only upvotes content similar to the excellent content selected by large curation networks such as curie, helpiecake and c-cubed.

I haven't had time yet to write about the actual decision factors the bot uses, but since I'll rework some parts of the bot soon, I'll write a post about them then. The bot uses a lot of sophisticated machine learning to classify each post, but it still makes mistakes. The top 10 and top 15 below are ranked directly by aicu's internal decision machinery, with no further processing on my part.

The General Overview

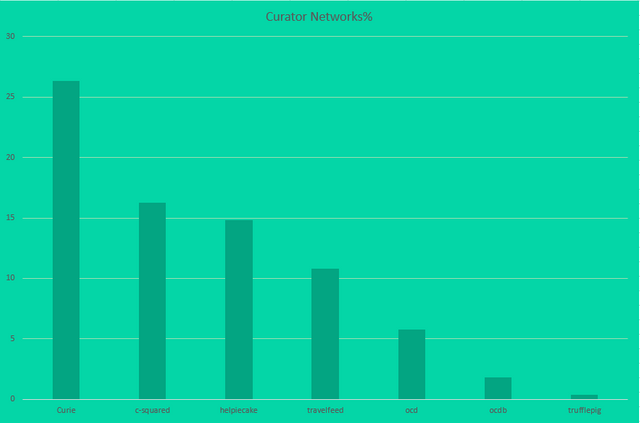

In those two weeks the bot voted on 277 posts, of which 42.5% were later selected by the larger curation networks. You can find a more detailed breakdown below.

The Curation Networks Selection

There's not much left to say: the following ten posts were selected by aicu and later by the large curation networks, and they are all excellent posts.

Aicu Gems ~ The Unselected

Now let's look at some authors whose posts aicu picked but which were not selected by the large curation networks. It's an excellent selection of articles which you should definitely check out.

Improving Aicu

I've been looking at a couple of ways to improve aicu's ability to detect high-quality content. One option is Latent Dirichlet Allocation (LDA), a Bayesian approach to topic modelling that places Dirichlet priors on each document's topic mixture and on each topic's word distribution. Another option is a more complex neural network for learning a doc2vec representation of each post. Both approaches should lead to improvements. Right now, I favour the LDA approach.
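To make the LDA idea a bit more concrete, here is a minimal sketch of LDA fitted with collapsed Gibbs sampling in plain Python. It is not aicu's code: the function name `lda_gibbs`, the hyperparameters `alpha` and `beta`, the iteration count and the toy documents are all assumptions for illustration. The per-post topic mixtures `theta` are the kind of features that could, presumably, be fed into a quality classifier.

```python
import random


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA with collapsed Gibbs sampling (illustrative sketch).

    docs: list of token lists. Returns (theta, phi, vocab) where theta[d][k]
    is the topic mixture of document d and phi[k][v] is the probability of
    vocabulary word v under topic k.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)

    # Count tables: document-topic, topic-word and per-topic totals.
    n_dk = [[0] * num_topics for _ in docs]
    n_kw = [[0] * V for _ in range(num_topics)]
    n_k = [0] * num_topics

    # Random initial topic assignment for every token.
    z = []
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_d.append(k)
            n_dk[d][k] += 1
            n_kw[k][word_id[w]] += 1
            n_k[k] += 1
        z.append(z_d)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = word_id[w]
                k = z[d][i]
                # Remove the current token from the counts.
                n_dk[d][k] -= 1
                n_kw[k][v] -= 1
                n_k[k] -= 1
                # Full conditional p(z = t | all other assignments), unnormalised.
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                # Add the token back with its newly sampled topic.
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][v] += 1
                n_k[k] += 1

    # Posterior mean estimates under the Dirichlet priors.
    theta = [
        [(n_dk[d][k] + alpha) / (len(doc) + num_topics * alpha) for k in range(num_topics)]
        for d, doc in enumerate(docs)
    ]
    phi = [
        [(n_kw[k][v] + beta) / (n_k[k] + V * beta) for v in range(V)]
        for k in range(num_topics)
    ]
    return theta, phi, vocab


# Hypothetical toy "posts": two about Steem, two about baking.
docs = [
    "steem blockchain witness vote steem".split(),
    "blockchain witness vote reward".split(),
    "recipe cake oven sugar cake".split(),
    "oven sugar flour recipe".split(),
]
theta, phi, vocab = lda_gibbs(docs, num_topics=2)
for row in theta:
    print([round(p, 2) for p in row])
```

A real setup would more likely use a library implementation, such as scikit-learn's `LatentDirichletAllocation` or gensim's `LdaModel`, on far more posts; the hand-written sampler is only here to show what the model estimates.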

Another feature, which has nothing to do with content selection, is the Natural Language Generation (NLG) aspect of aicu. I've been working on it so that the bot can comment on the selected content and tell the author why their post was selected. On top of that, the bot could write these summaries itself. This part consists of two modules.

Text Summary

The bot needs to be able to summarise a user's post down to its key points. Otherwise, it won't be able to create meaningful comments beyond "Great post" or "Great post about <main category>". This part is doable, as there are very good datasets for general document summarisation, for example the Gigaword dataset of news articles, where each article has a short summary on top. Using such data and some generative models, one should be able to train a concise summarisation model.
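The approach above is a generative (abstractive) model trained on article/summary pairs. As a much simpler point of comparison, here is a hedged sketch of a frequency-based extractive summariser, a different technique: it just keeps the sentences whose non-stopword words occur most often in the post. The function `summarise`, its tiny stopword list and the regex sentence splitter are assumptions for illustration, not part of aicu.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for the sketch.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it",
             "for", "on", "that", "this", "with", "as", "are", "was", "be"}


def tokens(text):
    """Lowercased word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarise(text, num_sentences=2):
    """Extractive baseline: return the top-scoring sentences in original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(tokens(text))

    def score(sentence):
        toks = tokens(sentence)
        # Average word frequency, so long sentences are not favoured automatically.
        return sum(freq[w] for w in toks) / (len(toks) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in keep)


post = ("Aicu is a curation bot. It upvotes good posts. "
        "The bot uses machine learning to find good posts. I like cake.")
print(summarise(post))
```

An extractive baseline like this can only copy sentences out of the post, which is why a generative model trained on article/summary pairs is the more attractive option for writing comments.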

Natural Language Generation

My biggest problem is the NLG module. I don't want to use a bullshit generator for this, so RNNs or HMMs are not an option for the core system. My current approach is a linguistically deep NLG module, which uses a logical representation of facts to realise sentences from them. I've covered the rough syntactic structure using a Probabilistic Context-Free Grammar (PCFG) trained on the Penn Treebank. My current issue is ensuring grammatical agreement at the word level: you can't ignore things like number, gender and case when generating sentences. I might be able to learn these relations and which parts of the syntax tree govern them. Or, if I'm lucky, I'll discover a database for an LFG (Lexical Functional Grammar) somewhere. Anyway, lots of work to be done.
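To illustrate the agreement problem, here is a minimal sketch of sampling from a PCFG whose nonterminals are split by grammatical number, so that rules violating subject-verb or determiner-noun agreement simply don't exist. The grammar is a hypothetical, hand-written toy, not the Penn Treebank model, and `GRAMMAR` and `generate` are illustrative names. Splitting categories like this blows up quickly once gender and case are added as well, which is one reason feature-based formalisms such as LFG handle agreement through unification instead.

```python
import random

# Hypothetical hand-written toy PCFG (NOT trained on the Penn Treebank).
# Nonterminals carry a number feature (_sg / _pl); the object NP inside a
# VP may have either number, but the subject NP and its verb always match.
GRAMMAR = {
    "S": [(0.5, ["NP_sg", "VP_sg"]), (0.5, ["NP_pl", "VP_pl"])],
    "NP_sg": [(0.6, ["Det_sg", "N_sg"]), (0.4, ["Det_sg", "Adj", "N_sg"])],
    "NP_pl": [(0.6, ["Det_pl", "N_pl"]), (0.4, ["Det_pl", "Adj", "N_pl"])],
    "VP_sg": [(0.7, ["V_sg", "NP_sg"]), (0.3, ["V_sg", "NP_pl"])],
    "VP_pl": [(0.7, ["V_pl", "NP_pl"]), (0.3, ["V_pl", "NP_sg"])],
    "Det_sg": [(1.0, ["this"])],
    "Det_pl": [(1.0, ["these"])],
    "Adj": [(0.5, ["detailed"]), (0.5, ["excellent"])],
    "N_sg": [(0.5, ["post"]), (0.5, ["author"])],
    "N_pl": [(0.5, ["posts"]), (0.5, ["authors"])],
    "V_sg": [(0.5, ["explains"]), (0.5, ["covers"])],
    "V_pl": [(0.5, ["explain"]), (0.5, ["cover"])],
}


def generate(symbol="S", rng=random):
    """Sample a word sequence top-down from GRAMMAR, starting at `symbol`."""
    if symbol not in GRAMMAR:  # anything without rules is a terminal word
        return [symbol]
    probs, expansions = zip(*GRAMMAR[symbol])
    expansion = rng.choices(expansions, weights=probs)[0]
    words = []
    for child in expansion:
        words.extend(generate(child, rng))
    return words


rng = random.Random(42)
for _ in range(3):
    print(" ".join(generate(rng=rng)).capitalize() + ".")
```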

Aside from those two points, I also need to do these updates weekly.
