Why DRM Robots Threaten Culture

In September 2012, the Hugo Awards were presented in Chicago at Chicon 7, the World Science Fiction Convention. This is a venerable event with much more longevity than you might imagine: the 2012 convention was the 70th, and the Hugo Awards were first presented in 1953 with Isaac Asimov as toastmaster. Like other flagship events in the creative industries, it was an important showcase for publishers and authors alike.

People all over the world were avidly watching the live broadcast of the ceremony. One of the award winners was Neil Gaiman, recognised for his script for the Doctor Who episode "The Doctor's Wife". After a clip from the episode was shown, Gaiman took the podium to make his acceptance speech.

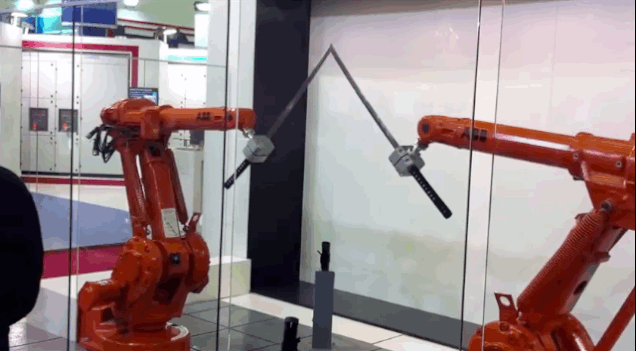

And then the broadcast was cut off. A robot at UStream, presumably using data provided by the BBC, decided on the basis of that short clip that this was an illegal broadcast of Doctor Who and pulled the plug. Worse, it turned out that no-one at the Hugo Awards or at UStream had the authority to turn it back on.

Nor was this an isolated problem. The same week, the Democratic National Convention in the US was also blacked out by an over-eager copyright enforcement robot acting on behalf of a long list of rights holders, and the same thing happened to NASA's own video of the Curiosity rover landing on Mars.

Discretion Quantised

This is an object lesson in the problem with entrusting the policing of copyright to software. Fundamentally, the use of copyrighted material is a matter of discretion and judgement. The clip shown at the Hugo Awards was legitimate by any measure: it was a fair use of an extract to illustrate a news item, and it had been supplied with the approval of the copyright holder. But recognising that required complex human judgement.

People talk of “fair use”, but what they actually mean is that every decision about using a copyrighted work depends on the exercise of judgement. Near the “bull's-eye” of copyright, where it was meant to apply (the origination of works by specialist producers), most people are clear what it means. But as legal scholar Lawrence Lessig eloquently explains in his excellent book Free Culture, in the outer circles of the target we have to make case-by-case judgements about which uses are fair and which are abuse. Those judgements are inevitably based on diverse, subjective evidence.

When a technologist encodes their own or their employer’s view of what’s fair in a technology-enforced restriction, any potential for the exercise of discretion is turned from a scale into a step, and freedom is quantised. That quantisation of discretion always serves the interests of whoever is forcing the issue.

Culture Imprisoned

These technology-imposed restrictions aren’t just a problem for now. When the quantised outlook and business model of one party replaces the spectrum of discretion, the natural consequence is that any scope for future reinterpretation of what counts as fair use is removed. Future uses of the content are reduced to the historic uses it had at the time it was locked into the technology wrapper (if even that: digital books, for example, generally cannot be lent to friends, and where they can, lending is treated as a limited privilege).

The law may change and society’s outlook may mature, but the freedom to use that content according to the new view will never emerge from the quantised state the wrapper imposes. The code becomes the law, as Lessig again explains in his book Code. While the concept of “fair use” is potentially flexible and forward-looking, “historic use” ossifies.

Thus calls for better robots that understand fair use are misguided and pointless. A robot that could successfully implement "fair use" is pure science fiction, and as a plot device it would be too implausible to win a Hugo. Any technology that applies restrictions to text, music, video or any other creative medium quantises discretion and inherently dehumanises culture.

We don't need better robots; we need copyright reform, so that copyright applies only to producers and not to consumers.

(Originally posted on ComputerWorld UK, but lost when they changed their CMS.)