RE: Curating for Value: How "Follower Network Strength" Improves Steem Post Ranking

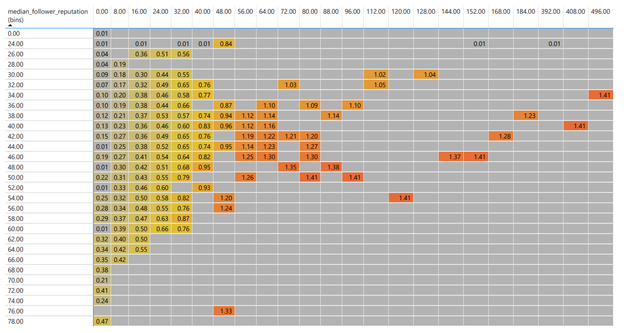

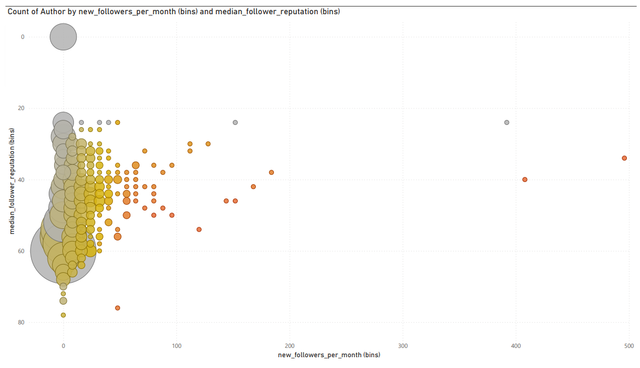

Here are two visualizations of all the accounts that posted or commented during a 24-hour period from early yesterday until early today, using the scoring that I posted last weekend. With my setup, it takes about 6 hours to collect and score a day's worth of accounts. The median score is about 0.225.

Followers / month across the X-axis, median follower reputations along the Y. Grey is the low end of the scale, orange is the top end.

In this visual, the bigger circles have more accounts in the range. The color gradients are the same as above.
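For anyone who wants to play with the same kind of view, here is a rough sketch of how the bucketing could work, assuming fixed-width bins. The bin widths (8 followers/month, 2 reputation points), the function names, and the sample rows are all placeholders for illustration, not the report's actual settings or data.

```python
from collections import Counter

def bin_floor(value, width):
    """Return the lower edge of the fixed-width bin that contains value."""
    return int(value // width) * width

def bucket_counts(accounts, x_width=8, y_width=2):
    """Count accounts per (followers/month bin, median reputation bin).

    The default widths are assumptions; the circle size in the chart
    would correspond to the count stored for each bucket.
    """
    counts = Counter()
    for followers_per_month, median_rep in accounts:
        key = (bin_floor(followers_per_month, x_width),
               bin_floor(median_rep, y_width))
        counts[key] += 1
    return counts

# Made-up rows: (followers per month, median follower reputation)
sample = [(50, 24.6), (49, 25.1), (0.4, 0.0), (3.2, 61.0)]
counts = bucket_counts(sample)
for bucket, n in sorted(counts.items()):
    print(bucket, n)
```

With these assumed widths, a real value of 50 followers/month is reported under the 48 bin, which is the kind of rounding to keep in mind when reading coordinates off the chart.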

Most of the circles outside the main cluster are size=1. The fair-sized circle at [0, 0] was sort of a surprise. I didn't check, but I'm guessing that the big groups with the 61 median rep and low follower counts are nearly all followed by the same account.

I was able to correctly guess the one in the [392-408, 24-26] bucket. I'm guessing that the 0.01 score tells us something about how the account got its ~33k followers.

I think the score already works quite well. The extreme cases at the edges (top and left) will probably be interesting and important for the "settings"... the clusters should be equalised via the "settings" so that they can be told apart more easily.

I find the value at [48,24] remarkable. Although the follower median is very low and the number of monthly followers is not very high, it has a high score. Presumably the above-average number of followers per month is compensating for the low median.

I also find it interesting that the score in the "columns" of the X-axis differs only slightly along the Y-axis. I'm not sure what this means. The score seems to be more influenced by the median than by the total number of followers. Does this indicate that, across all accounts, the number of new followers could be almost constant, and thus the age of the accounts (for active accounts) could have almost no influence?

Agreed. There's certainly still room for improvement, but this feels much better to me than the previous version. The big thing that I wanted to do was make it harder to get a top score, and I think I probably accomplished that. IMO, top scores should be pretty rare. In general, I also think that the overall distribution of scores is much better than before, but I can't be sure based on the spotty checking that I did with the May 18 version.

I just updated my Python script and Power BI report so that I can now collect a day's worth of comments, score them with both the old and new methods, and compare the visualizations. I'll run it overnight so that over the weekend I'll be able to see what the two methods look like side by side with the same set of accounts from a full day of posting/commenting.

Yeah, I'm not sure what I think about this one. On one hand, 48 followers per month (the real value is 50, but it's in the 48 bin) really is uncommonly high, so the score might not be unreasonable. On the other hand, the follower quality still seems low. I reduced the reputation cut-off back down from 30 to 25 in this version. I might bump it back up, at least part way.
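To make the cut-off trade-off concrete, here is a small sketch under one possible reading: followers below the cut-off are dropped before the median is taken. That reading is an assumption, since the thread doesn't spell out how the cut-off is applied, and the function name and reputation values are made up.

```python
from statistics import median

def median_follower_rep(reputations, cutoff=25):
    """Median reputation of followers at or above the cut-off.

    Assumption: followers below the cut-off are simply ignored.
    Returns 0.0 when no follower passes the cut-off.
    """
    kept = [rep for rep in reputations if rep >= cutoff]
    return median(kept) if kept else 0.0

# Made-up follower reputations for a single account
reps = [24, 25, 26, 28, 29, 31, 40, 61]

at_25 = median_follower_rep(reps, cutoff=25)
at_30 = median_follower_rep(reps, cutoff=30)
print(at_25, at_30)
```

Under this reading, raising the cut-off pushes the median up for accounts with many low-reputation followers, and an account with no qualifying followers falls to zero.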

This is kind of what I had in mind by switching to followers per month. The hope was that an author would need to keep gaining followers with similar reputations in order to maintain their score. If an account stops gaining followers (or slows down), then the score would decay. As implemented, it seems to me that new accounts might have a small advantage, but that might not be too bad. It could potentially give a little bit of a boost to new arrivals.
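The decay idea can be sketched with a simple rate calculation. This assumes the rate is just total followers divided by account age in 30-day months, with the age floored at one month; the floor, the function name, and the numbers are assumptions for illustration rather than the actual scoring code.

```python
def followers_per_month(follower_count, account_age_days):
    """Average followers gained per 30-day month over the account's life.

    Assumption: age is floored at one month so that very young
    accounts don't get an inflated rate from a tiny denominator.
    """
    months = max(account_age_days / 30.0, 1.0)
    return follower_count / months

# An account that gained 600 followers in its first 12 months,
# then stopped gaining followers entirely.
rates = [followers_per_month(600, age) for age in (360, 720, 1440)]

# A new account with a modest start, for comparison.
newcomer = followers_per_month(30, 30)

print(rates, newcomer)
```

The stalled account's rate keeps falling as it ages, while the newcomer's short history keeps its rate comparatively high, which matches the small advantage for new arrivals described above.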