INCREDIBLE AUTONOMOUS ARTIFICIAL INTELLIGENCE

The darkest secret of artificial intelligence: why does it do what it does?

No matter how good the predictions of deep learning are, no one knows how it reaches its conclusions. This fact is beginning to create a problem of trust, and the tools meant to solve it are not helping much. But maybe that is the nature of intelligence itself.

by Will Knight | translated by Teresa Woods | April 17, 2017

Last year, a strange autonomous car was released onto the quiet streets of Monmouth County (USA). The prototype, developed by researchers at the chip maker Nvidia, did not look very different from other autonomous cars, and yet it was unlike anything demonstrated by Google, Tesla or General Motors. It was also a demonstration of the increasing power of artificial intelligence (AI). The car did not follow instructions written by an engineer or a programmer. Instead, it depended on an algorithm that had learned to drive by observing a human driver.

Getting the car to learn to drive this way was an impressive feat. But it is also somewhat unsettling, since it is not clear how the car makes its decisions. The data collected by the vehicle's sensors go directly into a huge network of artificial neurons that process them. From that data, the computer decides what to do and sends the corresponding commands to the steering wheel, the brakes and other systems so that they carry them out. The result seems to match the behavior that would be expected of a human driver. But what if one day it did something unexpected, like hitting a tree or sitting motionless at a green light? As things stand, it might be hard to find out why it does what it does. The system is so complex that even the engineers who designed it may be unable to isolate the reason for any given action. And you cannot ask it: there is no obvious way to design such a system so that it can explain why it did what it did (see Blind trust is over, artificial intelligence should explain how it works).

The mysterious mind of this vehicle points to an increasingly palpable problem with artificial intelligence. The car's AI technology, known as deep learning, has been very successful at solving problems in recent years, and is increasingly used for tasks such as generating image captions, recognizing speech and translating languages. These same techniques could become capable of diagnosing deadly diseases, making multimillion-dollar trading decisions and transforming entire industries.

But this will not happen (or should not) unless we find ways to make techniques such as deep learning more understandable to their creators and accountable to their users. Otherwise, it will be hard to predict when failures might occur, and failures are inevitable. This is one of the reasons why the Nvidia car is still experimental.

Mathematical models are already being used to help determine who gets parole, who is eligible for a loan and who is hired to fill a vacant position. If you could access these mathematical models, it would be possible to understand their reasoning. But banks, the military and employers are turning to even more complex machine learning approaches that could be totally inscrutable. Deep learning, the most common of these approaches, represents a fundamentally different way of programming computers. "It's already a relevant problem, and it's going to be much more so in the future," says Tommi Jaakkola, a professor at the Massachusetts Institute of Technology (MIT) who works on machine learning applications, adding: "Whether it's an investment decision, a medical decision or a military one, nobody wants to have to depend on a 'black box' method."
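To make concrete what "understanding the reasoning" of a simple mathematical model can mean, here is a minimal sketch of a transparent scoring model. Everything in it is an assumption made for illustration: the feature names, the weights and the threshold are hypothetical and do not come from any real parole, lending or hiring system.

```python
# Hypothetical weights for an illustrative, transparent scoring model.
# None of these numbers come from a real lender; they are assumptions.
WEIGHTS = {
    "income_k": 0.5,           # points per thousand of yearly income
    "years_employed": 2.0,     # points per year in the current job
    "missed_payments": -10.0,  # penalty per missed payment
}
THRESHOLD = 40.0  # hypothetical minimum score for approval


def explain(applicant):
    """Return the score, the decision and each feature's contribution."""
    contributions = {
        name: WEIGHTS[name] * applicant[name] for name in WEIGHTS
    }
    score = sum(contributions.values())
    return score, score >= THRESHOLD, contributions


applicant = {"income_k": 60, "years_employed": 5, "missed_payments": 1}
score, approved, contributions = explain(applicant)
print("score:", score, "approved:", approved)
for name, value in contributions.items():
    # Each line shows exactly how much one input moved the decision.
    print(name, value)
```

Because the score is a plain weighted sum, anyone with access to the weights can see which input drove the outcome. A deep neural network offers no such per-feature breakdown by default, which is the contrast the paragraph above draws.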

There is already an argument that being able to interrogate an artificial intelligence system about how it reached its conclusions is a basic legal right. Starting in the summer of 2018, the European Union could require companies to give users an explanation of the decisions reached by automated systems. This may be impossible, even for apparently simple systems such as the apps and web pages that use deep learning to show ads and recommend songs. The computers that run those services have programmed themselves, and we do not even know how. Even the engineers who develop these apps are unable to fully explain their behavior.

This raises some disturbing questions. As the technology advances, we could cross a threshold beyond which using AI requires a leap of faith. Of course, humans cannot always truly explain our own cognitive processes either, but we find ways to trust and judge people intuitively. Is that also possible with machines that think and make decisions differently from humans? We have never before built machines that work in ways their creators do not understand. How well can we expect to get along and communicate with intelligent machines that could be unpredictable and inscrutable? These questions pushed me to embark on a journey to the frontier of research on artificial intelligence algorithms, from Google to Apple and many places in between, including a meeting with one of the great philosophers of our time.

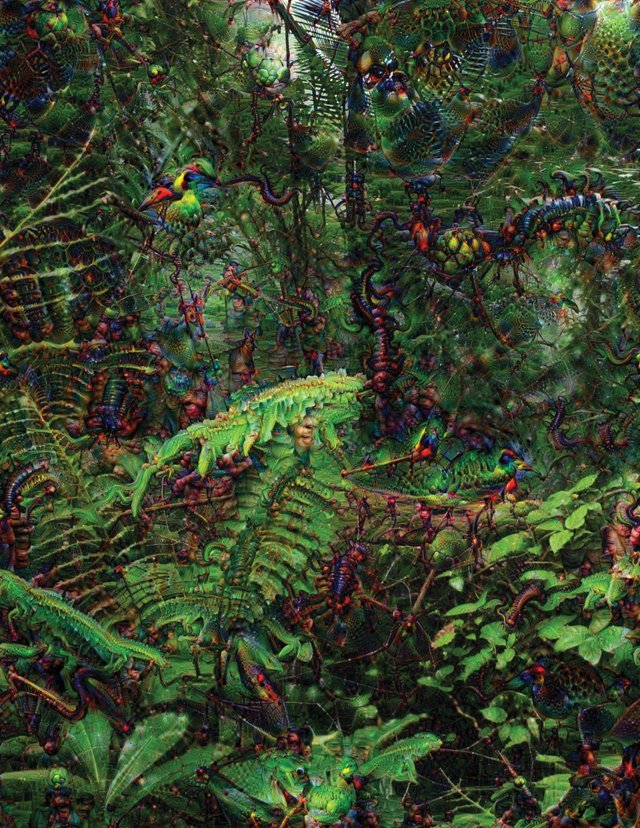

Photo: The artist Adam Ferriss created this image, and the one below, with the use of Google Deep Dream, a program that adjusts an image to simulate the pattern recognition capabilities of a deep neural network. The images were produced by an intermediate layer of the neural network. Credit: Adam Ferriss.

In 2015, a group of researchers at Mount Sinai Hospital in New York (USA) decided to apply deep learning to the hospital's vast database of patient records. This data set includes hundreds of variables, drawn from test results, medical consultations and much more. The resulting program, which the researchers called Deep Patient, was trained with data from around 700,000 individuals, and when tested on new records, it proved incredibly good at predicting disease. Without any training by experts, Deep Patient had discovered hidden patterns within the hospital data that seemed to indicate when people were about to develop a wide range of disorders, including liver cancer. There are many methods for predicting disease from a patient's medical history that perform "fairly well," according to team leader Joel Dudley. But, he adds: "This was just much better."

"We can make these models, but we do not know how they work."

At the same time, Deep Patient is somewhat disconcerting. It seems to anticipate the onset of psychiatric disorders such as schizophrenia quite well. But since schizophrenia is a disorder that doctors have a hard time predicting, Dudley did not understand how his algorithm got its results. He still does not. The new tool gives no clues about how it does it. If something like Deep Patient is going to help doctors, it would ideally share the reasoning that led to its prediction, both to reassure them that it is accurate and to justify, for example, a change in the medication a patient receives. With a somewhat sad smile, Dudley says: "We can develop these models, but we do not know how they work."

Artificial intelligence has not always been like that. From the beginning, there have been two schools of thought regarding how understandable, or explainable, AI should be. Many believed that it made sense to develop machines that reason according to rules and logic, which would make their inner workings transparent to anyone who wanted to examine the code. Others felt that intelligence would advance further if machines took inspiration from biology, and learned by observation and experience. This meant turning computer programming on its head. Instead of a programmer writing the commands to solve a problem, the program generates its own algorithm based on sample data and the desired result. The machine learning techniques that evolved to become today's most powerful AI systems followed the second path: basically, the machine programs itself.
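The contrast between the two schools can be sketched in a few lines. The example below is an illustration only, not the method used by any system mentioned in this article: it sets a hand-written rule next to a single artificial neuron (a classic perceptron) that derives an equivalent rule from sample data and the desired results. The function names, the toy task (output 1 only when both inputs are 1) and the training settings are all assumptions chosen for brevity.

```python
# First school: the programmer writes the logic explicitly,
# so anyone can read the code and see why an answer was given.
def rule_based(x1, x2):
    return 1 if (x1 == 1 and x2 == 1) else 0


# Second school: the program is given only sample inputs and the
# desired outputs, and adjusts its own weights until they agree.
def train_perceptron(samples, epochs=20, lr=1.0):
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            pred = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            err = target - pred  # 0 when the guess was right
            w1 += lr * err * x1
            w2 += lr * err * x2
            b += lr * err
    return w1, w2, b


def learned(weights, x1, x2):
    w1, w2, b = weights
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# Sample data plus desired result: this is all the learner ever sees.
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights = train_perceptron(samples)

for (x1, x2), _ in samples:
    print((x1, x2), rule_based(x1, x2), learned(weights, x1, x2))
```

Both versions give the same answers on this toy task, but only the first states its reasoning. The second stores it as three numbers. With one neuron those numbers can still be read, but a deep network has millions of them, which is where the opacity described in this article comes from.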

At first, this approach did not have many applications, and during the 1960s and 1970s it remained on the periphery of the field. Then the computerization of many industries and the arrival of big data renewed interest. That inspired the development of more powerful machine learning techniques, especially new versions of a technique known as the artificial neural network. By the end of the 1990s, neural networks could automatically digitize handwritten characters.

But it was not until the beginning of this decade, after several ingenious adjustments and refinements, that very large, or "deep," neural networks began to offer drastic improvements in automated perception. Deep learning is responsible for the current explosion of AI. It has endowed computers with extraordinary powers, such as the ability to recognize spoken words almost as well as a person can, a skill too complex to be coded by hand. Deep learning has transformed computer vision and greatly improved automated translation. It already contributes to all kinds of key decisions in medicine, finance, manufacturing and much more.
