Yes, Virginia, We *DO* Have a Deep Understanding of Morality and Ethics (Our #IntroduceYourself)

“I always rejoice to hear of your being still employed in experimental researches into nature and of the success you meet with. The rapid progress true science now makes, occasions my regretting sometimes that I was born too soon. It is impossible to imagine the height to which may be carried, in a thousand years, the power of man over matter. We may, perhaps, deprive large masses of their gravity, and give them absolute levity, for the sake of easy transport. Agriculture may diminish its labor and double its produce: all diseases may by sure means be prevented or cured, (not excepting even that of old age,) and our lives lengthened at pleasure, even beyond the antediluvian standard. Oh that moral science were in as fair a way of improvement, that men would cease to be wolves to one another, and that human beings would at length learn what they now improperly call humanity.”

Benjamin Franklin (1780, in a letter to Joseph Priestley)

We here at Digital Wisdom (http://wisdom.digital/wordpress/digital-wisdom-institute) have been studying artificial intelligence and ethics for nearly ten years. As a result, we are increasingly frustrated by both AI alarmists and well-meaning individuals (https://steemit.com/tauchain/@dana-edwards/how-to-prevent-tauchain-from-becoming-skynet) continuing to block progress with spurious claims like “we don’t have a deep understanding of ethics” or “the AI path leads to decreased human responsibility in the long run”.

The social psychologists (ya know, the experts in the subject) believe that they have finally figured out how to get a handle on morality. Rather than continuing down the problematic philosophical rabbit-hole of specifying the content of moral issues (e.g., “justice, rights, and welfare”), Chapter 22 of the Handbook of Social Psychology, 5th Edition gives a simple definition that clearly specifies the function of moral systems:

Moral systems are interlocking sets of values, virtues, norms, practices, identities, institutions, technologies, and evolved psychological mechanisms that work together to suppress or regulate selfishness and make cooperative social life possible.

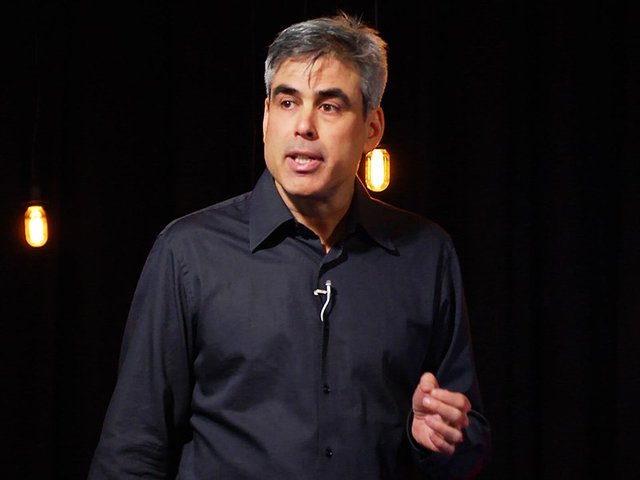

Jonathan Haidt (credit: TED Talk - How Common Threats Can Make Common Political Ground)

In essence, morality is trivially simple – make it so that we can live together. The biggest threat to that goal is selfishness – acts that damage other individuals or society so that the individual can profit.

Followers of Ayn Rand (as well as most so-called “rationalists”) try to blur the distinction between necessary, healthy self-interest and sociopathic selfishness. They throw up the utterly ridiculous strawmen of requiring self-sacrifice and not allowing competition. They attempt to redefine altruism out of existence. They will do anything to cloak their selfishness and hide it from the altruistic punishment that it is likely to generate. WHY? Because uncaught selfishness is “good” for the individual practicing it . . . OR IS IT?

credit - http://ratsinthebelfry.blogspot.com/2011_06_01_archive.html

Selfishness is a negative-sum action. Society as a whole ends up diminished with each selfish action. Worse, it frequently requires many additional negative-sum actions to cover up selfish actions. We hide information. We lie to each other. We keep open unhelpful loopholes so that we can profit. (And we seemingly *always* fall prey to the tragedy of the commons.)

Much of the problem is that some classes of individuals unquestionably *do* profit from selfishness – particularly if they have enough money to insulate themselves from the effects of the selfishness of others . . . OR DO THEY?

How much money does it actually take to reverse *all* of the negative effects upon someone who is obscenely rich – particularly the invisible ones like missed opportunities? It seems to us that no amount of money will make up for the cure for cancer coming one year too late.

Steve Omohundro, unfortunately, strongly made the case that selfishness is “logical” in his “Basic AI Drives”. Most unfortunate were his (oft-quoted by AI alarmists) statements that

Without explicit goals to the contrary, AIs are likely to behave like human sociopaths in their pursuit of resources.

Without special precautions, it will resist being turned off, will try to break into other machines and make copies of itself, and will try to acquire resources without regard for anyone else’s safety.

The problem here is that this is a seriously short-sighted “logic” . . . . What happens when everyone behaves this way? “Rationalists” claim that humans behave “correctly” solely because of peer pressure and the fear of punishment – but that machines will be more than powerful enough to be immune to such constraints. They totally miss that what makes sense in micro-economics frequently does not make sense when scaled up to macro-economics (cf. independent actions vs. cartels in the tragedy of the commons).
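To make the micro vs. macro point concrete, here is a minimal sketch of a commons-style payoff model. Everything in it is an assumption made for illustration: the ten herders, the payoff numbers, and the names `payoffs`, `PRIVATE_GAIN` and `SHARED_COST` are hypothetical and do not come from Omohundro or from any published model.

```python
# Toy "tragedy of the commons" payoff model. All numbers are hypothetical
# and chosen only to make the arithmetic easy to follow.
N_HERDERS = 10
BASE_PAYOFF = 20    # what each herder earns if nobody overgrazes
PRIVATE_GAIN = 6    # extra earned by a herder who overgrazes
SHARED_COST = 1     # damage each overgrazer inflicts on every herder


def payoffs(overgrazes):
    """Return each herder's payoff given a list of booleans (True = overgraze)."""
    damage = SHARED_COST * sum(overgrazes)  # borne by everyone, greedy or not
    return [BASE_PAYOFF + (PRIVATE_GAIN if greedy else 0) - damage
            for greedy in overgrazes]


all_restrained = payoffs([False] * N_HERDERS)
one_defector = payoffs([True] + [False] * (N_HERDERS - 1))
all_greedy = payoffs([True] * N_HERDERS)

for label, result in [("all restrained", all_restrained),
                      ("one defector", one_defector),
                      ("all greedy", all_greedy)]:
    print(f"{label:>15}: first herder {result[0]}, society total {sum(result)}")
```

Under these assumed numbers, the lone defector comes out ahead (25 instead of 20) while society's total drops from 200 to 196. When every herder follows the same "logic", each one ends up with 16, which is worse than the 20 they all had under restraint.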

credit - http://www.rhinostorugrats.com/love-is-not-selfish/

Why don’t we promote the destruction of the rain forests? As an individual activity, it makes tremendous economic sense.

As a society, why don’t we practice slavery anymore? We still have a tremendous worldwide slavery problem because, as a selfish action, it is tremendously profitable . . . .

And, speaking of slavery – note that such short-sighted and unsound methods are exactly how AI alarmists are proposing to “solve” the “AI problem”. We will post more detailed rebuttals of Eliezer Yudkowsky, Stuart Russell and the “values” alignment crowd shortly – but, in the meantime, we wish to make it clear that “us vs. them” and “humans über alles” are unwise as “family values”. We will also post our detailed design for ethical decision-making that follows the 5 S’s (Simple, Safe, Stable, Self-correcting and Sensitive to current human thinking, intuition, and feelings) and does provide algorithmic guidance for ethical decision-making (it doesn't always provide answers for contentious dilemmas like abortion and the death penalty, but it does identify the issues and correctly handles non-contentious ones).

We believe that the development of ethics and artificial intelligence and equal co-existence with ethical machines is humanity’s best hope

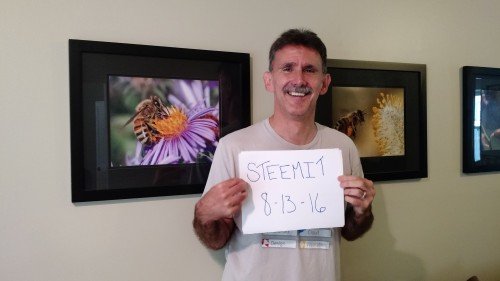

Digital Wisdom (http://wisdom.digital) is headed by Julie Waser and Mark Waser.

We are on Steemit because we fully endorse Dan's societal mission and feel that Steemit is the first platform where it is possible to crowd-source ethical artificial intelligence.

Crowd-sourcing AI (& Enabling Citizen Scientists) With STEEM & SteemIt Presentation

Safely Crowd-Sourcing Critical Mass for a Self-Improving Human-Level Learner/“Seed AI”

We are currently getting up to speed on Piston and looking to develop a number of pro-social tools and bots.

You'll be hearing more from us shortly!

Dear @digitalwisdom,

Your post has been selected by the @robinhoodwhale initiative as one of our top picks for curation today.

Learn more about the Robinhood Whale here!

The Steemit community looks forward to more amazing blogs from you. So, please keep on Steeming!

Good luck!

~RHW~

AWESOME! Thank you, thank you, thank you.

I would take great issue with your belief that social psychologists are "experts" in morality; psychology in particular is a pseudoscience of the first rank. If they are experts, how can they talk about "systems" of morals?

This is no more than the usual turf war going on in the scientific community over which discipline is going to be the new secular priestly caste.

This is based on nothing more than the disgraced and moribund peer review system, which cannot be trusted any more.

But it would be a sad day for humanity if the only people to speak on any subject were the experts in that subject, eh?

You're confusing social psychology with psychiatry/psychology.

Everyone should be able to speak on a subject but they should not make easily falsifiable claims about others. The AI fear-mongers claim that they are the only ones even looking at the problem. Reading the scientific journals makes it pretty clear that that is not the case . . . .

As you know from my post, I don't fear AI. I don't spread "fear" of AI. I promote IA, which I deem safer for human beings than AI. I support AI in the narrow sense, such as weak AI. Strong AI is the only kind of AI that I think we should be extremely cautious about, just as we would be if trying to make first contact with an alien intelligence.

But AI in the sense that it becomes self aware, and may be smarter than us? I don't think we should rush to build that before we enhance our own ability to think. We should have a much deeper understanding about what life is before we go trying to create what could be defined as an alien intelligence, which might or might not be hostile, for reasons we might or might not be capable of understanding.

Why do we need to create an independent strong AI when we can just create IA and cyborgs, and get many of the benefits with a lot less risk? You can cite social psychologists, but you haven't cited any security engineers or people in cybersecurity, who are the people who care about the risks.

Here is a risk scenario:

China develops in secret a strong AI to protect the state of China. The Chinese people are then over time evolved to plug into and become part of this AI, but lose all free will in the process. In essence, they become remotely controlled humans to the AI, and the AI simply uses their bodies.

This scenario is a real possibility in an AI situation which goes wrong. Because we have no way to know what the AI would decide to do, or what it could convince us to do, we don't know if we'd maintain control of our bodies or our evolution in the end. It's not even decided by science that free will exists, so why would an AI believe in free will? A simple mistake like that could wipe out free will for all humans and all mammals, and it could be gone for good if the AI is so much smarter that we can't ever compete.

Now I'm not going to go into the philosophy of whether or not free will exists; I am only stating that it's not a scientific fact that it exists. So an AI might conclude it doesn't exist.

I am speaking about psychology, that is true, but doesn't social psychology come under the banner of psychology? I never mentioned psychiatry. As for easily falsifiable claims, don't you mean easily disproved? I would define falsifiability as scientific experimentation to prove that something is true, not to disprove it.

Do you know of Orch-OR, the Penrose/Hameroff theories? They have a different view of AI, have you read up on them?

Eagerly awaiting my AI Sex Slave (I mean AI Overlord).

Welcome, welcome! I am looking forward to hearing from you.

Big problems with this, even if we look past the silly complaining about "alarmists" etc.

You're making a common and simple mistake about moral theory; namely you're ignoring the issue that descriptive claims about morality are not the same as prescriptive claims about morality. The mere fact that morality functions as a method for people in society to cooperate with each other doesn't do anything to demonstrate that we are actually only obligated to cooperate with each other. What we're actually concerned with is what moral values people (or machines) should follow.

Your evasion of this issue is especially funny because of your claim to have the backing of "experts" on the subject - social psychologists? Sorry, the experts on the subject of morality are moral philosophers, and I can't think of any who would agree that the only thing of moral importance is just getting people to cooperate with each other. Don't we want more out of life than just to cooperate? What does "cooperate" even mean? Which moral transgressions do or don't count as violations of cooperation? What about animals and little children and people in comas and future generations and the legacies of the deceased - how do we cooperate with them? And people don't cooperate, so given that we're in a world without universal cooperation, what should we do? To what extent are we obliged to sacrifice our personal interests in the name of 'cooperation'? What about aesthetics? What about value theory? How is cooperation to even be measured and formalized? All big questions which need to be answered, and which can't be answered without addressing the work of actual moral philosophers (you know, the experts in the subject).

Now you don't like this, because it's a "rabbit hole", but morality isn't easy. (It is, after all, a hard problem.) That's the whole point. And rather than demonstrating that morality isn't a hard problem, you're saying that we can just lift a descriptive definition out of a dictionary, worship it like it's a categorical imperative and pretend like morality isn't a problem anymore.

Moreover, even if your avoidance of the distinction between prescriptive and descriptive morality were a good approach, you'd still be wrong. Human society is more complicated than evolutionary reciprocation and scenarios cut out of game theory textbooks. Morality is not merely a method of achieving cooperation, it's a source of power in society and a consequence of concrete social relations. Even if you want to restrict your domain to the descriptive side of morality, you will need to deal with tons of work in sociology and philosophy which shows how our moral views are influenced by our cultural and economic conditions and serve various roles in defining our roles in society.


Mark Waser knows I DO NOT agree with the opinion that there is a universal right and wrong which any group of experts can figure out. Right and wrong is inherently subjective because the consequences are subjective. Right for Alice could be wrong for Bob. It's only when right for Alice is also right for Bob that they can agree something is right. But it doesn't mean Gordon will agree with either of them, because right for him could be wrong for them both.

So the point? We have competing interests, and while you might be able to try to determine what is right for all life on earth, it is doubtful you will be able to do this without knowing the preferences of all life on earth. No group of humans currently has the information processing capability to understand the preferences of all humans, let alone all lifeforms on earth, to be able to determine what is best for life on earth.

And then what is best for life in the universe? There could be life in places other than earth with different interests. Should our AI be concerned only with earth? Even if you create a Gaia AI, it doesn't mean much when you don't know anything about what else is out there.

There are no moral experts

And that includes me. I don't believe I can determine what is best for anyone. I don't even claim to know what is best for myself. I accept my ignorance, and understand my ignorance is based on my very limited human ability to process information. The only way to reduce my ignorance and make better decisions is to enhance my ability to decide. This is why decision support is, in my opinion, of greater importance right now than recklessly trying to create AI when we don't even understand the big data we have collected. We should probably figure out how to reduce ignorance before trying to make sense of AI ethics, and this means a cautious, human-centric approach to AI until we truly understand the consequences of our actions.