That is a good way to think about morals, assuming they evolved from a survival-utility point of view.

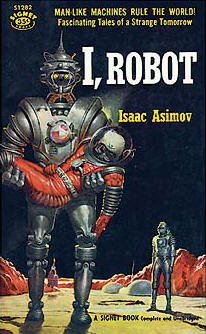

Asimov's Three Laws of Robotics assume that all properly engineered robots would have this set of morals encoded into their firmware:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Of course, if humans were created, the definition of morals is "whatever their Creator approves of." From that point of view, morality is already encoded into our "firmware".

Indeed, when people who do not have the law, do by nature things required by the law, they are a law for themselves, even though they do not have the law. They show that the requirements of the law are written on their hearts, their consciences also bearing witness, and their thoughts sometimes accusing them and at other times even defending them. - Romans 2:14-15

Thank you for your feedback. I love Isaac Asimov, especially the Foundation series; I can't find sci-fi like that anymore.

The creator approach to morals unfortunately never seemed very self-consistent to me. First, it poses the problem of a morality that evolves in adjustment to the environment. Human morals have changed substantially over the centuries, and those changes seem more likely to come from our own need for adaptation and from the growing knowledge amassed as parents pass their experiences on to their children, which ultimately culminates in culture and society.

Also, a creator who, like Asimov, designs rules by which his creations operate would seem foolish to me if he then allowed those creatures to break those same rules of their own free will.

There is an interesting story in 'I, Robot' where he explored this inconsistency: an AI took actions to imprison all mankind as the only logical way to ensure that no harm could come to them.

Most of the fun in artificial intelligence is not in programming a robot to do exactly what you want it to do. That's so 1950s. The fun is in creating autonomous systems and giving them the free will to interact with a complex environment.

If you want to evolve a physically well adapted species, you put them in an environment where there is the freedom and penalties necessary for physical evolution to occur.

If you want to evolve a spiritually well adapted species, you ...

Funnily enough, AI was my specialisation in Computer Science. I like your perspective here, Stan.

Genetic algorithms are nice to use when you are unable to encode the exact rules into your agent because they are too complex. So one basically just programs a bunch of possible behaviours and leaves their parameters open to the GA forces of selection, mutation and crossover. It's not so much that penalties happen; it's more that maladaptations get pruned out more and more.

This path would mean, however, that the 'moral rules' would be evolved and soft-coded, not pre-designed.
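To make the selection, mutation and crossover loop concrete, here is a minimal sketch of a genetic algorithm. Everything in it is an assumption made for illustration: the three behaviour parameters, the `fitness` function with its arbitrary target values, and the population and mutation settings are all hypothetical, not something from a real agent.

```python
import random

# Hypothetical target: stands in for "whatever the environment rewards".
TARGET = [0.2, 0.8, 0.5]

def fitness(params):
    # Higher is better; 0 would be a perfect match to the assumed target.
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))

def crossover(a, b, rng):
    # Single-point crossover: head of one parent, tail of the other.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(params, rng, rate=0.1, scale=0.1):
    # Each parameter has a small chance of a Gaussian nudge, clamped to [0, 1].
    return [
        min(1.0, max(0.0, p + rng.gauss(0, scale))) if rng.random() < rate else p
        for p in params
    ]

def evolve(pop_size=30, generations=50, seed=0):
    rng = random.Random(seed)
    population = [[rng.random() for _ in TARGET] for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Selection: the worse half is pruned, nobody is explicitly "punished".
        survivors = population[: pop_size // 2]
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population[0], history

if __name__ == "__main__":
    best, history = evolve()
    print("best parameters:", best)
    print("best fitness:", history[-1])
```

Note that nothing in the loop states what the "right" parameters are. The rules that survive are simply whatever the fitness function happens to favour, which is the sense in which they are soft-coded.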

Perhaps. I'm just saying that in all of the 17 autonomous air, ground, sea, and space systems I've ever designed, there was some mix of hard-coded non-negotiable rules (like don't point your antenna away from Earth) and other code designed to deal with uncertainties that couldn't be preprogrammed. My only point was to suggest we shouldn't be dogmatic about what a Creator would or wouldn't do.
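As a rough illustration of that mix, here is a minimal sketch in which an adaptive component proposes an antenna heading and a hard-coded rule has the final say. The function name `choose_heading`, the 10-degree tolerance, and the idea of expressing the rule as an angular clamp are all assumptions made for this example, not a description of any real flight software.

```python
def choose_heading(proposed_deg, earth_deg, max_off_deg=10.0):
    """Return the heading actually commanded, in degrees [0, 360).

    proposed_deg: heading suggested by the adaptive (soft-coded) part.
    earth_deg:    current direction of Earth.
    max_off_deg:  hypothetical hard limit on how far off Earth we may point.
    """
    # Signed smallest angular difference, in the range [-180, 180).
    diff = (proposed_deg - earth_deg + 180.0) % 360.0 - 180.0
    # Non-negotiable rule: never exceed the allowed offset from Earth.
    clamped = max(-max_off_deg, min(max_off_deg, diff))
    return (earth_deg + clamped) % 360.0
```

The adaptive part can be as clever or as evolved as you like. Whatever it proposes, a request to point 60 degrees off Earth comes back as 10 degrees off Earth.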

If my Mars Rover could really think, it would probably be asking, "Why did my creator put me in such a hostile environment?" I'd feel bad if my creation rejected me just because it couldn't figure out what I'm up to and evolved a set of morals that caused it to rebel. Come to think of it, that might be the real reason why Viking 1 permanently cut off all communication by pointing its antenna somewhere else in a huff. :)