Inside Your Digital SLR, Part 3

Sensors Up Close

In the broadest terms, a digital camera sensor is a solid-state device that is sensitive to light. When photons are focused on the sensor by your dSLR’s lens, those photons are registered and, if enough accumulate, are translated into digital form to produce an image map you can view on your camera’s LCD and transfer to your computer for editing. Capturing the photons efficiently and accurately is the tricky part.

There’s a lot more to understand about sensors than the number of megapixels. There are very good reasons why one 15-megapixel sensor and its electronics produce merely good pictures, whereas a different sensor in the same resolution range is capable of sensational results. This section will help you understand why, and how you can use the underlying technology to improve your photos. Figure 1.4 shows a composite image that represents a typical dSLR sensor.

Figure = A digital SLR sensor looks something like this.

There are two main types of sensors used in digital cameras: CCD (for charge-coupled device) and CMOS (for complementary metal-oxide-semiconductor). Fortunately, today there is little need to understand the technical differences between them, or even which type of sensor resides in your camera. Early in the game, CCDs were the imager of choice for high-quality image capture, while CMOS chips were the “cheapie” alternative used for less critical applications. Today, technology has advanced so that CMOS sensors have overcome virtually all the advantages CCD imagers formerly had. CMOS has become the dominant image capture device, and only a few cameras still use CCDs.

Capturing Light

A sensor’s area is divided into picture elements (pixels) in the form of individual photosensitive sites (photosites) arranged in an array of rows and columns. The number of rows and columns determines the resolution of the sensor. For example, a typical 21-megapixel digital camera might have 5,616 columns of pixels horizontally and 3,744 rows vertically. This produces 3:2 proportions (or aspect ratio), which allow printing an entire image on 6 × 4-inch (more typically called 4 × 6-inch) prints.
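If you want to check that arithmetic yourself, multiplying columns by rows gives the pixel count, and dividing both dimensions by their greatest common divisor reduces them to the aspect ratio. Here’s a minimal Python sketch; `describe_sensor` is a hypothetical helper name, not a function from any camera or imaging software:

```python
from math import gcd


def describe_sensor(columns: int, rows: int) -> tuple[float, str]:
    """Return (megapixels, reduced aspect ratio) for a grid of photosites."""
    megapixels = columns * rows / 1_000_000
    divisor = gcd(columns, rows)  # largest number that divides both dimensions
    ratio = f"{columns // divisor}:{rows // divisor}"
    return megapixels, ratio


mp, ratio = describe_sensor(5616, 3744)
print(f"{mp:.1f} MP, {ratio}")  # 21.0 MP, 3:2
```

The same 3:2 ratio is why a full frame fits a 4 × 6-inch print without cropping: 6 divided by 4 is also 1.5.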

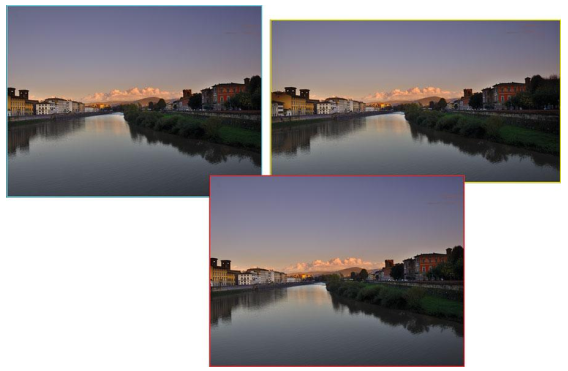

While a 3:2 aspect ratio is most common on digital SLRs, it is by no means the only one used. So-called Four Thirds cameras from vendors like Olympus and Panasonic use a 4:3 aspect ratio; the Olympus E-5, for example, is a 12.3-megapixel camera with a sensor “laid out” in a 4032 × 3024 pixel array. Some dSLRs have a “high-definition” crop mode for still photos that uses the same 16:9 aspect ratio as your HDTV. Figure 1.5 compares these proportions.
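A crop mode simply discards some rows (or columns) of photosites. As a rough sketch, assuming the camera keeps the largest crop that fits while preserving either the full width or the full height (actual cameras may handle this differently), this hypothetical `crop_to_aspect` function works out the dimensions of a 16:9 crop from the 3:2 sensor described earlier:

```python
def crop_to_aspect(columns: int, rows: int, ratio_w: int, ratio_h: int) -> tuple[int, int]:
    """Dimensions of the largest crop with the requested aspect ratio that fits the sensor.

    Assumes the crop keeps either the full width or the full height; this is an
    illustrative model, not any manufacturer's documented behavior.
    """
    rows_needed = columns * ratio_h // ratio_w  # height required if the full width is kept
    if rows_needed <= rows:
        return columns, rows_needed  # wider format: rows are dropped
    return rows * ratio_w // ratio_h, rows  # narrower format: columns are dropped


w, h = crop_to_aspect(5616, 3744, 16, 9)
print(w, h, f"{w * h / 1e6:.1f} MP")  # 5616 3159 17.7 MP
```

With these numbers, the 16:9 frame keeps 3,159 of the 3,744 rows, or roughly 84 percent of the sensor’s photosites.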

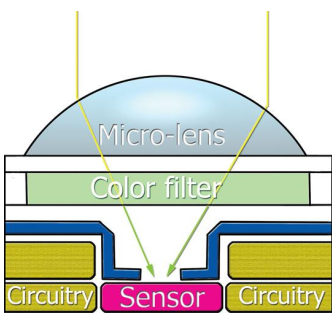

Digital camera sensors use microscopic lenses to focus incoming beams of photons onto the photosensitive areas on each individual pixel/photosite on the chip’s photo diode grid, as shown in Figure 1.6. The microlens performs two functions. First, it concentrates the incoming light onto the photosensitive area, which constitutes only a portion.

Figure = Typical sensor aspect ratios include 3:2 (upper left), 16:9 (upper right), and 4:3 (lower right).

Figure = A microlens on each photosite focuses incoming light onto the active area of the sensor.

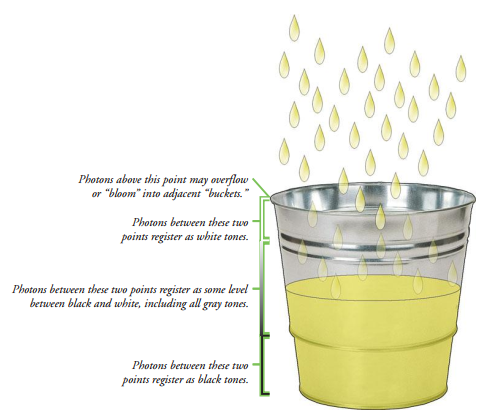

Figure = Think of a photo site as a bucket that fills up with photons.

of the photosite’s total area. (The rest of the area, in a CMOS sensor, is dedicated to circuitry that processes each pixel of the image individually. In a CCD, the sensitive area of individual photosites relative to the overall size of the photosite itself is larger.) In addition, the microlens corrects the relatively “steep” angle of incidence of incoming photons when the image is captured by lenses originally designed for film cameras.

Lenses designed specifically for digital cameras are built to direct the light from the edges of the lens onto the photosites at a less oblique angle; older lenses may direct the light at such a steep angle that it strikes the “sides” of the photosite “bucket” instead of the active area of the sensor itself.

As photons fall into this photosite “bucket,” the bucket fills. If, during the exposure, the bucket receives a certain minimum number of photons, called a threshold, then that pixel records a value other than pure black. If too few photons are captured, that pixel registers as black. The more photons that are grabbed, the lighter the pixel becomes, until they reach a certain level, at which point the pixel is deemed white (and no amount of additional photons can make it “whiter”). In-between values produce shades of gray or, because of the color filters used, various shades of red, green, and blue.
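The bucket behavior described above can be sketched as a toy model in Python. The `threshold` and `full_well` values here are made-up illustrative numbers, not the specifications of any real sensor, and a real camera applies considerably more processing before you see a tone on the LCD:

```python
def photosite_value(photons: int, threshold: int = 50,
                    full_well: int = 40_000, levels: int = 256) -> int:
    """Toy bucket model: map a photon count to a tone from 0 (black) to levels - 1 (white).

    threshold and full_well are illustrative values, not real sensor specs.
    """
    if photons < threshold:
        return 0  # too few photons: the pixel stays black
    if photons >= full_well:
        return levels - 1  # bucket is full: pure white, extra photons change nothing
    # In between, the tone rises in proportion to the photons collected.
    return round((photons - threshold) / (full_well - threshold) * (levels - 1))


for count in (20, 10_000, 40_000, 90_000):
    print(count, photosite_value(count))
# 20 0
# 10000 64
# 40000 255
# 90000 255
```

Note how 40,000 and 90,000 photons produce the same pure white: once the bucket is full, the extra light is simply lost.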

If too many photons fall into a particular bucket, they may actually overflow, just as water might spill from a real bucket, and pour into the surrounding photosites, producing the unwanted flare known as blooming. The remedy for this overfilling is to drain off some of the excess before the photosite overflows, which is something that CMOS sensors, with individual circuits at each photosite, are able to do.
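To visualize blooming, here is a toy one-dimensional model of a single row of photosites, written in Python. It assumes that overflow spills equally into the left and right neighbors in a single pass, and the `drain` option is a hypothetical stand-in for an anti-blooming drain that simply discards the excess:

```python
def expose_row(photon_counts: list[int], full_well: int, drain: bool = False) -> list[int]:
    """Toy 1-D blooming model for one row of photosites.

    Each photosite holds at most full_well. Without a drain, any excess spills
    half to the left neighbor and half to the right (one pass only; spill that
    would overfill a neighbor is simply lost). With drain=True, the excess is
    discarded instead, as an anti-blooming drain would do.
    """
    filled = [min(count, full_well) for count in photon_counts]
    if drain:
        return filled  # excess drained away; neighbors are untouched
    for i, count in enumerate(photon_counts):
        excess = max(count - full_well, 0)
        for j in (i - 1, i + 1):
            if 0 <= j < len(filled):  # skip neighbors beyond the ends of the row
                filled[j] = min(filled[j] + excess // 2, full_well)
    return filled


row = [100, 100, 5000, 100, 100]  # one very bright spot in the middle
print(expose_row(row, full_well=1000))              # [100, 1000, 1000, 1000, 100]
print(expose_row(row, full_well=1000, drain=True))  # [100, 100, 1000, 100, 100]
```

Without the drain, the single bright spot turns its dim neighbors white as well, which is exactly the smeared flare you see around bright highlights.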

Of course, now you have a bucket full of photons, all mixed together in analog form like a bucket full of water. What you want, though, in computer terms, is a bucket full of ice cubes, because individual cubes (digital values) are easier for a computer to manage than an amorphous mass of liquid. The first step is to convert the photons into something that can be handled electronically, namely electrons. The analog electron values in each row of the sensor array are then converted from analog (“liquid”) form to digital (the “ice cubes”), either right at the individual photosites or by additional circuitry adjacent to the sensor itself.
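The “ice cube” step is essentially quantization: each analog charge is mapped to one of a fixed number of digital levels. This Python sketch assumes a 12-bit converter (4,096 levels) and a simple linear mapping from an empty to a full bucket; both are illustrative assumptions rather than the design of any particular camera:

```python
def quantize(electrons: float, full_well: float, bits: int = 12) -> int:
    """Map an analog charge (the 'liquid') to one of 2**bits digital levels (the 'ice cubes')."""
    max_code = 2 ** bits - 1
    # Keep the charge within what the bucket can physically hold.
    clipped = min(max(electrons, 0.0), full_well)
    # Scale linearly and round down to the nearest whole level.
    return int(clipped / full_well * max_code)


print(quantize(20_000, 40_000))          # 2047 (12-bit)
print(quantize(20_000, 40_000, bits=8))  # 127 (8-bit)
```

More bits means more, finer “ice cubes,” so subtle gradations between neighboring tones are less likely to be lumped into the same digital value.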

Noise and Sensitivity

One undesirable effect common to all sensors is visual noise, that awful graininess that shows up as multicolored specks in images. In some ways, noise is like the excessive grain found in some high-speed photographic films. However, while photographic grain is sometimes used as a special effect, it’s rarely desirable in a digital photograph.

Unfortunately, there are several different kinds of noise, and several ways to produce it. The variety you are probably most familiar with is introduced while the electrons are being processed, and it is described by something called the “signal-to-noise” ratio. Analog signals are prone to this defect, because the actual information available is obscured amid all the background fuzz. When you’re listening to a CD in your car and then roll down all the windows, you’re adding noise to the audio signal. Increasing the CD player’s volume may help a bit, but you’re still contending with an unfavorable signal-to-noise ratio that probably masks tones (especially higher treble notes) that you really wanted to hear.

The same thing happens during long time exposures, or if you boost the ISO setting of your digital sensor. Longer exposures allow more photons to reach the sensor, increasing your ability to capture a picture under low-light conditions. However, longer exposures also increase the likelihood that some pixels will register random phantom photons, often because the longer an imager is “hot,” the warmer it gets, and that heat can be mistaken for photons. This kind of noise is called long exposure noise.
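To put rough numbers on long exposure noise, this Python sketch uses a common simplified noise model: photon noise and thermal (dark-current) noise each grow as the square root of their electron counts, and read noise from the electronics adds on top. The dark-current rate and read noise values are made up for illustration, and the model holds the dark-current rate constant even though, as described above, it climbs as the sensor warms:

```python
import math


def snr(signal_e: float, exposure_s: float, dark_e_per_s: float, read_noise_e: float) -> float:
    """Signal-to-noise ratio for one photosite under a simplified noise model.

    Photon noise and dark-current noise follow Poisson statistics, so their
    variances equal their electron counts; read noise adds in quadrature.
    """
    dark_e = dark_e_per_s * exposure_s  # thermal "phantom" electrons pile up with time
    noise = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise


# The same 1,000 electrons of real signal, gathered in 1 second or over 5 minutes.
# 2 electrons/second of dark current and 5 electrons of read noise are illustrative values.
print(f"{snr(1000, 1, 2, 5):.1f}")    # 31.2
print(f"{snr(1000, 300, 2, 5):.1f}")  # 24.8
```

Gathering the same amount of real light over a much longer time lowers the ratio, because the extra thermal electrons add noise without adding any picture information.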

There’s a second type of noise, too: so-called high ISO noise. Increasing the ISO setting of your camera raises its sensitivity, effectively lowering the threshold so that fewer and fewer photons are needed to register as an exposed pixel. Yet that also increases the chances of one of those phantom photons being counted among the real-life light particles. The same thing happens when the analog signal is amplified: you’re strengthening the image information in the signal but boosting the background fuzziness at the same time. Tune in a very faint or distant AM radio station on your car stereo. Then turn up the volume. After a certain point, turning up the volume further no longer helps you hear better. There’s a similar point of diminishing returns for digital sensor ISO increases and signal amplification, as well.
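The diminishing returns are easy to see with a little arithmetic. In this simplified Python sketch (an illustrative model with made-up electron counts), the amplifier multiplies the signal and the noise already riding on it by the same gain, while some additional noise enters after the amplifier and is not boosted:

```python
import math


def amplified_snr(signal_e: float, noise_e: float, gain: float, downstream_noise: float) -> float:
    """SNR after amplification in a simplified model.

    The gain multiplies the signal and the noise already in it equally;
    downstream_noise, added after the amplifier, is not multiplied.
    """
    signal_out = signal_e * gain
    noise_out = math.sqrt((noise_e * gain) ** 2 + downstream_noise ** 2)
    return signal_out / noise_out


# A faint signal of 100 electrons carrying 12 electrons of noise (illustrative values).
for gain in (1, 4, 16):
    print(gain, f"{amplified_snr(100, 12, gain, 20):.2f}")
# 1 4.29
# 4 7.69
# 16 8.29
```

Each increase in gain helps less than the one before, and no amount of gain can push the ratio past 100/12, about 8.3, the signal-to-noise ratio that existed before amplification. That ceiling is the digital equivalent of the faint AM station that never gets clearer no matter how far you turn up the volume.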

Regardless of the source, it’s all noise to you, and you don’t want it. Digital SLRs have noise reduction features you can use to help minimize this problem.

Dynamic Range

The ability of a digital sensor to capture information over the whole range from darkest areas to lightest is called its dynamic range. You take many kinds of photos in which an extended dynamic range would be useful. Perhaps you have people dressed in dark clothing standing against a snowy background, or a sunset picture with important detail in the foreground, or simply an image with important detail in the darkest shadows. Or perhaps you are taking a walk in the woods and want to photograph both some brightly lit treetops and the forest path that is cloaked in shadow, as in Figure 1.8. If your digital camera doesn’t have sufficient dynamic range or built-in HDR, you won’t be able to capture detail in both areas in one shot without resorting to tricks like Photoshop’s Merge to HDR Pro (High Dynamic Range) feature.

Sensors have some difficulty capturing the full range of tones that may be present in a scene. Tones that are too dark won’t provide enough photons to register in the sensor’s photosite “buckets,” producing clipped shadows, unless you specify a lower threshold or amplify the signal, either of which increases noise.

Figure = A wide dynamic range allows you to capture the forest path and brightly lit canopy of leaves (left), whereas such a broad array of tones is beyond the capabilities of digital cameras with a narrower dynamic range (right).

Very light tones are likely to provide more photons than the bucket can hold, producing clipped highlights and overflowing to the adjacent photosites to generate blooming. Ideally, you want your sensor to be able to capture very subtle tonal gradations throughout the shadows, midtones, and highlight areas.
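Dynamic range is often expressed in stops, where each stop is a doubling of light. A common rough estimate divides the bucket’s capacity by the noise floor and takes the base-2 logarithm of the result. In this Python sketch, the 40,000-electron capacity, 5-electron noise floor, and 14-stop scene are all hypothetical values chosen for illustration:

```python
import math


def dynamic_range_stops(full_well_e: float, noise_floor_e: float) -> float:
    """Stops between the faintest tone that clears the noise floor and a full bucket."""
    return math.log2(full_well_e / noise_floor_e)


# Hypothetical numbers chosen for illustration only.
sensor_stops = dynamic_range_stops(40_000, 5)
scene_stops = 14  # e.g., a shadowed forest path under sunlit treetops

print(f"Sensor range: {sensor_stops:.1f} stops")  # Sensor range: 13.0 stops
if scene_stops > sensor_stops:
    print("Expect clipped shadows or highlights in a single exposure.")
```

When the scene spans more stops than the sensor can hold, something has to give: either the shadows sink below the noise floor or the highlights overflow their buckets, which is when techniques like merging several exposures into an HDR image earn their keep.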