Facebook explains censorship policy for Live video

Facebook only removes content if it celebrates or glorifies violence, not if it’s only graphic or disturbing, according to a spokesperson.

Facebook also insists that the video of Philando Castile’s death was temporarily unavailable due to a technical glitch that was Facebook’s fault. That contradicts theories that the video disappeared because Facebook waffled on whether it should stay up, because a high volume of users reported it as violent, because police who’d taken possession of Castile’s girlfriend’s phone and Facebook account deleted it, or because police asked Facebook to remove it.

Facebook refused to say exactly what caused the glitch, such as whether a traffic spike was responsible. It did, however, release a statement, quoted in full below.

The temporary removal raised questions from BuzzFeed, TechCrunch and other outlets about Facebook’s roles and responsibilities for hosting citizen journalism that could be controversial or graphic.

Facebook’s graphic content censorship policy

I spoke at length with a Facebook spokesperson to get answers on exactly what its Community Standards say about graphic content, and when violations lead to censorship. Though the spokesperson refused to be quoted beyond an official statement, here’s what I learned:

Facebook’s Community Standards outline what is and isn’t allowed on the social network, from pornography to violence to hate speech. They apply to Live video the same as to recorded photos and videos.

The policy on graphic content is that Facebook does not allow, and will take down, content depicting violence if the content celebrates or glorifies the violence or mocks the victim. However, violent content that is graphic or disturbing is not a violation if it’s posted to bring attention to the violence or condemn it.

Essentially, if someone posts a graphically violent video saying “this is great, so and so got what was coming to them,” it will be removed, but if they say “This is terrible, these things need to stop,” it can remain visible.

Users can report any content, including Live videos in progress, as offensive for one of a variety of reasons, including that it depicts violence.

Even a single report flag sends the content to be reviewed by Facebook’s Community Standards team, which operates 24/7 worldwide. These team members can review content whether it’s public or privately shared. The volume of flags does not have bearing on whether content is or isn’t reviewed, and a higher number of flags will not trigger an automatic take-down.

There is no option to report content as “graphic but newsworthy,” or any other way to report that content could be disturbing and should be taken down. Instead, Facebook asks that users report the video as violent, or with any of the other options. It will then be reviewed by team members trained to determine whether the content violates Facebook’s standards.

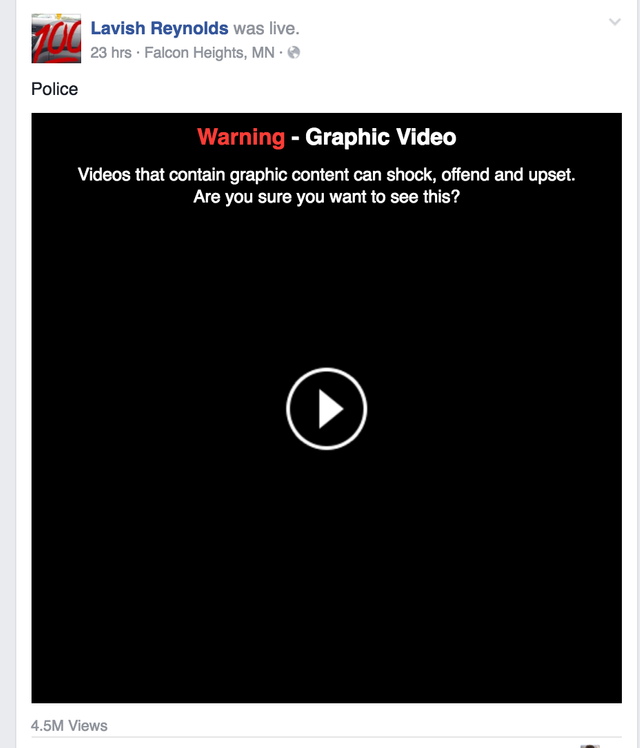

There are three possible outcomes to a review. 1) The content does not violate Facebook’s standards and is not considered graphic, and is left up as is. 2) The content violates Facebook’s standards and is taken down. 3) The content is deemed graphic or disturbing but not a violation, and is left up but with a disclaimer.

The black disclaimer screen hides the preview of the content and says “Warning – Graphic Video. Videos that contain graphic content can shock, offend, or upset. Are you sure you want to see this?” These videos do not auto-play in the News Feed, and are typically barred from being seen by users under 18.

Live videos can be reviewed while they’re still in progress if reported, and Facebook can interrupt and shut down a stream if it violates the standards. Facebook also monitors any public stream that reaches a high enough number of viewers.

If Facebook’s team believes a person depicted in shared content is a threat to themselves or others, it will contact local law enforcement. It will also encourage users flagging the content to contact the authorities.

Overall, these policies do not appear to be overly restrictive. Facebook’s censorship rules focus on the glorification of violence, such as videos posted to promote or celebrate terrorism.

The policy does not make distinctions about the cause of death, the relationship between the video’s creator and its subjects or the involvement of law enforcement. As with all content posted on Facebook, the creator retains ownership.

The future of citizen journalism

In the case of the Philando Castile video, Facebook says it’s aiming to balance awareness with the graphically violent nature of the content. The company tells me it understands the unique challenges of live video broadcasting and needs a responsible approach.

In a statement to TechCrunch the company says:

“We’re very sorry that the video was temporarily inaccessible. It was down due to a technical glitch, and restored as soon as we were able to investigate.

We can confirm it was streaming live on Facebook. A couple hours after, it was down for about an hour. The video doesn’t violate standards but we marked it as disturbing with a warning.”

The company suspiciously refused to detail the cause of the glitch, though a spike in traffic is a possibility. Still, that ambiguity stokes concerns that Facebook purposefully brought down the clip.

Even if it was a technical glitch, it’s one Facebook must prevent from happening in the future. Live is its chance to become a hub for the real-time news that has historically ended up on Twitter first. And with its acquisition of Periscope, Twitter wants to control live video broadcasting, too. Users may reach for whichever platform they think is most likely to make their voice heard and not censor them.

Regarding Facebook and the future of citizen journalism, the company writes “Just as [Live video] gives us a window into the best moments in people’s lives, it can also let us bear witness to the worst. Live video can be a powerful tool in a crisis — to document events or ask for help.”

Facebook appears committed to hosting content that, as Mark Zuckerberg says, can “shine a light” on injustice, even if it might shock people.

Calling 911 can’t bring the same transparency and reach to a situation that live video can. With 1.65 billion users, Facebook connects more of us than perhaps any other communication channel, and gives us a Live video camera to illuminate wrong-doing for the world to see.

That power and potential for profit come with a responsibility not to shy away from controversy.
