The future of particle physics - from the Large Hadron Collider to future circular colliders

I like to dream about the future, especially when I have the chance to participate in the discussions that will shape it. Particle physicists are currently preparing the future of their research field, and important decisions will be made in the next few years.

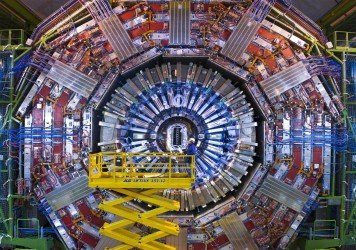

[image credits: CERN]

In this article, I will discuss one possible option for the next collider to be built: a very large circular collider with a collision energy about 7 times that of today's most powerful machine (the Large Hadron Collider at CERN), expected to record 10 times more data, and possibly 100 km in circumference.

THE LARGE HADRON COLLIDER - A RECAP

The Large Hadron Collider (or LHC for short) is the largest particle collider ever built and is currently operating at CERN, the largest physics laboratory in the world.

The high-energy physics community today has a wonderful machine that allows us to push forward the frontier of knowledge. So far, the LHC is famous for its discovery of the Higgs boson (please have a look at my theory and experiment articles on this topic), the missing link of the Standard Model that evaded detection for many years, until 2012.

And as a first lovely consequence of the Higgs discovery, a fresh Nobel Prize!

[image credits: CERN]

But in 2012, the year the Higgs boson was discovered, the story was only starting. The LHC is expected to run until 2035 and to collect far more data than has been recorded so far.

In short, many avenues for new discoveries remain open. Although Nature seems to be playing tricks on us and hiding any new phenomenon from detection, there are many reasons to expect new phenomena (see the couple of posts on this topic that I have recently written, here and there).

We now need to wait (at least) for the new results to be announced at the next Moriond conference in March 2017, the most important winter conference for particle physics.

INTERMEZZO - CHASING AMBULANCES

In the meantime, one of the fun things I can do as a theorist is to chase ambulances, in other words, small (not very significant) excesses and deficits in the data with respect to the expectations of the Standard Model.
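To make "not very significant" a bit more concrete: a common way to quantify a small excess or deficit in a single counting bin is the Poisson likelihood-ratio significance. The sketch below is a hedged illustration with hypothetical event counts; it ignores systematic uncertainties and the look-elsewhere effect, both of which matter a lot when chasing ambulances.

```python
import math


def local_significance(n_obs: float, b: float) -> float:
    """Signed local significance of a counting excess (Z > 0) or deficit (Z < 0).

    Uses the Poisson likelihood-ratio statistic
        Z = sign(n - b) * sqrt(2 * (n * ln(n / b) - (n - b)))
    for an observed count n against a known background expectation b.
    Systematic uncertainties and the look-elsewhere effect are ignored.
    """
    if b <= 0:
        raise ValueError("background expectation must be positive")
    if n_obs < 0:
        raise ValueError("observed count cannot be negative")
    # n * ln(n / b) tends to 0 as n -> 0, so handle an empty bin explicitly
    log_term = n_obs * math.log(n_obs / b) if n_obs > 0 else 0.0
    q = 2.0 * (log_term - (n_obs - b))
    # max() guards against tiny negative values from floating-point rounding
    return math.copysign(math.sqrt(max(q, 0.0)), n_obs - b)


# Hypothetical bins, each with 100 background events expected
print(round(local_significance(110, 100), 2))  # mild excess  -> 0.98
print(round(local_significance(90, 100), 2))   # mild deficit -> -1.02
```

A 10% fluctuation on 100 expected events is only about a one-sigma effect, which is why such deviations are ambulances rather than discoveries.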

[image credits: pixabay]

I have recently found a good one that seems to have been overlooked by everyone so far. More about it when the related (scientific, and maybe steemit) article is out… No spoilers! ^^

Whether I believe in this ambulance or not is not the question. What makes it interesting is not whether the ambulance is real (this we will learn by being patient enough).

The interesting question to ask is the following: if an excess or a deficit (a deviation, for short) with respect to the Standard Model is real, are we prepared to perform all the measurements needed to understand what is going on?

And to do that, there is only one way.

First, we need to build one or more particle physics models that could explain the deviation. These can range from simple to complicated models.

We then need to check the predictions of these models and confront them with existing data. All data. This will constrain the model parameters.

Finally, once we have found some surviving benchmark setups that accommodate both the deviation we are chasing and all other existing (null) results, we can check how the theory could be further tested, and possibly falsified.

In this last step, it may turn out that some key predictions of the model are untested. We consequently add an item to the LHC search program. This is why, in my opinion, chasing ambulances is useful.

THE NOT TOO FAR FUTURE IS TODAY

It is now time to go back to the topic. Approximating all dates, the first LHC studies date from 1985, the machine started in 2005, and it will operate until 2035.

To recap this timeframe, we needed 20 years to understand what could be done in terms of physics, decide that this was useful for high-energy physics, design and build the machine and the associated experiments, and, of course, finally start the whole thing.

This 20-year period is then followed by 30 years of physics and data taking.

The LHC will stop in 2035 and it is important to be ready to start a new series of experiments right after this.

Based on history, we may need about 20 years to work out what this next machine could be and to investigate its potential.

Now is thus the right time to think about the future!!!

This is why physicists are thinking about future experiments that could be built, in particular at CERN but potentially elsewhere as well. For each idea, we need to consider the physics potential and the cost to society (note that, in principle, there will also be a benefit given back to society, as for [the LHC](https://steemit.com/science/@lemouth/the-cern-large-hadron-collider-and-its-economical-impact-on-the-society)).

A FUTURE CIRCULAR COLLIDER?

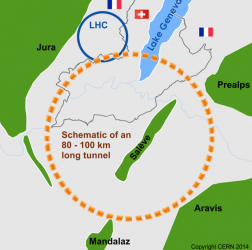

[image credits: CERN]

There are many options for a future machine on the menu, and one I particularly like is a future circular hadron collider. In short, this is nothing but a VLHC, a Very Large Hadron Collider: we take the LHC and scale up the entire setup by a not-so-small factor (check out the map).

Such a concept is known as an FCC, or Future Circular Collider. More information can be found on this CERN website or this IHEP website, the former describing the CERN option and the latter the corresponding Chinese option.

But why is such a machine useful? There are three scenarios, depending on what will or will not be found at the LHC.

First, the LHC may observe something new. We will try to characterize what this thing is through several physics theories. Most new physics theories, however, predict a full spectrum of new particles, many of them possibly out of the LHC's reach. For this reason, a more powerful machine will be needed.

Second, the LHC may observe deviations from the Standard Model, but not any new particle itself. We would then be seeing an indirect effect of a new particle. By studying it, we can estimate the mass scale of the new particles responsible for the effect. And to get there, there will be only one way: a new, more powerful machine.

Finally, we may be unlucky and find nothing at the LHC. The only way to find a new phenomenon will then be to measure all the Standard Model observables as precisely as possible. In particular (taking one example among many), all Higgs-boson self-interactions should be measured: the trilinear one (an interaction between three Higgs bosons) can be roughly extracted from LHC data, while the quartic one (an interaction between four Higgs bosons) is out of reach. We therefore need a more powerful machine to do that.
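For readers who want the formulas: in the Standard Model, both self-interactions follow from the Higgs potential. A short textbook derivation (standard conventions, unitary gauge, with $v \approx 246$ GeV the vacuum expectation value) shows that the cubic and quartic terms are both fixed once the Higgs mass is known, which is why measuring them independently is a genuine test of the Standard Model:

$$
V(H) = -\mu^2\, H^\dagger H + \lambda\, (H^\dagger H)^2, \qquad H = \frac{1}{\sqrt{2}}\begin{pmatrix} 0 \\ v + h \end{pmatrix}, \qquad \mu^2 = \lambda v^2
$$

$$
V(h) = \frac{1}{2} m_h^2\, h^2 + \lambda v\, h^3 + \frac{\lambda}{4}\, h^4 - \frac{\lambda}{4} v^4, \qquad m_h^2 = 2 \lambda v^2
$$

With $m_h \approx 125$ GeV this gives $\lambda = m_h^2/(2v^2) \approx 0.13$. New physics in the Higgs sector would typically show up as a deviation of the $h^3$ or $h^4$ coefficient from these predicted values.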

In short, we will need a more powerful machine to investigate further all possible findings, or non-findings, of the LHC.

CONCLUSIONS - GETTING PREPARED

Physicists started evaluating the physics potential of such a very large circular collider a couple of years ago.

We are talking about a 100 km long collider, to be built either at CERN or in China (both have plans in this respect, although it is clear that only one collider will be built). The energy of such a machine will be 7 times that of the LHC, and the experiments built along it are expected to record a very large amount of data (10 times more than at the LHC).

This implies many challenges!

[image credits: the particle zoo]

I am working on our ability to precisely measure the quartic Higgs interaction strength at this future collider. It turns out that this will be extremely difficult (as collaborators and I showed last year), but actually feasible (as the same collaborators and I will show next month).

On the other hand, experimental colleagues have started R&D on the detectors that should be built, their features, etc., and IT people have started to think about data management.

We are moving forward on all fronts in order to be ready for 2018, the year in which the European Strategy for Particle Physics will be updated.

In the meantime, please stay tuned!

What do you think are the chances of this new collider being built if case 3 happens and the LHC does not find anything? I will be starting my PhD in March but decided to go into quantum computing instead of experimental particle physics for fear of this happening.

In terms of physics, case 3 is still very exciting. It may imply that we need new guiding principles for building new physics theories. That is very exciting in itself.

Unfortunately, this may be harder at the political level, which is where we need to go to get the next generation of colliders built. I honestly do not know the possible outcome, and I can only hope. I am still too young to be among those who will convince the decision-makers. I can only watch (and study physics ^^ ).

To move on to the second item of your message: particle physics is a tough field (although I don't know whether it is tougher than other fields; probably not). After the PhD, people usually go through postdoc after postdoc before possibly dreaming of getting a permanent job. And at that stage, fewer than 1% of the applicants will actually get one.

However, having a PhD in physics and spending some time here and there as a postdoc also brings experience. A PhD teaches you how to solve complicated problems, how to persevere in solving them, etc. This is experience that private companies also look for, so I believe it is not a waste of time. And you also take part in something big. Very big. And this is cool!

In any case, good luck with your career! Quantum computing is also a very exciting field!

VLHC needs a fancy name I think! How about Valhalla Collider?

Haha! That's a good one. At least way sexier than FCC :D

I will definitely reuse it for my next (informal) talk! ^^

You should. Maybe it will stick!

And I will definitely cite you ^^

:)

Very Large Hadron Collider: it's indeed significantly larger, about one order of magnitude judging by your image.

In terms of circumference, we are only going from 27 km to 100 km. Only a factor of about 3.7 :)

How does this extra distance allow for seeing things not possible with the smaller LHC? This is probably a really dumb question... Is it just because greater velocities can be achieved?

The extra distance allows us to accelerate the particles to higher energies.

The LHC will act as a pre-accelerator, and the beam will then be injected into the new ring, where the kinetic energy of the particles is expected to be multiplied by 7.

If this new ring is ever built of course (let's hope) :)
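Why does a bigger ring mean more energy? In a circular machine, the beam energy is limited by how strongly the dipole magnets can bend the particles onto the ring. The standard magnetic-rigidity relation makes this quantitative. The sketch below assumes approximate design values (about 8.33 T dipoles and a 2.8 km bending radius for the LHC; about 16 T dipoles and a 10.4 km bending radius for a 100 km FCC-hh ring); treat these numbers as illustrative inputs, not official parameters.

```python
# Magnetic rigidity: a particle of unit charge and momentum p on a circle of
# bending radius rho in a dipole field B satisfies p [GeV/c] ~= 0.2998 * B [T] * rho [m].
# For ultra-relativistic protons E ~= p c, so in TeV and km the same
# coefficient applies: E [TeV] ~= 0.2998 * B [T] * rho [km].
RIGIDITY_COEFF = 0.299792458


def beam_energy_tev(b_field_tesla: float, bending_radius_km: float) -> float:
    """Approximate energy per proton beam for a given dipole field and bending radius."""
    return RIGIDITY_COEFF * b_field_tesla * bending_radius_km


# Approximate design values (assumptions for illustration)
lhc = beam_energy_tev(8.33, 2.80)   # LHC: ~8.33 T dipoles in the 27 km ring
fcc = beam_energy_tev(16.0, 10.4)   # FCC-hh target: ~16 T dipoles in a ~100 km ring

print(f"LHC beam energy ~ {lhc:.1f} TeV")
print(f"FCC beam energy ~ {fcc:.1f} TeV")
print(f"Energy ratio    ~ {fcc / lhc:.1f}")
```

Under these assumptions, the factor of about 7 comes from two sources: the larger bending radius (about 3.7 times) and the stronger magnets (about 1.9 times).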

Just out of curiosity: how much data do you expect from the next-generation collider, say for the experiment producing the most data? Just the order of magnitude.

We measure data in inverse barns (integrated luminosity). For the LHC, we expect 3 inverse ab (attobarns) per experiment by the end of the run. Here, the goal is 30 inverse ab: exactly a factor of 10.
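The reason luminosity is quoted in inverse barns is that the expected number of events is simply the cross section times the integrated luminosity, N = σ × L. A minimal sketch with a hypothetical 1 fb process (in reality the cross section of a given process also changes with collision energy, usually growing at higher energy):

```python
INV_FB_PER_INV_AB = 1000.0  # 1 ab^-1 = 1000 fb^-1


def expected_events(cross_section_fb: float, lumi_inv_ab: float) -> float:
    """N = sigma x integrated luminosity, with sigma in fb and luminosity in ab^-1."""
    return cross_section_fb * lumi_inv_ab * INV_FB_PER_INV_AB


# A hypothetical process with a 1 fb cross section
for name, lumi in [("LHC (3 ab^-1)", 3.0), ("future collider (30 ab^-1)", 30.0)]:
    print(name, expected_events(1.0, lumi))
```

For the same cross section, ten times more integrated luminosity means ten times more events: 3000 versus 30000 here.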

Let me try to estimate (sorry for being curious about the computing part): will the Tier 0 receive ~290TB of data a day (with Tier 1 staying around 29TB/day after filtering), or is the experiment more diluted in time, i.e. taking longer to run? I am trying to compare with the ALICE experiment (which generated ~4Gb/s, if I'm not wrong).

There are researchers investigating these questions (I have only barely followed that work, so I don't have many details to share).

There will be far too many collisions to record them all, and one needs to design strategies to decide which ones to record and which ones to ignore. This requires assumptions about the technology that will be available in 20 years. Extrapolating from what is available today using Moore's law, everything should be fine (I remember this from a talk I attended 2 years ago). All interesting processes (with electroweak rates or lower) could be recorded.
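The figures in this exchange can be put on a common footing with two back-of-the-envelope helpers. The 290 TB/day and 29 TB/day inputs are the commenter's estimates rather than official FCC numbers, and the two-year doubling time used for the Moore's-law extrapolation is a rough assumption.

```python
SECONDS_PER_DAY = 86_400


def tb_per_day_to_gbit_per_s(tb_per_day: float) -> float:
    """Convert a daily volume in terabytes (1 TB = 1e12 bytes) into gigabits per second."""
    bits_per_day = tb_per_day * 1e12 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e9


def moore_growth_factor(years: float, doubling_time_years: float = 2.0) -> float:
    """Capacity gain assuming a fixed doubling time (a rough Moore's-law extrapolation)."""
    return 2.0 ** (years / doubling_time_years)


print(f"290 TB/day ~ {tb_per_day_to_gbit_per_s(290):.1f} Gb/s")
print(f" 29 TB/day ~ {tb_per_day_to_gbit_per_s(29):.2f} Gb/s")
print(f"20 years, doubling every 2 years: x{moore_growth_factor(20):.0f}")
```

Under these assumptions, 290 TB/day corresponds to roughly 27 Gb/s, while 20 years of doubling every two years gives about a thousandfold gain in capacity.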

I may, however, need to update myself on the topic. I don't remember this being discussed at the last FCC workshop at CERN, which may mean it is actually not a problem.

I was not saying it was a problem. I've read many documents the LHC team released about its architecture, the LHC Grid. It is quite an "uncommon" architecture, but of course I understand you need it.

I asked for a comparison with ALICE because the amount of data this experiment generated is a well-known case study (also) in my sector:

http://aliceinfo.cern.ch/Public/en/Chapter2/Chap2_DAQ.html

Again, I didn't say it is a problem; it is certainly a challenge. A very interesting one, by the way. I like the idea of filtering data at Tier 0 and then transmitting it to Tier 1 for reconstruction. Quite an unusual approach, but interesting.

Sorry for the late reply. I am mostly away from the computer/cell phone during the weekend (I already spend more than 70 hours in front of them during the week :p )

Double sorry for having ignored the ALICE comparison. I just forgot to comment... thanks for insisting. To start with, what is said below probably holds for all LHC experiments. Then, a disclaimer: I am a theorist, so I will try to answer as well as I can, but this level of technicality is not directly connected to what I do.

The triggers are the key. Not all collisions are recorded: decisions are made so that only interesting collisions are kept, within the constraints of the electronics. The challenges, at the time the experiments were designed, were to build triggers good enough to guarantee that all interesting events would be recorded, and a data acquisition system fast enough to keep up. I think these challenges have been successfully met.
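The idea of a trigger can be illustrated with a toy filter. Everything below is hypothetical: the event summary, the two variables and the 100 GeV threshold are made up for illustration, and real LHC triggers are multi-level systems (a fast hardware first level followed by a software high-level trigger) with many more selection paths.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Event:
    """A hypothetical, heavily simplified summary of one collision."""
    leading_pt_gev: float  # transverse momentum of the hardest object
    n_leptons: int         # number of reconstructed electrons/muons


def passes_trigger(event: Event, pt_threshold_gev: float = 100.0) -> bool:
    """Toy trigger: keep hard collisions or events with at least two leptons."""
    return event.leading_pt_gev > pt_threshold_gev or event.n_leptons >= 2


def apply_trigger(events: Iterable[Event]) -> List[Event]:
    """Return only the events that would be recorded."""
    return [ev for ev in events if passes_trigger(ev)]


events = [
    Event(12.0, 0),   # soft collision: dropped
    Event(250.0, 0),  # hard jet: kept
    Event(30.0, 2),   # dilepton candidate: kept
    Event(45.0, 1),   # soft single lepton: dropped
]
kept = apply_trigger(events)
print(f"kept {len(kept)} of {len(events)} events")
```

The design trade-off is the one described above: the selection must be cheap enough to run at the collision rate, yet loose enough not to throw away the interesting events.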

Note that this chain also includes Tier 2 and Tier 3 machines, so the analysis work is done on the lower-tier machines, which can request parts of the data with some filters applied.

I hope all of this helps. Do not hesitate to continue the discussion ^^

Don't worry, I was just asking out of curiosity, so you don't need to apologize for the late answer. I am curious because I work in the private sector, and your "big data" is a use case for us, which is why I ask.

Of course I have the IT perspective: to me, the sensors you are using are real-time (meaning they cannot wait if the peer is slow), so the challenge is "capacity". But you say the data flow is the same as ALICE's, so I think this is because you filter the data using "triggers". Which makes it interesting.

Basically, I work with SOMs (Kohonen self-organizing maps) and their derivatives: self-learning AI that can divide data into sets of "similar" data. For example, if you feed in economic/demographic/social data about countries and ask it to give similar countries the same color, you see this:

So basically, your "triggers", which are able to decide what to keep, are very interesting. OK, you only have 2 categories (keep and don't keep), while a SOM may decide how many categories to use, but the problem is still the same: putting apples with apples and oranges with oranges, without being able to predict which kind of fruit you are going to receive.
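For readers curious about the comparison, here is a minimal one-dimensional self-organizing map in plain Python. It is a hedged teaching sketch, not the commenter's tool and not a production library: nodes sit on a line, updates use a Gaussian neighbourhood, the learning rate and neighbourhood width decay linearly, and the data are hypothetical. Unlike a trigger, whose keep/drop cuts are designed by hand, the SOM learns the grouping from the data themselves.

```python
import math
from typing import List, Sequence

Vector = List[float]


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_matching_unit(weights: List[Vector], x: Sequence[float]) -> int:
    """Index of the node whose weight vector is closest to x."""
    return min(range(len(weights)), key=lambda i: _dist2(weights[i], x))


def train_som(data: List[Vector], n_nodes: int, epochs: int = 50,
              lr0: float = 0.5, sigma0: float = 0.5) -> List[Vector]:
    """Train a minimal 1-D self-organizing map (nodes arranged on a line)."""
    # Deterministic initialisation: spread the nodes over the data set
    weights = [list(data[(i * (len(data) - 1)) // max(n_nodes - 1, 1)])
               for i in range(n_nodes)]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                   # learning rate decays linearly
        sigma = max(sigma0 * (1.0 - frac), 1e-3)  # neighbourhood shrinks as well
        for x in data:
            bmu = best_matching_unit(weights, x)
            for i, w in enumerate(weights):
                # Gaussian neighbourhood measured on the 1-D node grid
                h = math.exp(-((i - bmu) ** 2) / (2.0 * sigma ** 2))
                for k in range(len(w)):
                    w[k] += lr * h * (x[k] - w[k])
    return weights


# Two well-separated (hypothetical) groups of 2-D points
data = [[0.0, 0.0], [0.2, 0.1], [0.1, 0.3],
        [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]]
weights = train_som(data, n_nodes=2)
print([best_matching_unit(weights, x) for x in data])  # -> [0, 0, 0, 1, 1, 1]
```

With more nodes (or a 2-D grid, as in most Kohonen maps), neighbouring nodes end up representing similar data, which is what produces the "same color for similar countries" picture.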

I will keep looking for documentation, thank you for your time, your explanations about particle physics are interesting.

I think we should stop messing around with things we don't know. The repercussions of this are unknown, and I think we have already caused enough damage. Just my honest opinion though.

We would never have discovered anything without trying to understand the unknown. Here, however, I am a bit puzzled. What damage has the LHC done? None, to my knowledge. For the next generation of colliders, what damage do you expect us to do?

I just think that physics and science are going a little too far and playing God. Surely you have heard of HAARP, smart dust and chemtrails, to name a few. Yes, they are not in the same category as the collider, but they are definitely inventions from the same scientists who created the aforementioned. Again, this is my opinion. But there is enough evidence out there to prove that whatever man makes is, most of the time, used for power and control (too bad they don't realize this is simply an illusion). I am not a fan of man-made inventions, especially when they try to take on a role they are not supposed to. Man by nature is destructive, abusive and a narcissist. But hey, that's my opinion. Don't get me wrong, science does have its plus side, but unfortunately we abuse our freedoms. Cheers.

One must be careful not to mix everything up. Science is composed of many fields. I can only talk about what I know: particle physics / high-energy physics. For other branches of science (which are the ones you refer to), I do not know what is going on from the inside, as I am as much an outsider as you are.

The purpose of all high-energy physics experiments is to understand deeply how nature works. Nothing more, nothing less. I honestly do not understand what "playing god" means (to me it means nothing), in particular in the high-energy physics context. There is no power or control to take from anyone. We are just trying, with the human means we have, to understand the dynamics of our universe. This is, by the way, what science has always done, to a broad extent.

Note that we also perfectly understand how our machines work. Believe me, this is safe.

Well, from your perspective as a physicist, I agree with you. But unfortunately the world is not the one you know. The real world is made of people and entities that take this kind of technology and power and use it for no good. This is the one I am talking about. As you know, there are a myriad of examples of why we should not be messing with our world. I don't believe it is up to us to figure this out, as we are merely visitors here, and what we learn here is irrelevant in the spirit of things. But again, I guess from your perspective this means nothing. The internet itself is safe as well, but its applications allow more harm than good to be done; imagine a collider. I'm not trying to discredit anything, but again, humans are destructive and arrogant by nature. Cheers

How much do you know of the "real world"? How many countries have you traveled to? How many people do you know personally? How far have you gone from home, for more than 3 months in a row? I am asking because, very often, when people say "real world" they mean "real TV in my living room".