How close are we to true AI?

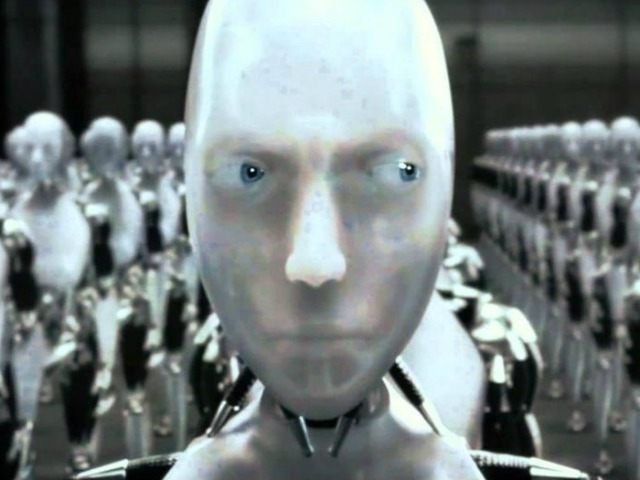

AI is developing fast; today, we are closer to true AI than we have ever been before. And although most of us regard films like ‘Terminator’ and ‘I, Robot’ as fantasy, perhaps they are more real than we think…

Recently, Elon Musk again warned the world about the dangers of AI. Musk has stated many times that the world of AI needs regulation, just like cars and planes do, a view that many renowned scientists and public figures share. And although the tweet may be part satire, is there some truth behind it?

To judge this question, we need to define the point at which AI becomes a danger to society. Many people understand this as the point where AI is able to make decisions for itself, with no prior influence: the ability to feel. But how do we judge this? By using IQ tests. IQ tests were formulated as a method of quantifying intelligence, although their record in this regard is often disputed. Computers can take IQ tests, scoring at around the same level as a four-year-old. But many other factors go into true artificial intelligence that IQ tests do not even touch on.

Emotional intelligence describes how well someone is able to understand and interact with people on an emotional level, or to interpret their own feelings. It is sometimes thought of as empathy, something intuitive, but it is undoubtedly a mental process that depends on our brain’s ability to analyse information and infer an insight or solution, so it qualifies as “intelligence”. This aspect of our intelligence is thought to be integral to our creative abilities, which is something else machines will have to master if they are going to develop “human-like” intelligence. Steps are certainly being taken in this direction. IBM’s Watson Music can create music designed to mimic the feelings and emotions conveyed by a different piece of music. AIs have also written poetry and novels.

Athletes and sportspeople rely on spatial awareness of what is going on around them, and they also need their brains to work quickly and accurately to react to complicated, changing circumstances. Again, these are processes carried out in the brain, even though the qualities we most often associate with athletes are physical. AIs have been taught to play old video games using only visual input, showing that they are capable of “learning” how to react to movement and even of developing a desire to win.

Our communicative ability, meaning how well we are able to express our ideas and pass on the valuable information we interpret from the world around us, is another factor of intelligence. Again, machines have made ground here: recent developments in the AI-related fields of Natural Language Processing and Natural Language Generation are bringing them closer to being able to communicate with us in a human-like way.

If an AI were to display all of these abilities, then perhaps it would be considered to have human-like, if not better-than-human, intelligence. And although it is unlikely we will wake up tomorrow with robots running the world, maybe one day this could be a grim reality.

Nice write-up, miloburne, keep it up! I'm happy to read more about AI and deep learning!

Using humans as the gold standard for intelligence shows a lack of ambition. Then again, how do we test intelligences that don't need to communicate with humans via language or cultural artefacts? I dunno.

I'm familiar with a number of state-of-the-art machine learning algorithms, and can safely report that none of them even remotely approach the idea of human sentience. None of these algorithms give a machine the ability to develop a sense of self, or to answer questions about why it made a particular decision, or to grasp the real world consequences of its decisions.

One huge stumbling block with today's AI tech is learning speed. Efforts to speed up batch gradient descent are coming up somewhat short. You have to show a convolutional neural network something like 5,000 images of dogs (as well as 5,000 images of things that are not dogs) before the machine learns how to classify images by whether they depict a dog. Yet a small human child can learn the task after viewing one image. Not only that, but the child can immediately identify a puppy, even without ever having seen one before.
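To make the batch gradient descent point concrete, here is a minimal sketch. It is not a convolutional network. It is a hypothetical one-feature logistic-regression classifier on made-up toy data, assumed purely for illustration. It shows the two properties behind the complaint: every update averages over every training example, and the model only creeps toward a good fit over many passes. The function names and the "dog / not a dog" labelling are illustrative, not from any library.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_loss(w: float, b: float, xs: list[float], ys: list[int]) -> float:
    """Average cross-entropy of the model p(y=1 | x) = sigmoid(w*x + b)."""
    eps = 1e-12  # keep log() away from zero
    total = 0.0
    for x, y in zip(xs, ys):
        p = min(max(sigmoid(w * x + b), eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(xs)


def batch_gradient_descent(xs, ys, lr=0.1, epochs=1000):
    """Fit (w, b) with full-batch gradient descent (hypothetical toy model).

    Each update averages the gradient over the *whole* training set, so one
    step costs O(len(xs)) and many steps are needed before the fit is good.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # d(log-loss)/d(logit) for one example
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w: float, b: float, x: float) -> int:
    """Classify x as 1 ("dog") or 0 ("not a dog") with a 0.5 threshold."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


if __name__ == "__main__":
    # Made-up toy data: negative x means "not a dog" (0), positive means "dog" (1).
    xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
    ys = [0, 0, 0, 0, 1, 1, 1, 1]
    for epochs in (1, 10, 100, 1000):
        w, b = batch_gradient_descent(xs, ys, lr=0.1, epochs=epochs)
        print(f"{epochs:>5} passes: loss = {log_loss(w, b, xs, ys):.4f}")
```

Running it prints a loss that keeps shrinking as the number of full passes grows, even on eight trivially separable points. Real image classifiers repeat this over thousands of examples and millions of parameters, which is the cost the comment is pointing at.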

To be honest, some in the industry view Musk's recent warnings as an attempt to shape, and gain control over, regulations that would restrict small AI shops, essentially creating an anti-competitive barrier to trade. Think about it. If Musk scares enough people, they'll put him in charge, and then he'll get to write the rules (which, of course, will benefit his company). When reading predictions of doom and gloom, it's best to exercise critical thinking and demand proof.

@miloburne Well done for sticking at it! Followed.