Steem Pressure #2 - toys for boys and girls

So you want to play with Steem?

Let's prepare a proper environment for our playground.

First, the hardware.

If you’ve read the previous episode of Steem Pressure, you know that Steem is growing (and that’s awesome!). Today, we’ll choose proper hardware to run our nodes.

Network

A low-latency network with at least 100Mbps bandwidth in both directions is a recommended minimum, even if you are running “just” a seed node.

Please note that it is not enough to have a fast network card or an ISP that claims to provide a 1Gbps connection. You need decent network connectivity across the globe.

CPU

Virtually any modern x86-64 CPU with at least 2 cores will be OK.

Currently, you will not benefit from multiple cores as much as from faster single-core performance.

Most likely a CPU with 4 cores at 3GHz will perform better than a CPU with 8 cores at 2GHz.

RAM

A good choice for a consensus node is 24-32GB of RAM.

For a full node, it is 128-256GB of RAM.

Of course, if you can afford to put everything in RAM, just do it.

Storage backend

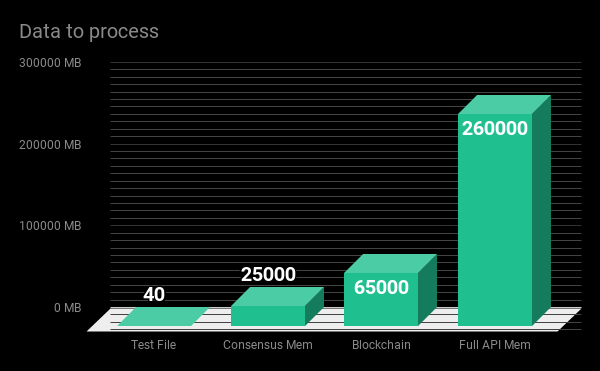

Size

Currently, we need over 65GB of storage to hold the block_log file itself.

Additionally, you’ll need over 25GB to keep shared_memory.bin for the simplest node, and up to 260GB for a fully featured API node with all the bells and whistles.

So I would say for now, it’s 100GB for a consensus node and 400GB for a full node.

That’s true at the time I’m writing this post, so by the time you read it, these parameters may no longer be enough.
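To see where those round numbers come from, here is a quick back-of-the-envelope sketch in Python. It only adds up the figures quoted above. The names are made up for illustration, and the numbers themselves will be stale by the time you read this.

```python
# Figures quoted in this post, in GB, valid only at the time of writing.
BLOCK_LOG_GB = 65
SHARED_MEMORY_GB = {"consensus": 25, "full": 260}
RECOMMENDED_GB = {"consensus": 100, "full": 400}


def minimum_storage_gb(node_type):
    """Bare minimum: block_log plus shared_memory.bin, with no headroom."""
    return BLOCK_LOG_GB + SHARED_MEMORY_GB[node_type]


for node_type in ("consensus", "full"):
    minimum = minimum_storage_gb(node_type)
    headroom = RECOMMENDED_GB[node_type] - minimum
    print(f"{node_type}: minimum {minimum}GB, headroom {headroom}GB")
# consensus: minimum 90GB, headroom 10GB
# full: minimum 325GB, headroom 75GB
```

The headroom is what is left for growth, so the tighter it gets, the sooner you will need to revisit your storage.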

Performance

Your storage backend needs to be not only large enough to hold your data but also fast enough to deliver data on time. If your node runs out of RAM and starts using swap (in case of tmpfs setup) or if it runs out of buffers/cache, the workload will put a lot of strain on your storage. You need to make sure that it can handle a lot of random reads and writes with minimum latency. Low latency is the key. That renders most HDD setups unusable.

Trivial benchmark

Run `dd if=/dev/zero of=tst.tmp bs=4k count=10k oflag=dsync` in a directory where you are going to keep your shared_memory.bin file.

That directory is the value of shared-file-dir or, if that is undefined, the value of data-dir (-d).

The result will be a very rough guesstimate of how well your storage backend will be able to handle the workload caused by steemd accessing its shared memory file.

Is your storage backend fast enough?

Check how long it will take to complete that command. Less is better.

- Less than 1 second is perfect.

- Up to 10 seconds is good.

- Up to 30 seconds is so-so.

- Up to 60 seconds is uh-oh.

- Anything more is a no-no.
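If you want to script that check, the scale above can be sketched as a tiny Python function. The function name is hypothetical, and treating each "up to" as an inclusive upper bound is my assumption. The thresholds are as rough as the benchmark itself.

```python
def rate_benchmark(seconds):
    """Map the elapsed time of the dd test to the rough scale above."""
    if seconds < 1:
        return "perfect"
    if seconds <= 10:
        return "good"
    if seconds <= 30:
        return "so-so"
    if seconds <= 60:
        return "uh-oh"
    return "no-no"


print(rate_benchmark(0.4))   # perfect
print(rate_benchmark(12.5))  # so-so
print(rate_benchmark(90))    # no-no
```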

Of course, the answer is not definitive, accurate, or even always true ;-)

Even if it is a minute or two, you still can play with your steemd toy without a purpose and waste your time.

Or maybe you should do something more productive and use your disks to play the “Imperial March” instead?

(Shoutout to @lordvader)

Oh, and by the way:

Blindly copying & pasting snippets is dangerous.

DO NOT EVER RUN ANYTHING YOU DON’T FULLY UNDERSTAND

So what does that command do?

It writes zeros to a temporary file in 4096-byte blocks. It does that about ten thousand times (10,240, to be exact, because count=10k means 10 × 1024), each time synchronizing I/O to get caching out of the equation.
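If shell one-liners are not your thing, the same idea can be sketched in Python. This is only an approximation of what dd does, not a replacement for it. The function name is hypothetical, and I am assuming that an explicit fsync() after each block is an acceptable fallback on platforms where O_DSYNC is not defined. Unlike dd, this sketch deletes tst.tmp when it is done.

```python
import os
import time


def sync_write_benchmark(directory, block_size=4096, count=10 * 1024):
    """Write `count` zero-filled blocks of `block_size` bytes, syncing each one.

    Returns the elapsed time in seconds.
    """
    path = os.path.join(directory, "tst.tmp")
    block = bytes(block_size)
    # O_DSYNC makes every write wait until the data has reached the storage.
    # It is not defined on every platform, so fall back to fsync() per block
    # (an assumption: fsync also flushes metadata, so it is a bit stricter).
    dsync = getattr(os, "O_DSYNC", 0)
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC | dsync, 0o600)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, block)
            if not dsync:
                os.fsync(fd)
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
        os.remove(path)
    return elapsed


# Example (run it in the directory that will hold shared_memory.bin):
# print(f"{sync_write_benchmark('.'):.1f} seconds")
```

As with the dd command, read it first and make sure you understand it before you run it.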

Usually your drive, even if it is an old HDD, can write large chunks of data in bursts very effectively, but that’s not what will be happening with your shared_memory.bin file.

That command writes only 40MB of data.

The shared memory file for a consensus node is about 600x bigger than that.

Oh, and that’s not all.

The shared memory file for a full API node is 10x bigger than the one for the consensus node.
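For the curious, the arithmetic behind those multipliers can be checked in a few lines of Python. The 600x and 10x factors are the rough ones quoted above, so treat the results as ballpark figures, not exact file sizes.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

BLOCK_SIZE = 4096        # bs=4k
BLOCK_COUNT = 10 * 1024  # count=10k

benchmark_bytes = BLOCK_SIZE * BLOCK_COUNT
consensus_bytes = 600 * benchmark_bytes  # "600x bigger"
full_bytes = 10 * consensus_bytes        # "10x bigger" again

print(benchmark_bytes / MIB)  # 40.0
print(consensus_bytes / GIB)  # 23.4375
print(full_bytes / GIB)       # 234.375
```

Those results are in the same ballpark as the shared_memory.bin sizes mentioned in the Size section.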

Dedicated or virtual?

It doesn’t matter, but keep in mind that high quality does.

With a VPS it’s trickier, because it’s usually hard to tell what real performance you can expect, how resources are shared, how much baseline resources are guaranteed, whether the “SSD” drives are local or network attached, what your I/O limits are, etc.

What’s next?

In the next episode, I will show you step by step how to set up a machine from scratch for a basic consensus node, how to configure it, and how it performs in the real battlefield environment.

If you believe I can be of value to Steem, please vote for me (gtg) as a witness on Steemit's Witnesses List or set (gtg) as a proxy that will vote for witnesses for you.

Your vote does matter!

You can contact me directly on steemit.chat, as Gandalf.

Steem On

I remember when I first became interested in Steemit witnesses. I wasn't running one myself, though, as that was completely outside my knowledge and skills. I noticed that quite a few witnesses, at least at that time, seemed to run on low-end hardware.

In general, I think people who try to run a Steemit witness on as little as possible are not showing that they care enough about the platform.

Very true. I hope that my series will help to improve the quality of various nodes across our platform, including witness nodes. There are many people around who treat a witness position like classical PoW mining: fire and forget. It's so wrong. But it's not their fault. It's ours if we vote for them.

Well, I can imagine it's the same with witness votes for most people: vote and forget.

Users (including me) have kept their votes on some witnesses simply because the limit of 30 votes has not been reached yet.

For some, it seems that's the first moment when they even consider removing some votes from other witnesses.

But thank you, I appreciate the post series already.

Luckily neither you nor I need to run a full Steem node then :)

I am pleased by your decision to give me one of your inhabitant's "shout outs". It is further evidence that you are indeed one of the wisest of your planet's inhabitants. There will be a place for you when the Empire arrives next week.

Dennis! Get in here! How do I edit out that last par...

Well I don't understand much of this at all but that video was AWESOME!

Also, of course, I have been voting for you as witness for over a year and a half!

SteemOn Gandalf~*~

Thank you for your support! :-)

No, really, thank you. You deserve the support as a witness. You have done a lot for this community, and that's just the little bit that I even know about. Also, you are a kind and good person in RL, on top of your contributions to this platform.

Glad to know you and have you as part of the community!

Upvote and resteem. GTG, almost a post which I can practically make use of. Keep on trying!

Thank you :-)

Keep up the good work!

Hi @gtg, I am a relative newbie to Steemit, but I already use a lot of the tools that you have created and I find them extremely useful. Firstly, I just want to say thank you for your commitment to such a great and revolutionary platform. I am fairly technical and work in the ICT space, but for a new user of the platform, getting your head around all the 'steem tools' available is a very steep learning curve. As you say, they all offer value, but they could definitely do with some refinement and focus, which will help the less technical users as they continue to come onto the platform.

Thank you for noticing my blog, it means a lot to me.

I voted you as a witness, @surpassinggoogle told me that you have done a lot for the community so thank you so much!

Thank you :-)

Gtg, thank u for this amazing tutorial! I am really looking forward to seeing your next episode! Do u know what I really like about this post!? You also explain to people that they should never run smth they do not understand! Running smth u do not understand can be really bad for u.... Thank u one more time!

PS: Maybe, darn it, I should add that I didn't understand all that much, but I'm trying :D

True, that part is valid in various scenarios, not only those related to Steem.

I'm glad you like it. :-)

Yes, I saw that Paulinka is somehow trying to understand this black magic too ;D I don't want to be any worse. Greetings, @gtg @highonthehog

upvoted. and i have voted you as my witness...

Thank you :-)

Thanks for the info. Agreed: if you don’t know what the snippet does, first find out.

A very curious article. Thank you very much for the information. Very useful information.