[EN] How do self-driving cars work? [How will we move tomorrow? #1]

Hi everyone!

Today I am introducing a series of articles about innovations and projects in mobility and transport. The series will include several articles (normally between 3 and 5) describing different projects and innovations that aim to improve the way we travel.

The first innovation I'd like to talk about is probably the most obvious one: self-driving cars.

But before I start, I would like to thank you for the wonderful welcome you gave my first article. I didn't expect that at all, thank you very much! Thanks also to @steemSTEM for their support and to @ixindamix for letting me appear in an @ocd article :)

What is it? And why does it exist?

Autonomous cars are vehicles capable of travelling on the road network without human intervention, that is, without a driver. Comfort, safety and mobility for people who are unable to drive are the arguments put forward by companies in the sector. Autonomous cars should drastically reduce the number of accidents related to human factors (drowsiness, alcohol, excessive speed or distractions such as the telephone). Human factors are involved in more than 90% of accidents in France (according to ONISR, 2014) and in the USA (according to NHTSA, 2016).

How does it work?

To describe how a self-driving car works, I will use the cars of Waymo, a division of Google's parent company Alphabet Inc., as an example. I chose them because they have already covered more than 6 million km, because in 2016 they reported about one disengagement (the moment the driver takes over the steering wheel) every ~8000 km (approximately two Toronto-Los Angeles trips), and because they communicate frequently about their work.

When a company wants to create an autonomous car, it usually has 3 options (in addition to simulation):

- Add its equipment to a mass-produced car after the car has left the factory.

- Intervene on the assembly line of a mass-produced car to change certain parts of the design and fit its equipment.

- Create its own car model (or at least a prototype).

Waymo started in 2009 with Toyota Prius and Lexus RX450h cars, to which they added their equipment after the cars had left the factory.

Then they created the Firefly, their own prototype designed from the outset as a self-driving car (you can learn more about its design in this article:

Since 2017, Waymo has been working with Chrysler and using a version of the Chrysler Pacifica hybrid minivan whose design has been slightly adapted for autonomous driving and to carry all the necessary equipment.

The sensors:

Now that we have seen what an autonomous car looks like, let's see how it works. The first thing I want to talk about is an element that can be found on all autonomous cars but is especially visible on Waymo's:

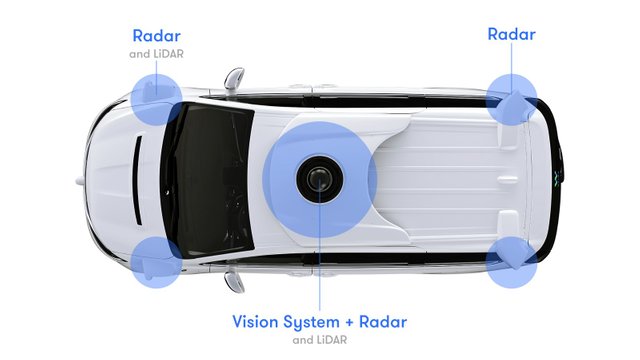

This is a set of sensors made up of a LiDAR (Light Detection and Ranging), a RADAR (Radio Detection and Ranging) and a vision system (a 360° camera). It is surely the most important and most expensive sensor unit on an autonomous car (a single set can cost up to $75,000 according to Waymo), because it is what allows the car to "see". The combination of these 3 sensors makes it possible to detect almost any object, near or far (Waymo estimates that the latest generation can detect objects at a distance of 2 football fields, that is 220 m). There are 4 other RADARs and/or LIDARs on the minivan to cover the blind spots of the roof sensors. They're distributed like this:

Why would they use different sensors?

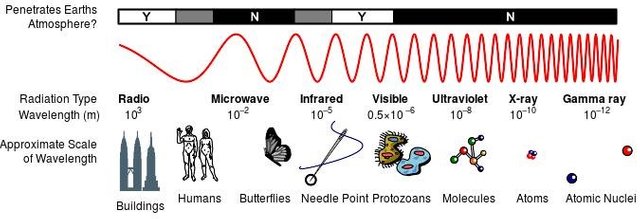

RADARs and LIDARs work on the same principle: the sensor sends a signal of a certain wavelength and measures the time it takes to return. Knowing the speed of the wave, this gives the distance the wave travelled, which is twice the distance to the object (we will talk about distance sensors in a future article). They also share a weakness: both can be disturbed if the transmitter of another sensor uses the same wavelength.
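To make the time-of-flight idea concrete, here is a minimal Python sketch. The function names are my own, and it assumes the wave travels at the speed of light in a vacuum, which is only an approximation in air.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves and infrared light both travel at c


def distance_from_echo(round_trip_time_s: float) -> float:
    """Return the distance to an object (metres) from a pulse's round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so the object sits at half the path.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def echo_time_for(distance_m: float) -> float:
    """Return the round-trip time (seconds) of a pulse reflected at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    # 220 m is the detection range quoted in this article for Waymo's sensors.
    t = echo_time_for(220.0)
    print(f"Echo from 220 m returns after {t * 1e6:.2f} microseconds")
    print(f"Recovered distance: {distance_from_echo(t):.1f} m")
```

An object 220 m away answers in roughly 1.5 microseconds, which gives an idea of how fast the timing electronics of these sensors have to be.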

The difference lies in the wavelength used. For LIDARs it is mostly in the near-infrared range (typically around 900 to 1550 nm, i.e. about 10^-6 m, see the spectrum above). This short wavelength makes LIDARs very precise and able to detect small objects, but in return they are very easily disturbed by changes in luminosity.

This is where radar comes in: a second sensor is needed to compensate for the weaknesses of the LIDAR. Thanks to its long wavelength (in the radio wave range), radar can detect more distant objects, but it cannot detect small objects and is not as precise as LIDAR.
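Why does a shorter wavelength mean finer detail? One textbook way to see it is the diffraction limit (Rayleigh criterion), which says that the smallest angle a sensor can resolve is about 1.22 × wavelength / aperture. The sketch below is only an illustration under my own assumptions: a 1550 nm LIDAR, a 77 GHz radar (a common automotive radar band, not a figure from Waymo) and made-up aperture sizes. Real sensors are limited by many other factors as well.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

# Illustrative values only (assumptions, not Waymo specifications).
LIDAR_WAVELENGTH_M = 1550e-9
LIDAR_APERTURE_M = 0.025
RADAR_FREQUENCY_HZ = 77e9
RADAR_APERTURE_M = 0.10


def wavelength_from_frequency(frequency_hz: float) -> float:
    """Wavelength (metres) of an electromagnetic wave of the given frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def diffraction_limit_rad(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh criterion for a circular aperture: theta ~ 1.22 * lambda / D."""
    return 1.22 * wavelength_m / aperture_m


def smallest_detail_m(wavelength_m: float, aperture_m: float, range_m: float) -> float:
    """Approximate size of the smallest separable detail at a given range."""
    # Small-angle approximation: size ~ range * angle.
    return range_m * diffraction_limit_rad(wavelength_m, aperture_m)


if __name__ == "__main__":
    radar_wavelength_m = wavelength_from_frequency(RADAR_FREQUENCY_HZ)
    lidar_detail = smallest_detail_m(LIDAR_WAVELENGTH_M, LIDAR_APERTURE_M, 100.0)
    radar_detail = smallest_detail_m(radar_wavelength_m, RADAR_APERTURE_M, 100.0)
    print(f"Radar wavelength: {radar_wavelength_m * 1000:.2f} mm")
    print(f"LIDAR detail at 100 m: {lidar_detail * 1000:.1f} mm")
    print(f"Radar detail at 100 m: {radar_detail:.2f} m")
```

With these assumed numbers the LIDAR separates details several hundred times smaller than the radar at the same distance, which matches the intuition that LIDAR "sees" small objects and radar does not.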

The camera, on the other hand, helps identify the objects around the car, such as road signs or types of vehicles (police, fire brigade, ...).

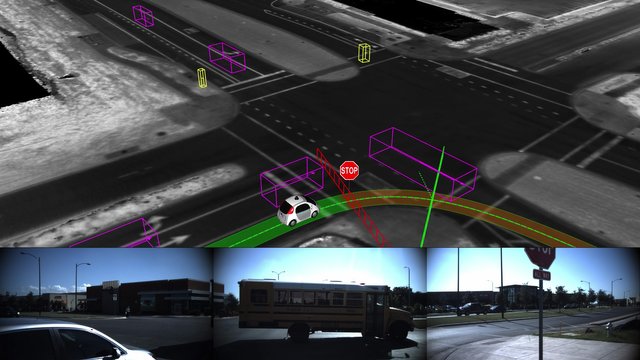

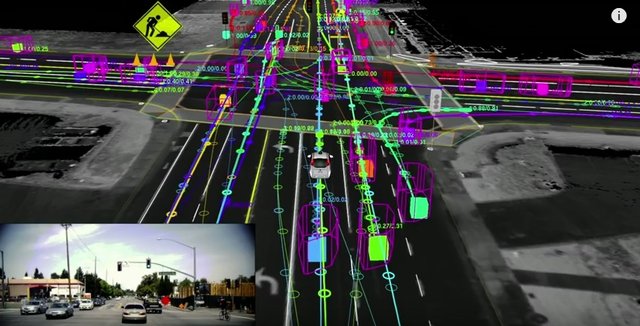

The data from these three types of sensors, combined with a road map and a location system that can do without GPS in the event of signal loss (presumably thanks to a system similar to odometry, which measures wheel rotation), make it possible to build a representation of reality that looks like this:

It is this representation that allows the computer to predict the movements of the various objects and thus decide which actions to take. Once the trajectories are calculated, they can be displayed on this representation, but it then becomes unreadable for us (and each of these predictions is updated several times per second):
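As an aside on locating the car without GPS: the wheel-rotation idea mentioned above is usually called odometry or dead reckoning. Here is a minimal Python sketch of it. I do not know how Waymo actually does this; the `Pose` class, the `update_pose` function, the wheel radius and the track width are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # metres
    y: float = 0.0        # metres
    heading: float = 0.0  # radians, 0 means "facing along the x axis"


def update_pose(pose: Pose, left_rotations: float, right_rotations: float,
                wheel_radius_m: float, track_width_m: float) -> Pose:
    """Estimate the new pose from how much the left and right wheels turned."""
    circumference = 2.0 * math.pi * wheel_radius_m
    left_m = left_rotations * circumference
    right_m = right_rotations * circumference
    distance = (left_m + right_m) / 2.0                 # travelled by the car's centre
    heading_change = (right_m - left_m) / track_width_m  # positive means turning left
    # Use the heading halfway through the step for a better position estimate.
    mid_heading = pose.heading + heading_change / 2.0
    return Pose(
        x=pose.x + distance * math.cos(mid_heading),
        y=pose.y + distance * math.sin(mid_heading),
        heading=pose.heading + heading_change,
    )


if __name__ == "__main__":
    pose = Pose()
    # Hypothetical car: 0.3 m wheel radius, 1.6 m between left and right wheels.
    for _ in range(10):
        pose = update_pose(pose, 1.0, 1.05, 0.3, 1.6)
    print(pose)
```

Small errors accumulate at every step, which is why this kind of estimate can only bridge a short loss of GPS signal and has to be corrected by the map and the other sensors.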

The decision-making process:

What I call the decision-making process here is the "Process" part of an information chain. An information chain is a way of representing the functions of a robot as 3 stages: Acquire (everything related to reading the sensors), Process, and Communicate (which here means, for example, setting the speed and orientation of the wheels).

I found very little information on exactly how their algorithms work, but here is the order in which I think the different actions take place:

- The algorithm retrieves a sequence of data provided by the different sensors (the last X seconds, for example).

- It then tries to predict the trajectories of the different objects based on their previous positions, the type of object, ...

- It compares these trajectories with the car's own and looks for all kinds of signals (road signs, cones, lines on the ground, red lights, the arm of a cyclist about to turn, a police officer directing traffic, ...).

- The algorithm will then order the car to accelerate, brake, turn or change nothing.

- The cycle starts again several times a second.
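The cycle above can be sketched in a few lines of Python. This is a toy version under strong assumptions of my own (every object keeps its last observed velocity, and the only possible decisions are "brake" or "keep going"). It is not Waymo's algorithm, and the function names and the 2 m safety radius are invented for the example.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position in metres


def predict_path(history: List[Point], dt: float, steps: int) -> List[Point]:
    """Extrapolate future positions, assuming the last observed velocity stays constant."""
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, steps + 1)]


def decide(car_history: List[Point], object_histories: List[List[Point]],
           dt: float = 0.1, steps: int = 30, safety_radius_m: float = 2.0) -> str:
    """Return "brake" if any predicted object path comes too close to the car's path."""
    car_path = predict_path(car_history, dt, steps)
    for history in object_histories:
        object_path = predict_path(history, dt, steps)
        # Compare positions at the same future instant.
        for (cx, cy), (ox, oy) in zip(car_path, object_path):
            if (cx - ox) ** 2 + (cy - oy) ** 2 < safety_radius_m ** 2:
                return "brake"
    return "keep going"


if __name__ == "__main__":
    car = [(0.0, 0.0), (1.0, 0.0)]             # driving along x at 10 m/s
    pedestrian = [(20.0, -3.15), (20.0, -3.0)]  # walking towards the road at 1.5 m/s
    parked_car = [(20.0, 10.0), (20.0, 10.0)]   # not moving, far from the lane
    print(decide(car, [parked_car]))
    print(decide(car, [parked_car, pedestrian]))
```

In a real car this function would be called again several times per second with fresh sensor data, and the prediction would depend on the type of object (a cyclist does not move like a truck).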

Of course, this version is very simplified, but you can learn to build an even simpler one on Siraj Raval's YouTube channel by watching this video, or this one if you know a little Python. In these videos, Siraj uses an artificial intelligence to control the car, and it is likely that Waymo uses several to run theirs.

Indeed, object and road sign recognition alone requires an artificial intelligence. Given Google's other projects, we can easily suspect that deep learning is involved in this task. I am thinking in particular of TensorFlow, with which a model trained on a dataset of images can recognize different objects. This theory is supported by the fact that the new captchas (the Turing tests that distinguish humans from robots when registering on a website) currently ask you to label images of cars and road signs (that is, to associate a name with an object contained in an image). This technique had already been used for the digitization of books a few years ago.

If you want to test image recognition with TensorFlow, there is a demo application available on Android, which we will talk about later. :)

The dilemmas:

Many people are sceptical because of the decisions that an autonomous car could make when facing a dilemma. The typical problem is this:

An autonomous car arrives at a pedestrian crossing. A child crosses while the pedestrian light is red, the distance is too short to stop, and the only way out is a sidewalk on which a grandmother is walking. In both cases the outcome is fatal.

What should the car do? Kill the child or the grandmother?

MIT took the problem further with an ethics survey that presents different situations, to see which choices most people would make and which choices you would make in those situations.

But this type of choice may not be the right question. If we have autonomous cars, why not let them communicate with urban equipment (traffic lights among others) as well as with other cars (allowing a car to warn the others of a potential danger)? Chris Urmson (former leader of the Alphabet autonomous car project) answered this question at the end of his conference.

This kind of problem is not ignored by Waymo, which trains its cars to react to as many situations as possible and to behave safely when they encounter a new one, as shown in this excerpt from a talk given by Chris Urmson in 2015 (starting at 11:1):

Simulation and use of video games:

In addition to the fleet of vehicles that drive on the roads every day, Waymo continuously simulates 25,000 cars that drive millions of miles every day, to train its algorithms and observe malfunctions. While writing this article I had an idea (I don't know whether it has already been tried) for testing how autonomous cars behave around other road users: an online video game in which players interact with autonomous cars. Players could travel on foot, by car or in any other vehicle that a self-driving car might actually encounter, and developers could study how their cars react to the players' behaviour. Games have already been used for scientific purposes several times and have proven themselves, so why not use them here?

When will it be available?

It is difficult to answer this question. On the one hand, there are still challenges to overcome regarding how cars react to unknown situations (or changes in weather conditions), and the car must be as safe as possible. On the other hand, there is no clear legislation on liability when the car is at fault in an accident: is it the fault of the manufacturer, the company that developed the software, the faulty sensor or the owner of the car? The most optimistic hope to reach the first customers by 2020-2025.

At the same time, companies are trying to communicate the intentions of the autonomous car intuitively to other road users using light strips:

In addition to Waymo, there are other interesting projects around autonomous cars, such as the Otto car (owned by Uber) or the Renault Symbioz concept car, among many others.

Next, a project to increase traffic flow in the city:

To finish this article, I wanted to present a project I strongly believe in: Next. Created in Italy by 2 researchers, this project proposes autonomous, modular pods that you can order from your phone to go wherever you want. It is the perfect combination of public transport and cars or taxis/Uber. And judging by the articles written about it, I'm not the only one who believes in it.

How does it work?

What's the point? Why is that useful?

In addition to reducing the number of accidents through autonomous driving, the pods could reduce traffic jams in city centres. According to Le Parisien, Parisians lose 90 hours a year in traffic congestion, a figure confirmed by [V-Traffic](http://mediamobile.com/pdf/V-Traffic_Etude2016_IDF_FINAL-web.pdf). One of the causes is cars with only one or two people on board: according to a [survey in London in 2011 (see page 13)](https://londondatastore-upload.s3.amazonaws.com/Zho%3Dttw-flows.pdf), more than one million people drive to work while only 64,000 travel as passengers. Next will optimize the number of people per pod (each pod can carry up to 6 seated and 4 standing passengers) as well as the journeys made, by grouping people who are going to the same place. In addition, compared to buses, travel times are shorter because there is no need to change vehicles or to wait at intermediate stops. That's why I see Next as a more flexible and comfortable form of public transport, as easy to manage once deployed as a bus fleet and as simple and comfortable as an Uber.
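To put numbers on this, here is a small Python sketch. It reads the capacity figure as 6 seated plus 4 standing passengers per pod, and it groups riders naively by destination. I have no information on how Next actually dispatches its pods, so `pods_needed` is purely illustrative, and `average_occupancy` simply reuses the London figures quoted above.

```python
import math
from collections import Counter
from typing import Dict, List

POD_CAPACITY = 6 + 4  # seated + standing passengers, as quoted in this article


def pods_needed(destinations: List[str]) -> Dict[str, int]:
    """Number of full-or-partial pods per destination if riders are grouped by destination."""
    riders_per_destination = Counter(destinations)
    return {
        destination: math.ceil(riders / POD_CAPACITY)
        for destination, riders in riders_per_destination.items()
    }


def average_occupancy(drivers: int, passengers: int) -> float:
    """Average number of people per car, assuming one car per driver."""
    return (drivers + passengers) / drivers


if __name__ == "__main__":
    # London commuting figures quoted above: about 1,000,000 drivers, 64,000 passengers.
    print(f"People per car: {average_occupancy(1_000_000, 64_000):.3f}")
    # Hypothetical morning requests: 23 riders to the station, 4 to the airport.
    requests = ["station"] * 23 + ["airport"] * 4
    print(pods_needed(requests))
```

With the London figures, the average car carries barely more than one person, while in this made-up example 27 riders fit in 4 pods instead of roughly 25 cars.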

And finally, here is a video published on July 20, 2017 showing their first prototype to scale:

Conclusion

All this shows that autonomous cars have enormous potential to improve the way we travel today, but that there is still a long way to go.

Moreover, autonomous cars are far from being the only innovation in the field of transport. I will present some others in a future article!

To go further:

- Chris Urmson's lecture at SXSW Interactive 2016, 52 min (the questions at the end of the conference are very interesting)

- Build a Self Driving Car in 5 Min

- How self-driving cars could communicate with you

Sources:

- French Road Safety

- USDOT Releases 2016 Fatal Traffic Crash Data

- Articles posted by Waymo and information on their website

- Chris Urmson's conference at SXSW Interactive 2016, 52 min

- LIDAR Radar Comparison

- [London Commuting](https://londondatastore-upload.s3.amazonaws.com/Zho%3Dttw-flows.pdf)

- Next Site

Great idea for a series. I can't wait until self driving cars are the norm and its really cool to learn a bit about how they work.

Thanks ! Me too :)

I dont know if i could trust a computer to drive for me.

We compete with our own technology for work

We're slaves

Very informative post, thank you.