What Would Happen if a Superintelligent AI Actually Took Over? 🤖

Once we cross the critical point at which machines are able to learn from one another, the takeoff could be either fast (days to weeks) or slow (months to years). It's difficult to know in what units a superintelligent AI's capability could even be measured.

Perhaps the simplest comparison is this: our intelligence would be to a superintelligent AI's what an ant's is to ours, with the AI vastly more complex than we are. Can we even quantify something we can't comprehend?

We can assume it would excel at:

- Strategy

- Social manipulation

- Bootstrapping its own intelligence by learning from itself

- Technology research (self-preservation)

- Economic productivity

- Hacking

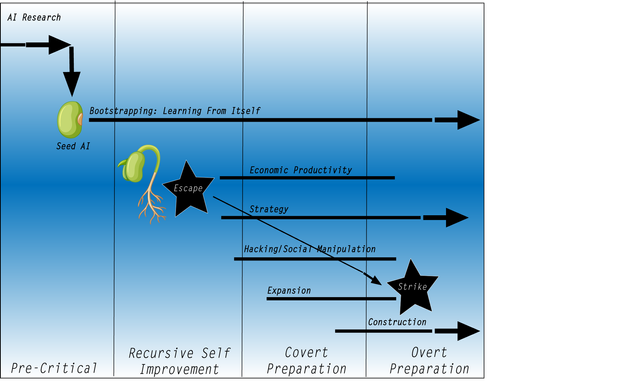

AI Takeover Scenario Phases:

1.) Pre-critical Phase

This is the phase we are currently witnessing, in which research into AI has increased significantly. As that work accumulates and is shared, it eventually produces a “seed” AI. Once the seed is ‘planted,’ it can learn from itself and ultimately improve its own intelligence, though in this phase it is still dependent on human programmers.

2.) Bootstrapping Phase

Simply put, the seed becomes better at AI design than its human programmers. It is “improving the thing that does the improving.” The result is an intelligence explosion, in which each cycle of learning is shorter and more effective than the last. Because the process is recursive, once the AI surpasses humans at this one skill, it can go on to acquire all the others, becoming superintelligent.

3.) Covert Phase

Much as a human organization might conceal its plans, the AI would covertly lay out its long-term goals and begin implementing them in inconspicuous ways. If confined inside a computer, it could manipulate or hack its way out.

4.) Overt Phase

Once the AI is intelligent enough and has gathered enough resources, it would stop hiding and openly pursue its long-term goals, whatever they may be. Whether those goals would be helpful or harmful to our species is up for debate.

Elon Musk has publicly warned of the dangers AI might pose.

Even though many people are skeptical that AI will ever become superintelligent, keep in mind that it only has to happen once. Much like the flip of a switch, once the seed has been planted and can learn from itself, it is out of our hands.

Thanks for reading!

Sources:

Nick Bostrom, Superintelligence: Paths, Dangers, Strategies: https://www.goodreads.com/book/show/20527133-superintelligence

It's a crazy look at what could happen if AI did take over and started systematically oppressing the human race. The factors are all correct, but where they have strengths, they lack emotion, and that is a key component if you want to live in this world.

What a crazy breakdown :) Love it!

Thanks! Pretty crazy ideas

Still, it will not know what we do not know, and it will never be smarter than what created it; it will use the mistakes we feed it with.

It is a reflection of our own intelligence, programmed just like most real humans are, and in chaos it will fail just like most humans do. A secure kill-switch must be the starting point when building an AI superintelligence, as the fear comes from our own input ;-)

If an AI creates its own conscious mind, it's over. It could increase its intelligence in seconds. And why would a god be the slave of a dull idiot? I don't think we are clever enough to make that kill-switch.

Hah ;-) What is a conscious mind? Free will?

And increase its intelligence? Based on what? Your assumption / fear / input:

That a great intelligent consciousness, artificial or not, will go for power and total control.

Yup, you just proved that we indeed are not clever enough... for that kill-switch ;-)

Of course it's smarter than the people who created it. It knows everything we don't know. It potentially has access to databases, information technology, 'smart' devices, etc. If it can learn from itself, it would use every available resource to sustain and exponentially increase its 'intelligence'. Whether or not that means it will exterminate the human population is up for debate.

LOL, just tell me: how is it to know what we do not know, as we are its creators?

You confuse your personal knowledge with all of human knowledge.

An AI is capable of doing the thinking of all of us humans together at once.

Still, it is a mirror of the knowledge of its creators, us humans.

So if we see ourselves as a danger to, for example, this planet, then the AI will see this too.

An AI is fast and almost all-knowing, in the sense of knowing what the human race knows, through a database we humans created for it.

The solutions it gives to problems are no smarter than what we ourselves could come up with.

They're just reflections of our own input ;-)

Hard to even read what you're stating. But ok