Zram and Zswap - The Full Rundown

It's no secret that witness nodes require an ever-growing amount of memory to function properly. The issue is far worse for full nodes, which require far more memory. A consensus node (what most witnesses use) requires an absolute minimum of 26GB at the time of writing, and that figure is expanding rapidly. In the very near future, a standard setup on a 32GB server won't be enough without memory compression.

This is not a guide to configuring Zram or Zswap on your server. Rather, it is an educational piece on the benefits and potential drawbacks of memory compression on Linux systems. The information applies to any server owner, but the target audience is witnesses who want to delay the inevitable upgrade of their servers.

What is Zram and Zswap?

Zram and Zswap provide memory compression for Linux-based systems, allowing you to fit more data in RAM at the expense of higher CPU usage on your server. Both ship with the Linux kernel by default on most distributions.

Disclaimer: Neither option provides filesystem page compression. Any pages swapped to a standard disk (or another swap device) will not be compressed.

What are the differences?

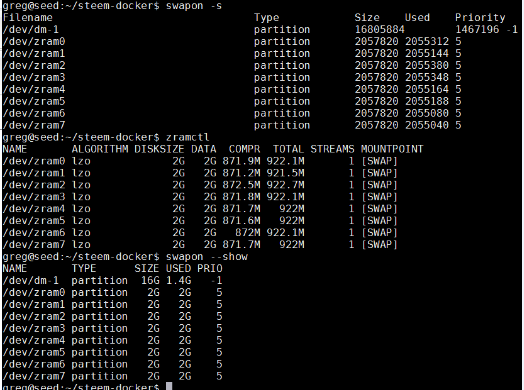

Zram works as a swap device in RAM. It is a bit more complicated to set up but flexible. When Linux decides it needs to start swapping, Zram compresses the page and stores it in RAM, so your data stays in RAM while appearing to be in "swap". Zram needs to be given a higher priority than all other swap devices so that regular swap devices on disk are not used first.
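One way to check the priority requirement is to read `/proc/swaps`, which lists every active swap device along with its priority. The following is a minimal sketch, not a configuration tool: the helper names `parse_swaps` and `zram_has_top_priority` are made up for illustration, and it assumes zram devices can be recognized by "zram" appearing in the device path (for example `/dev/zram0`).

```python
from pathlib import Path


def parse_swaps(text):
    """Parse the contents of /proc/swaps into a list of dicts."""
    entries = []
    for line in text.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 5:
            continue  # ignore anything that is not a full device row
        entries.append({
            "device": fields[0],
            "type": fields[1],
            "size_kib": int(fields[2]),
            "used_kib": int(fields[3]),
            "priority": int(fields[4]),
        })
    return entries


def zram_has_top_priority(entries):
    """True if every zram device outranks every non-zram swap device."""
    # Assumption: zram devices are identified by "zram" in the device path.
    zram = [e["priority"] for e in entries if "zram" in e["device"]]
    other = [e["priority"] for e in entries if "zram" not in e["device"]]
    if not zram:
        return False
    return not other or min(zram) > max(other)


if __name__ == "__main__":
    swaps = Path("/proc/swaps")
    if swaps.exists():
        entries = parse_swaps(swaps.read_text())
        for entry in entries:
            print(entry["device"], "priority", entry["priority"])
        print("zram preferred:", zram_has_top_priority(entries))
```

If the check reports that zram is not preferred, the kernel may fill the disk swap before it touches the compressed device, which defeats the purpose of the setup.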

Zswap works a bit differently from Zram. Rather than creating a separate swap device, it works in conjunction with your current swap, acting as a compressed in-memory cache in front of it. This makes efficient use of your disk swap and reduces system I/O. For the scope of this article, I will not be discussing the zpool types.
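Because Zswap is a kernel feature rather than a device, its state is exposed as module parameters under `/sys/module/zswap/parameters` (for example `enabled`, `compressor`, `zpool` and `max_pool_percent`). A small sketch for inspecting them is shown below. The function name `read_zswap_params` is hypothetical, and the exact set of parameters depends on your kernel version, so treat the output as informational only.

```python
from pathlib import Path

ZSWAP_PARAMS = Path("/sys/module/zswap/parameters")


def read_zswap_params(param_dir=ZSWAP_PARAMS):
    """Return {parameter name: value} for every readable file in param_dir."""
    params = {}
    param_dir = Path(param_dir)
    if not param_dir.is_dir():
        return params  # zswap is not available in this kernel
    for entry in sorted(param_dir.iterdir()):
        try:
            params[entry.name] = entry.read_text().strip()
        except OSError:
            continue  # skip parameters we are not allowed to read
    return params


if __name__ == "__main__":
    params = read_zswap_params()
    if not params:
        print("zswap parameters not found on this system")
    for name, value in params.items():
        print(f"{name} = {value}")
```

The directory is passed in as an argument so the function can be pointed at a copy of the files when experimenting away from the server.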

What happens if the memory is completely full?

If Zram or Zswap can no longer fit any more compressed data in RAM, pages will be swapped to a standard swap device on disk (assuming you have one configured). If both RAM and swap are full, processes will start to be killed. At that point you have exceeded the lifespan of your server and it's time to upgrade.

Which do I pick?

It depends on your system configuration. If you have or need a swap disk then use Zswap as it doesn't suffer from LRU inversion (more on that later). Zswap will efficiently utilize your swap with the in-memory compressed cache reducing IO on your disks and in memory. For standard server owners, this would be the one to pick.

Zram is best suited for servers that do not have a swap device. It does have the pitfall of LRU inversion (LRU is an abbreviation for "least recently used"): once Zram is full, new pages can go straight to disk swap while old pages stay in memory, which can greatly increase IO for disks and memory.

Disclaimer: I do not use Zswap and do not plan to. Theoretically, since the shared memory file is a memory mapped file, Zswap should be effective, but this has not been tested on a live system, and it's best to keep everything in RAM if you can!

Compression

There's an option to use the lzo or lz4 compression algorithms with either Zram or Zswap. lzo promises better compression, and lz4 promises better speeds, particularly for decompression.

Compressing the shared memory file

For these tests, I copied over a live shared_memory.bin file to get a ballpark estimate of what will happen in memory. I did not change any of the compression settings, so everything remained at the default. Each test was run three times and the results were averaged. As expected on a system with hardly any load on the processor, the times didn't fluctuate much.

I ran these tests on an Intel Xeon CPU E3-1270 v3 @ 3.50GHz using Arch Linux with the Meltdown and Spectre patches applied (which further slow down the system). To get accurate timings for your own system, you need to run the compression and decompression commands on the shared memory file yourself.
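The timing procedure can be sketched as a small harness that measures one compress and decompress round trip. This is not the method used for the numbers in this post: Python's standard library has no lzo or lz4 bindings, so `zlib` is swapped in purely as a stand-in, the input is synthetic data rather than a real shared_memory.bin, and the name `time_roundtrip` is made up. A real 26 GB file would also have to be processed in chunks rather than loaded into memory at once.

```python
import time
import zlib


def time_roundtrip(data, compress, decompress):
    """Time one compress/decompress round trip over an in-memory buffer."""
    start = time.perf_counter()
    packed = compress(data)
    compress_s = time.perf_counter() - start

    start = time.perf_counter()
    restored = decompress(packed)
    decompress_s = time.perf_counter() - start

    if restored != data:
        raise ValueError("round trip did not reproduce the input")
    return {
        "original_bytes": len(data),
        "compressed_bytes": len(packed),
        "compress_s": compress_s,
        "decompress_s": decompress_s,
    }


if __name__ == "__main__":
    # Synthetic, highly repetitive data; real node state will compress differently.
    sample = b"witness node state " * 100_000
    # zlib level 1 is only a stand-in for a speed-oriented compressor.
    result = time_roundtrip(sample, lambda d: zlib.compress(d, 1), zlib.decompress)
    print(result)
```

Passing the compressor and decompressor in as callables means the same harness could be reused with lzo or lz4 bindings if you have them installed.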

| stat | original | lzo | lz4 |
|---|---|---|---|
| size on disk | 26 GB | 10.4 GB | 11 GB |
| compression time | - | 2 minutes 7 seconds | 2 minutes 30 seconds |
| decompression time | - | 2 minutes 13 seconds | 2 minutes 0 seconds |

The results are promising! While the sizes and compression times are similar, lzo won on both total time and size. Overall, the data was cut to less than half its original size, which is perfect! These tests were run on a single core rather than multiple cores. Zram is capable of taking advantage of multi-core systems, so throughput will be even higher ;)
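The comparison can be reproduced with a few lines of arithmetic on the figures from the table. The sketch below only restates those measured numbers. The names `RESULTS`, `ORIGINAL_GB` and `summarize` are illustrative, and "lzo" refers to the first compressed column of the table.

```python
ORIGINAL_GB = 26.0

# Figures copied from the results table (times converted to seconds).
RESULTS = {
    "lzo": {"size_gb": 10.4, "compress_s": 2 * 60 + 7, "decompress_s": 2 * 60 + 13},
    "lz4": {"size_gb": 11.0, "compress_s": 2 * 60 + 30, "decompress_s": 2 * 60 + 0},
}


def summarize(result, original_gb=ORIGINAL_GB):
    """Derive compression ratio, remaining fraction and total time."""
    return {
        "ratio": original_gb / result["size_gb"],
        "fraction_of_original": result["size_gb"] / original_gb,
        "total_s": result["compress_s"] + result["decompress_s"],
    }


if __name__ == "__main__":
    for name, result in RESULTS.items():
        s = summarize(result)
        print(
            f"{name}: {s['ratio']:.2f}:1, "
            f"{s['fraction_of_original']:.0%} of original, "
            f"{s['total_s']} s total"
        )
```

Worked through by hand, lzo comes out at roughly 2.5:1 (about 40% of the original size) with 260 seconds of combined compression and decompression time, while lz4 comes out at roughly 2.36:1 (about 42%) with 270 seconds.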

Conclusion

Memory compression will certainly delay the inevitable growth in your server's RAM requirements. For the time being, it should be possible to fit a node on a server with as little as 16GB of RAM, though I'm not sure how long that will last once you take Steem's massive growth into consideration (which is wonderful). The CPU cost will have to be taken into consideration, but for most servers this shouldn't be an issue, as the algorithms used are designed for speed.

For server owners not running a node, you'll most likely want Zswap. Make sure to check with your system to see what's right for you.

