Simulating a Steem curation rewards distribution that is modeled after a 2nd price auction

This post describes some simulated results from a curation distribution algorithm that was inspired by the concept of a second-price auction.

Introduction

pixabay license: source

pixabay license: source

Some time ago, I had a conversation with @personz about the desirability of self-voting. I hope I'm not mis-characterizing the argument*, but as I recall, the position that @personz took was that self-voting conveys no information to the blockchain, so it should be considered equivalent to noise or spam. This led to the conclusion that all authors should abstain from self-voting.

(* - Clarification: "argument" as in "logical argument". The conversation was not at all confrontational.)

On the other hand, my position was that voting can be abused, whether by self or others, and the self-vote is a perfectly valid form of expression, provided that it's sincerely given in reflection of the merit of the content. In my own view, self-voting is ok, but it doesn't absolve the voter of the obligation to put an honest appraisal on the value of one's own post.

Of course, this is a long-standing topic of conversation among Steemizens, and I'm not trying to reopen the debate in this post, but I'd like to revisit that conversation in another context.

While we were talking, I said that it reminded me of the karate tournaments that @cmp2020 used to attend. In those tournaments, 5 judges would submit their scores, the lowest and highest scores would be thrown out, and the contestant would receive the average of the scores from the remaining 3 judges. This methodology eliminated outliers whose votes were wildly out of step with the judging consensus. I said I wondered if something similar could mitigate the harm from abusive votes (by self and others) in the Steem curation consensus.

Either during that conversation, or thinking about the karate tournaments & Steem voting shortly afterwards, I was reminded of an article that I had read some time before which indicated that Google had done some extensive game theory research with the problem of fair ranking in its Ad Words product, and the best solution they found was the 2nd price auction.

And there things stood. I have mentioned in a number of comments since that time that I thought it might be a good idea to explore this concept, but basically left it to others to figure out what a voting algorithm based upon a second price auction would even look like. Of course, this guaranteed that the idea would be ignored.

So, last week, I finally thought that I would go ahead and write a rewards simulator to see how a voting algorithm could even be modeled after the concept of a second price auction. Here's what I found.

What is a second price auction?

Simply put, in the second price auction, the winner of the auction is the person that bids the most, but the price they pay is not the price that they bid. Instead, the price they pay is the 2nd highest bid that was submitted.

As I recollect, the reason that Google chose this algorithm for their Ad Words product, is because of a convenient mathematical property, stated here on gametheory.net:

The theoretical nicety of second price auctions, first pointed out by William Vickrey, is that bidding one's true value is a dominant strategy

So the question is, can we find a curation rewards calculation that acts in similar fashion to the karate tournament scoring by weeding out outliers and like a 2nd-price auction by incentivizing voters to vote correctly (according to their own perceptions)?

How does this apply to Steem voting?

After commenting repeatedly that I wonder if there is a solution to Steem's curation problem in an algorithm that's modeled after a second price auction, I decided that I should take the idea past "hand-waving." In this context, what does "modeled after" even mean?

So last week, I wrote a shell script to loosely simulate basic curation rewards on a Steem post. (It ignores the reverse auction for early voting and negative voting) Once I had it so that it seemed to be close to matching actual payouts, I made a modified version that would accept the current voting information and calculate rewards as-if generated through a second price auction inspired algorithm.

In this version, the voting is all the same, but the rshares from the highest voter get thrown back into the rewards pool. The payouts are then calculated based on the total after those rshares get subtracted. This means that the highest and earliest voters still win the distribution, but the reward payment amounts are made as if the highest voter hadn't voted. Each voter gets credit for the rshares from the next-highest voter, with the exception of the lowest voter (in rshares), who gets credit for their own rshares - unless they're the only voter, then they get 0. (yeah, that seems like a kludge, but I just wanted to "sketch" the idea out.)

Using that script, here are the top 10 potential curation rewards on one of today's trending posts:

R E W A R D S

current | simulated

---------- ----------

21.187692 | 3.132037

11.524 | 10.155

11.08 | 16.51

10.0770 | 9.67429

6.268 | 5.795

2.5009 | 2.0895

1.190127 | 0.836698

0.461401 | 0.194006

0.447819 | 0.295523

0.2631 | 0.1247

And here is the same information from a post with a high amount of self-voting:

R E W A R D S

current | simulated

---------- ----------

5.665636 | 0.274980

1. | 0.3

0.505638 | 0.046393

0.309271 | 0.091192

0.148121 | 0.160000

0.147017 | 0.051299

0.069935 | 0.030932

0.0494 | 0.0188

0.039489 | 0.015661

0.036847 | 0.014599

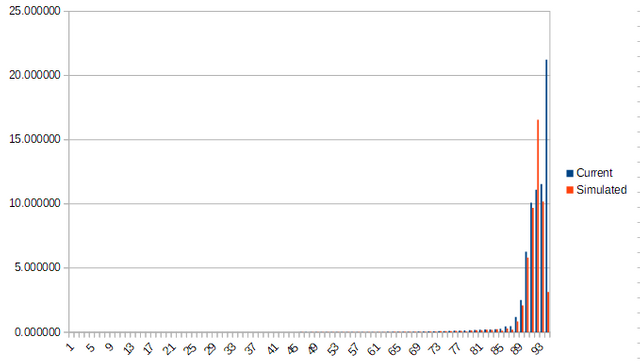

From the same trending post, here is a graph of current and simulated rewards, sorted by highest payout under the actual algorithm.

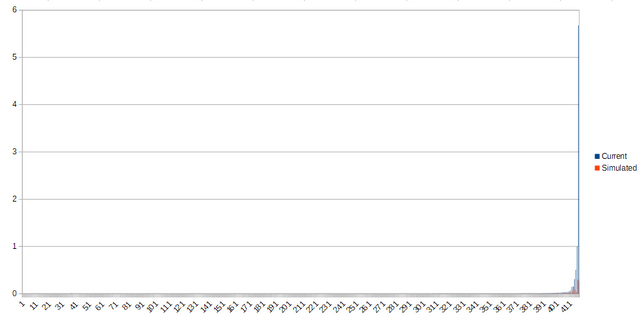

And here is a similar graph from the post with a high self-vote.

As you can see, in both posts, the outlier votes are drastically reduced, but voters who are inside the consensus range don't change as much. By punishing the outlier like that, it creates an incentive for a voter not to vote too far away from other voters - you know that even if you "win", your rewards are being calculated from someone else's vote, and it also creates a requirement that a post must get at least 2 votes to receive a payout.

Discussion

Of course, the simulator hasn't been validated and it can't predict how people's behavior would change in response to the methodological change. It also doesn't account for all the rshares getting put back into the reward pool. But, in order to "game" this algorithm, the abusive voter would need to divide their stake into 2 or more separate accounts, and they would have to be willing to sacrifice all of the rshares from one account. So while it may not be perfect, it seems that it may create a disincentive against abusive voting, whether by self or others.

It is also interesting that Steemit proposed the convergent linear solution in the days after I wrote this script. Since that proposal creates an incentive not to split one's stake, it might be interesting to see how these two proposals interact with each other.

I don't claim that this is a final answer to Steem's problem with inaccurate post ranking, but I do think that it may be worthy of further consideration.

Addendum: Here is the original conversation, in this post by @rycharde. Looks like I was mistaken to think that it first came up in our conversations about self-voting..

Thank you for your time and attention.

As a general rule, I up-vote comments that demonstrate "proof of reading".

Steve Palmer is an IT professional with three decades of professional experience in data communications and information systems. He holds a bachelor's degree in mathematics, a master's degree in computer science, and a master's degree in information systems and technology management. He has been awarded 3 US patents.

Steve is also a co-founder of the Steem's Best Classical Music Facebook page, and the @classical-music steemit curation account.

Follow in RSS: @remlaps, @remlaps-lite

Another possible consequence of this model is to incentivize last minute voting. Just wait until the last moment before the voting period and vote with a strenght just below the highest one as opossed to the current state of affairs where you are incentivized to vote around the 15 minute mark.

There are different ways you could implement it, but the way I did it still uses time of vote in determining rewards. Voters get credit for their own voting time with the next lower rshares, and it uses the same square root weighting as today. So early voters would still get the lion's share of the rewards. It probably wouldn't make sense to wait 'til the end.

You're right about the possibility of splitting one stake into multiple accounts. I mentioned that in the article. But, if you have a small number of accounts, you have to sacrifice a large percentage of rshares. If you have a large number of accounts, there's less of a built-in penalty, but it's easier for others to find it and downvote it. (and someone would have to decide if the largest downvote is also going to be ignored as an outlier. I did not include downvotes in the simulation.)

From a game theory perspective we probably need to formalize the model with a payoff matrix to see how different strategies playout. Although with the complexaties of the steem reward pool it's an ardous task.

I guess the thing to do is take a careful look at the paper with the Steem voting analysis, from my post the other day, as well as the original 2nd-price auction analysis by William Vickrey that gametheory.net references, and try to combine the methods for an analysis of 2nd-price inspired voting.

Probably not an effort that I'll have time for in the near future, though.

This is slightly off topic but Derek from Veritasium uploaded a video today that touches how Youtube ranks the content using it's algorithm, I find it interesting.

Actually it's somewhat on topic because Youtube utilizes it's algorithm to curate content for it's users.

Thanks. Looks interesting. I'll try to find time to watch it later today.

Just watched it. That was interesting. Thanks for the link.

Depressing points about the click-baity titles and clickable subjects, but I think he's right. It may not apply quite as much here since our feeds still follow the subscription model, and since trending posts rely on whale-votes or bidbots. But I'm sure clickable subjects and click-baity titles probably help a lot here, too.

Speaking of algorithms, have you checked Steeve? According to the app it uses AI to curate Steem content although I haven't looked much into how it works.

I tried it a while ago. Not recently, though. I like the concept, but I've been using steempeak, almost exclusively, 'cause I really like the grid view, and the split-screen editor. I guess for half-a-year or more. I check in on busy every once in a while, and I should probably also get in the habit of checking in on steeve periodically too, to see how it is progressing.

I guess clickable topics and click-baity titles would be more important there....

I think that a 2nd price auction model would incentivize splitting the stake of high powered accounts:

Let's say that my account can vote with a strenght of 2000 rshares and there is a post with 2 votes worth 100 rshares. I am incentivized to vote with the same 100 rshare strength. Otherwise my vote becomes an outlier if I vote above or below the current distribution.

If my goal is to maximize my curation rewards it is better for me to split my stake into 20 accounts and vote with an equal amount of voting power with all the accounts on the same post. Or I could split my account in 4 and vote with a strenght of 500 each and turn the other votes into outliers.

Or even better create a high number of accounts with a moderate amount of voting power and turn everyone else into outliers.