Precision computer simulations for particle colliders - a window on my research

Last week, I released a new scientific article on a topic that I have been working on since 2013. Six years of work, summarised in 70 pages, are now (freely) available from here.

This piece of work, addressing computer simulations, required a lot of effort, and I am happy to explain here why it is relevant and what it will change for the high-energy physics community.

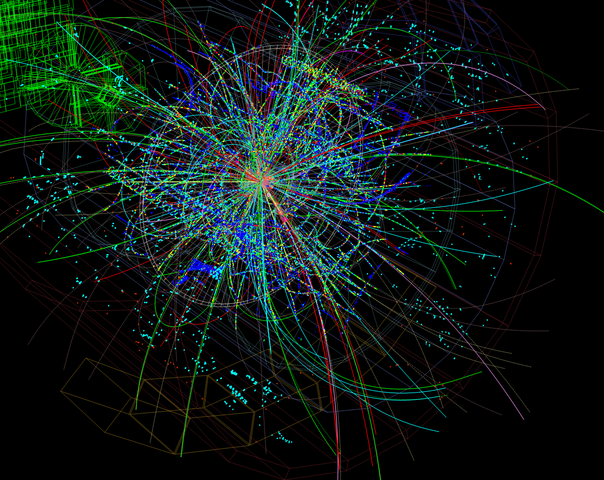

[image credits: Free-Photos (Pixabay) ]

Particle physicists dealing with collider studies, such as those at the CERN Large Hadron Collider (the LHC) or at any future project like the 100 km-long FCC collider, need to simulate billions of collisions on computers to understand what is going on.

This holds both for the background of the Standard Model and for any potential signal of a new phenomenon.

Tremendous efforts have been made concerning the former. While the Standard Model of particle physics is a well-established theory, we still want to test it at its deepest level. To this aim, we need extremely accurate predictions to verify that there is no deviation when the theory is compared with experiment.

Developments of the last couple of decades have allowed us to reach this goal. Any process of the Standard Model (i.e. the production of several known particles at colliders like the LHC) can today be simulated with impressive precision.

On different grounds, this also means that the background to any potentially observable new phenomenon is well controlled. In contrast, simulations of any signal of a new phenomenon are usually much cruder.

This is the problem that is tackled in my research article: simulations describing any process in any model (extending the Standard Model) can now be systematically and automatically achieved at a good level of precision.

Quantum field theory in a nutshell

The calculation of any quantity observable at colliders typically requires the numerical evaluation of complex integrals. Such integrals represent the sum of all the ways to produce a given set of particles. They can for instance be produced with one of them recoiling against all the others or with a pair of them being back-to-back. Every single available option is included in the integral.

[image credits: Pcharito (CC BY-SA 3.0) ]
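To give a feeling for what "numerically evaluating an integral over all the ways to produce the particles" means, here is a minimal sketch using Monte Carlo sampling, a common technique for this kind of problem. Everything in it is an illustrative assumption and not taken from the research article: the integrand `toy_integrand` (a simple function of the scattering angle), the helper `mc_integrate`, and the number of samples.

```python
import math
import random


def toy_integrand(cos_theta):
    """Hypothetical angular weight: how likely one configuration is."""
    return 1.0 + cos_theta ** 2


def mc_integrate(f, lo, hi, n_samples, seed=1234):
    """Estimate the integral of f over [lo, hi] from random samples.

    Returns the estimate and its statistical uncertainty.
    """
    rng = random.Random(seed)
    volume = hi - lo
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        value = f(rng.uniform(lo, hi))
        total += value
        total_sq += value * value
    mean = total / n_samples
    variance = max(total_sq / n_samples - mean * mean, 0.0)
    estimate = volume * mean
    error = volume * math.sqrt(variance / n_samples)
    return estimate, error


# Sum over all "configurations" (here: all values of cos(theta) in [-1, 1]).
estimate, error = mc_integrate(toy_integrand, -1.0, 1.0, 100_000)
exact = 8.0 / 3.0  # known analytically for this toy integrand
print(f"Monte Carlo estimate: {estimate:.4f} +/- {error:.4f} (exact: {exact:.4f})")
```

Each random draw plays the role of one simulated configuration. The more draws, the smaller the statistical uncertainty, which is one reason why billions of simulated collisions are needed in practice.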

One element of the calculation indicates how the particle physics model allows for the production of the final-state particles of interest.

This element is hence highly model-dependent: if one changes the model, one changes the way in which a specific final-state system is produced.

Moreover, there is also some process-dependence: if one considers other particles to be produced, it is simply a different calculation.

This is where an automated and systematic approach wins: it works regardless of the process and regardless of the model. The only thing the physicist has to do is to plug in the model of his/her dreams, and the process he/she is interested in.

Such an option has actually existed for many years, as can be read in this article that I wrote 10 years ago. The difference with respect to the article of last week lies in a single keyword: precision (in contrast to the crude predictions of my old article of 2009).

Precision predictions for new phenomena

Let’s go back for a minute to this model-dependent and process-dependent piece of the calculation. Its evaluation relies on perturbation theory, so that its analytical form can be organised as an infinite sum. Each term of the sum depends on a given power of a small parameter called αs: the first component is proportional to αs, the second to αs², and so on.

[image credits: Wolfgang Beyer (CC BY-SA 3.0) ]

Solely computing the first component of the series allows one to get the order of magnitude of the full result, with a huge error resulting from the truncation of the series to this leading-order piece.

The second component contributes as a small correction, but at the same time allows for a significant reduction of the uncertainties. This next-to-leading-order prediction is therefore more precise.

And so on… Higher orders lead to smaller and smaller contributions, together with a more and more precise outcome.
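The effect of truncating such a series can be illustrated with a toy example. The sketch below is not the actual physics calculation: the coefficients are hypothetical (each term is simply `C` times the small parameter times the previous one), chosen so that the full sum is known in closed form, and the value 0.118 for the small parameter is only an illustrative choice.

```python
ALPHA_S = 0.118  # illustrative value of the small parameter (assumption)
C = 3.0          # hypothetical growth factor of the toy coefficients


def partial_sum(order, alpha=ALPHA_S, c=C):
    """Sum of the first `order` terms of alpha + c*alpha**2 + c**2*alpha**3 + ..."""
    return sum(c ** (k - 1) * alpha ** k for k in range(1, order + 1))


def exact_result(alpha=ALPHA_S, c=C):
    """Closed form of the full toy series, valid as long as c*alpha < 1."""
    return alpha / (1.0 - c * alpha)


def relative_error(order):
    """Relative error made by keeping only the first `order` terms."""
    return abs(exact_result() - partial_sum(order)) / exact_result()


for order, label in enumerate(["LO", "NLO", "NNLO"], start=1):
    print(f"{label:>4}: {partial_sum(order):.4f} "
          f"(truncation error: {100 * relative_error(order):.1f}%)")
```

In this toy, keeping one, two and three terms leaves a truncation error of about 35%, 13% and 4% respectively. These numbers only illustrate the trend and are not those of the research article.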

The problem is that whilst the leading-order piece is something fairly easy to calculate, the next-to-leading-order one starts to be tough and the next ones are even harder. The reason lies in infinities appearing all over the place and canceling each other. However, at the numerical level, dealing with infinities is an issue so that appropriate methods need to be designed.
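The cancellation of infinities can be mimicked with a toy model. In the sketch below, which is only an assumption-laden illustration and not the method of the research article, two contributions (called `real_part` and `virtual_part` here, both hypothetical names) each blow up when a small cutoff `delta` is sent to zero, whereas their sum stays finite. The function `f` is an arbitrary smooth function chosen for the example.

```python
import math


def f(x):
    """Arbitrary smooth toy function (hypothetical choice)."""
    return math.exp(x)


def real_part(delta, n_steps=20_000):
    """Integral of f(x)/x from delta to 1; grows like -ln(delta) as delta -> 0.

    Uses the substitution x = exp(t) and the midpoint rule in t.
    """
    t_min = math.log(delta)
    step = -t_min / n_steps
    return step * sum(f(math.exp(t_min + (i + 0.5) * step)) for i in range(n_steps))


def virtual_part(delta):
    """Minus f(0) times the integral of 1/x from delta to 1, i.e. f(0)*ln(delta)."""
    return f(0.0) * math.log(delta)


def finite_sum(delta):
    """Sum of the two divergent pieces; the ln(delta) terms cancel."""
    return real_part(delta) + virtual_part(delta)


for delta in (1e-2, 1e-4, 1e-8):
    print(f"delta={delta:.0e}: real={real_part(delta):8.4f}, "
          f"virtual={virtual_part(delta):9.4f}, sum={finite_sum(delta):.6f}")
```

On a computer one cannot simply take `delta` to zero in each piece separately, since that means subtracting two huge numbers from each other. This is why the infinities have to be organised so that they cancel before any number is evaluated.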

My new research article provides the last missing ingredients: next-to-leading-order calculations for any process in any theory can now be achieved systematically and automatically.

An example to finish and conclude

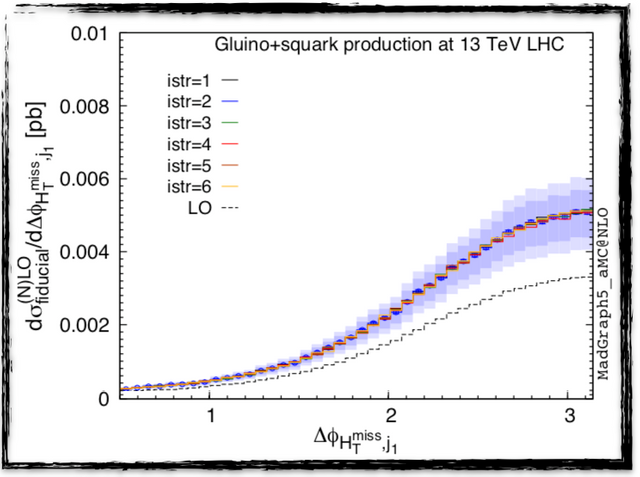

I present below the distribution of a physical quantity relevant for the searches for hypothetical particles called squarks and gluinos at the LHC (this is the x-axis). The y-axis shows the rate of new phenomena for given values of the physical quantity.

[image credits: arXiv ]

The black dashed curve shows the leading-order prediction (we truncate the series to its first component), whilst the solid red line in the middle of the blue band shows the next-to-leading-order result (we truncate the series to the sum of its first and second components). The impact of the next-to-leading-order correction is clearly visible when comparing the dashed black line with the solid red line: there is a large shift in the predictions.

The uncertainties attached to the leading-order curve are not shown, as they are very large. The next-to-leading-order ones are represented by the blue band. The improvement is described in the research article itself: we move from a 30%-45% level of precision (at leading order) to a 20% level of precision (at next-to-leading order).

To summarise, predictions for new phenomena searched for at colliders can now be precisely, systematically and automatically computed, which is crucial for our understanding of any potential excess that could be found in data in the upcoming years. Moreover, all codes that have been developed are public, open source, and efficient enough to run on standard laptops.


I never took calculus and only took an introductory course in physics, and yet I can appreciate your accomplishment. You have systematized predictions so that certainty (not absolute) can be dramatically increased. Or am I mistaken?

Wow! I know you don't get rewarded financially commensurate with what you are contributing to science--contributing to all of us. But what it must feel like to achieve such a thing.

I was reading a few nights ago about Kepler...actually, Galileo, Kepler and Tycho Brahe. As I read about their uneven steps toward calculating planetary distances and rotations, I thought of you and your place on the continuum of science.

Thank you for what you do and for sharing your insights with people like me. I feel like more than a passive witness to human progress.

I will try to read your paper, though I'm not sure I will understand much of it. But, you never know...

Thanks for passing by and writing such a nice comment.

This is a correct one-sentence summary: we (I was not alone in this adventure) added new ingredients to an existing code so that predictions for any process could be achieved systematically. Moreover, these predictions are accompanied by small error bars (in particle physics, we also have theory error bars).

Ohhh I should not be compared with those people. Definitely not. I am relatively no one, and they are big names and key scientists of our world!

Feel free, and do not hesitate, to ask me questions! I will be more than happy to answer (also on discord).

Your modesty is becoming, however...measurement was at the heart of Kepler's contributions (if I understand this correctly)

And I remember reading about Marie Curie, and how she painstakingly measured minute quantities of pitchblende in her radium studies.

The importance of accurate measurement cannot be overstated. So, yes, you've put another link in the chain that reaches back to first inquiring ancients who looked up into the skies. Advancing wonderment to understanding required measurement, accurate measurement. Forgive me for being impressed by your work :)

I will read that paper and ask questions if I understand enough to do that :))

Well, I cannot just let anyone compare me with those big names. Really. But it is appreciated.

However, one important point is that my work addresses accurate theoretical predictions, because in particle physics, theory predictions also come with an error bar (as one always needs to approximate the full calculation that cannot be done exactly).

Please do so!

well, if you go back far enough, further than galileo even, the alchemists of old did nothing but empirical research, would have been quacks now but in essence went over whole combinations to gather datasets, including some of my personal heroes like Theo Paracelsus they were very very early pioneers ... in chemistry ... in biology, in lots of things :)

Theo Paracelsus. I have to look that up.

Measuring and collecting data...it doesn't seem glamorous, but is the heart of good research.

Thanks for your comment.

@lemouth :

Is this link correct? First of all, it is not 70 pages. It is only 26 pages. Secondly, this is some other Benjamin in the authorship. I am sure this is a typo.

this is the correct link I guess: https://arxiv.org/pdf/1907.04898.pdf

Indeed, this was a typo and I have now fixed it. The initial link was totally wrong...

Thanks for pointing it out (and I am also happy to know that at least one person tried the link :D). This is fixed all over the article now.

I was curious since it was about simulations. :)

Curiosity is a nice quality ^^

Hello, sensei, sashi bu ri ... (<-rudyardcatling but that one is always short of rc lately) ...

I think i'll put this aside for the morning coffee and put my smart-people hat on before i try to read it :D

I guess (on a scan of the post) all systems suffer from some kind of butterfly-effect and the more complex they get the harder it might be to discern but the more impact a very small notch might have in the long run (and ever-increasing to afterwards ofcourse). I'm still not sure how analogue life can be placed in digital computation, so it must be an approximation at the highest possible resolution (or else the whole universe is not analogue but actual ones and zeroes, which i think is somewhat of a scary concept lol) So you could in essence keep on increasing the accuracy but mathematically between any two given points you always have an infinite number of points so its a bit of a never-ending task you got there ... im gonna try to read the paper but my school-vocabulary isnt all that, long time, hope you're doing well, always a pleasure !!

... good morning then again , i'd say ...

if every physical question can be answered by the theory, then the theory is said to be complete ...

has been proven to be an extremely successful theory ... *its predictions agree well with the vast majority of the data collected so far* ... Despite its success, however, the SM leaves some deep questions unanswered, and suffers from various conceptual issues and limitations .... truncated ... normalization :D when working with soundwaves that basically means clipping off the outer edges, effectively losing a lot of the original data ... a 30%-45% level of precision ...

It's about probability is it , no one can really say it's "like that" ... if i didnt know any better i'd say the atheists are living on a prayer lol (sorry, you know i respect all the work and i'm a great fan of gravity, entropy and equilibrium) but it often baffles me how much "uncertainty" there is compared to what is accepted to be , with 95% of the universe not having been proven to exist you sure are frontier workers :D that document's gonna take me a while to digest, sensei, i might be back with questions, thank for the post

Hey! Nice to hear from you from this alternative account. I am happy to see that you are still around.

Maybe one thing you could do, instead of trying to read the entire paper, would be to focus on the introduction, where we present the problem we are trying to solve and explain why it is important for our field. What do you think?

The Standard Model is not complete. Even when Jay Wacker says that the Standard Model of the 1990s is complete, I disagree with the statement. There are still many quantities of the Standard Model of the 1990s that have not been measured, and that we won't be able to measure for at least 50 years. So... until one gets there, there is still work to do, IMO.

In particle physics, every theory prediction comes with an error bar, so that the central value is what it is, but it would not be shocking if the right answer were elsewhere within the error bar.

I hope I clarified a little bit :)

ofcourse :) - i don't think one will simply get there ... once the key Kolwynia has been found, then all is said and done after all (it's a reference to jack of shadows/Roger Zelazny). Unless the universe is digital or mathematics is wrong as a language at the very core then it's virtually impossible to actually ever get there, one could only hope to get close , around somewhere , right ?

You can't really box analogue strings like that, be they soundwaves or any waves, there's always a higher resolution so yea i understand you go for the most likely part of the dataset that has the biggest ... how should i say in my own cat-language ... "density" ? the part where most data would reside as closer to ones than zeroes because that is more like the direction , it's vectors, right ? not a square spreadsheet ... quantum mechanics is fascinating matter indeed as the pun goes, but i have to admit after reading three of the pages i still have to decipher the first word into human language because it seems to be some kind of alien manuscript brought back by the mars rover so far :p but i'm sure some things will dawn as they always do, and once there is a hook to attach to things become clearer

(so i'm probably speaking in tongues to you too, but i'm not, am i ... its just like a way of looking at it from another dimension) i do understand most of the core , its the numbers in your field that are just too much for me as i never really got trained into that and i doubt i still will, unless i'd dedicate my last good 20 years to it ... but i got much life to catch up on , ANYWAY ! i'm gonna keep at it until i get the root of it at least, but i think your advise might be wise

(as usual, that's why you are the sensei ;-)

@lemouth

if i may add, in my semi-mystical viewpoint, living in the metaverse where the first one to explain entropy to me as a kid was Jack Vance in the dying earth ( :) ) ... You and yours are much like the celestial bodies "falling" around the center, trying to reach it but never getting really there" , yet nothing stops searching, it's like the whole universe is actively seeking like euh

the truth ?

the center ... balance ? but if it ever did find that point then all would stop, right ? nothing would move and for lack of friction would there still be anything left as there would be like no energy ?

lol, maybe i should start my own cult :D always a pleasure, sensei !!

> [...] one could only hope to get close , around somewhere , right ?

Not even that: we can only get closer, nothing more.

From your message, it is not clear. But have you tried to read the actual article? Trying to get the intro would be a nice goal :)

i have, and i still have it in a tab, but as opposed to most other articles or newsletters it doesn't seem to be something i can just read in-between like i usually do when i switch screens or tabs heh , i will certainly try to at least get the notion, you know i think it's fascinating matter can't get more cutting edge than looking for the missing 95% of existence imo :-) so far i can deduce it's all about error margins and getting a usable set out of a whole cloud of unstructured data, but i have to admit it's a bit above my braingrade, maybe not the concepts, but the language used is very technical and probably since it's mainly directed at people who are on it on the daily, nonetheless ... i'm not done with it yet :p

I just downloaded the research work. My first view into the material, I saw supersymmetric Lagrangian... I hope I'd be able to understand it.

I guess I'll take time to read it. Thanks for sharing sir

The equations are rather technical. In order to understand them, do you know what the spin-statistics theorem and the Noether theorem are? If yes, and if you additionally have some quantum field theory basics, I can point you to some references (I know, this is a lot of requirements ;) ).

Sincerely, I neither know about the spin-statistics theorem nor the Noether theorem, but now that you have mentioned it, I can read up a few things about it

It is very hard to explain this, as it is quite advanced. Without the prerequisites, it may be hardish. However, reading the introduction of my article and understanding it may be a nice target :)

Thanks for sharing education bro.

Thanks for the interesting post and research link! I was also confused by the arXiv link to a black hole article, but the corrected link in the comments cleared it up. I included a link to your post in my recent article, Science and technology micro-summaries for July 22, 2019 and set a beneficiary to direct 5% of that post's rewards to you.

Thanks a lot for featuring my article!

Is there still any issue with the links (I thought I fixed them all within the post)?

You're welcome. I think you're right, and the links are all fixed now. It was Sunday morning when I read it, so they hadn't been updated yet. I was about to comment at the time, but I refreshed the post and saw that someone else had already brought it to your attention.

Thanks in any case! This was a stupid copy-pasting error (I am reading so many articles on the arXiv that it is very easy to copy-paste the wrong identifier... ;) )


Congrats !

Thanks ^^
